How to Master MCP Server Composition Patterns

MCP server composition patterns help developers combine multiple Model Context Protocol servers into connected toolsets for AI agents. Instead of building one giant server for every tool and data source, you connect specialized servers for storage, compute, and APIs. This modular setup reduces integration time by 70%.

What Are MCP Server Composition Patterns?

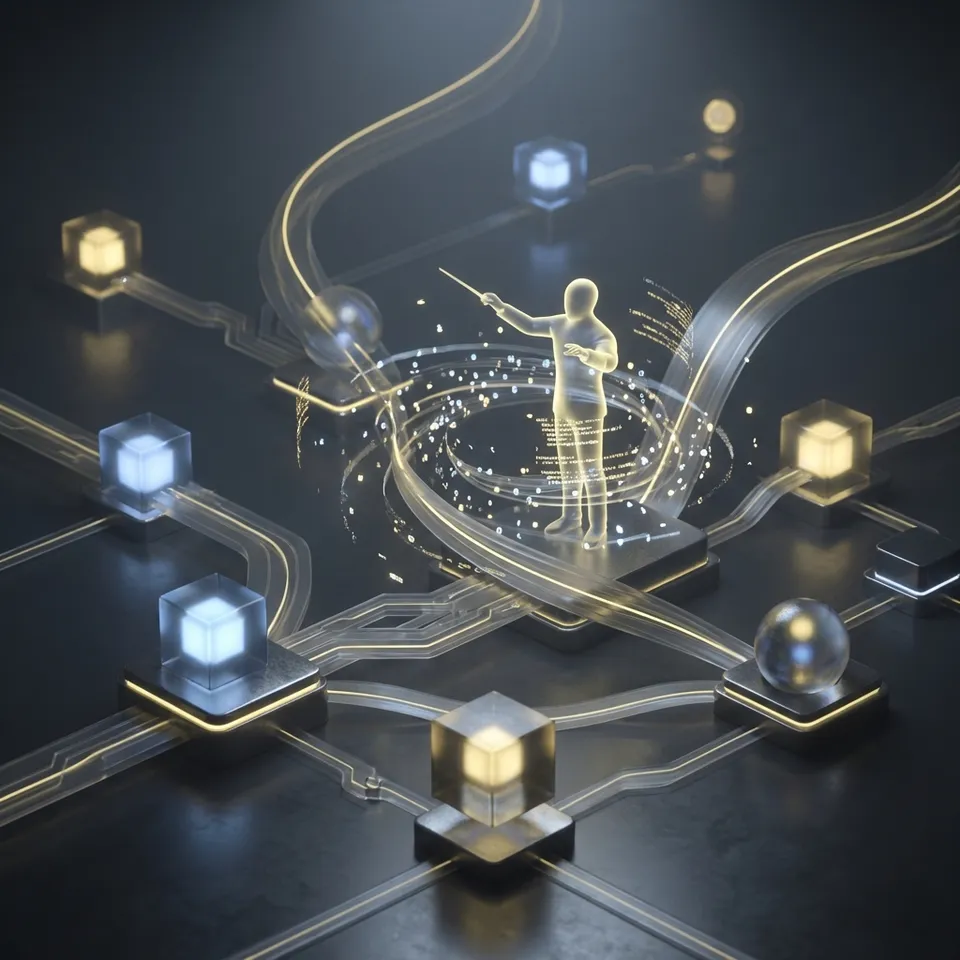

MCP server composition patterns are blueprints for connecting multiple Model Context Protocol (MCP) servers into a single system for AI agents. Instead of building one server that handles file storage, database access, and third-party APIs, developers use these patterns to organize specialized micro-servers.

In a composed architecture, your agent might connect to a "FileSystem" server for reading documents, a "Postgres" server for querying customer data, and a "Stripe" server for processing payments at the same time. The agent sees a unified list of tools, but the implementation is distributed.

Why Monolithic MCP Servers Fail

Early adopters of the Model Context Protocol often tried to wrap their entire backend into a single MCP server. This "monolith" approach quickly becomes unmanageable:

- Dependency Hell: A server needing both `ffmpeg` for video and `pg` for databases becomes bloated and hard to deploy.

- Security Risks: A single compromised server exposes all tools and data.

- Scaling Bottlenecks: You can't scale the video processing part without scaling the database part.

The Evolution to Composed Architectures

Production-grade AI agents now use an average of 4.5 separate MCP servers to complete complex workflows. By separating capabilities, teams can iterate faster. A platform team can maintain the "Infrastructure Server" while a product team works on the "Feature Server," with no coordination needed beyond the standard MCP interface.

The 4 Essential Composition Patterns

Building a reliable multi-server system requires choosing the right structure. These four patterns cover most AI agent use cases, from simple local scripts to enterprise-grade autonomous systems.

1. The Mesh Pattern (Federation)

In the Mesh pattern, the AI client (like Claude Desktop or a custom agent) connects directly to multiple MCP servers. The client maintains all connections and decides which server offers the right tool for the job.

Use Case: Ideal for local development and personal productivity agents where the user controls the environment.

Pros:

- Direct Connection: No middleware or proxy servers to slow down tool execution.

- Simplicity: Easy to set up with standard clients like Claude Desktop.

- Decentralized: No single point of failure; if one server goes down, others still work.

Cons:

- Client Complexity: The client must manage auth and connections for N servers.

- Security Gaps: Hard to enforce consistent policies across different servers.
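The client-side bookkeeping in a mesh can be sketched in TypeScript as follows. This is a minimal illustration that assumes the connections are already open and each server's tool names have been fetched; the `ServerConnection` type, the `buildToolIndex` function, and the server and tool names are hypothetical and not part of any MCP SDK.

```typescript
// Hypothetical stand-in for a live MCP connection held by the client.
interface ServerConnection {
  name: string;
  tools: string[]; // tool names this server advertised
}

// Build a lookup from tool name to the server that offers it.
// In a mesh, this bookkeeping lives in the client, not in a proxy.
function buildToolIndex(servers: ServerConnection[]): Map<string, string> {
  const index = new Map<string, string>();
  for (const server of servers) {
    for (const tool of server.tools) {
      const owner = index.get(tool);
      if (owner !== undefined) {
        throw new Error(`Tool "${tool}" is offered by both ${owner} and ${server.name}`);
      }
      index.set(tool, server.name);
    }
  }
  return index;
}

// Assumed example servers; the names are illustrative only.
const mesh: ServerConnection[] = [
  { name: "filesystem", tools: ["read_file", "list_directory"] },
  { name: "postgres", tools: ["query"] },
];

const toolIndex = buildToolIndex(mesh);
console.log(toolIndex.get("query")); // "postgres"
```

Failing on a duplicate tool name is one possible policy; a client could also namespace the tools instead.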

2. The Gateway Pattern (Aggregator)

A single "Gateway" MCP server acts as the entry point for the agent. This gateway sits in front of multiple backend MCP servers, combining their tools and resources into a single list. The agent connects only to the Gateway.

Use Case: Best for enterprise deployments where security, logging, and access control must be centralized.

Pros:

- Unified Interface: The agent sees one endpoint, simplifying client configuration.

- Centralized Security: Enforce auth, rate limiting, and logging in one place.

- Abstraction: You can swap backend servers without changing the agent's config.

Cons:

- Bottleneck Risk: The Gateway becomes a single point of failure and potential performance bottleneck.

- Complexity: Requires building and maintaining a custom routing layer.

3. The Sidecar Pattern

Inspired by Kubernetes sidecars, this pattern attaches a lightweight MCP server directly to a specific application or service. The server provides an interface only for that service. For example, a legacy SQL database might have a "SQL MCP Sidecar" that translates natural language requests into SQL queries.

Use Case: Perfect for updating legacy applications with AI capabilities without rewriting core logic.

Pros:

- Isolation: Failures in the sidecar don't crash the main application.

- Proximity: Low latency communication between the agent and the specific service.

- Modularity: Teams can deploy updates to the sidecar independently of the main app.

Cons:

- Resource Overhead: Running a sidecar for every service increases infrastructure costs.

- Management: Harder to manage updates across hundreds of sidecars.

4. The Hierarchical Pattern (Router)

For complex systems, servers are organized in a tree structure. A "Root" server offers high-level tools (e.g., "Onboard Employee") and delegates the actual work to specialized "Leaf" servers (e.g., "Create Email Account", "Provision Laptop").

Use Case: Necessary for autonomous agents that need to break down high-level goals into executable steps.

Pros:

- Encapsulation: Hides system complexity from the agent, preventing context window overload.

- Specialization: Leaf servers can be highly optimized for specific tasks.

- Reusability: High-level skills can be composed of different low-level tools depending on context.

Cons:

- Latency: Multiple hops between root and leaf servers can slow down execution.

- Debugging: Tracing errors through a multi-level hierarchy is difficult.
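A rough TypeScript sketch of the root-to-leaf delegation described above. The leaf tools here are in-memory stand-ins for calls to separate leaf servers, and every name (`LeafTool`, `leafTools`, `onboardEmployee`) is an assumption made for illustration.

```typescript
// Hypothetical leaf-tool signature: takes string arguments, returns a status message.
type LeafTool = (args: Record<string, string>) => Promise<string>;

// Stand-ins for calls to specialized leaf servers.
const leafTools: Record<string, LeafTool> = {
  createEmailAccount: async (args) => `email created for ${args.name}`,
  provisionLaptop: async (args) => `laptop provisioned for ${args.name}`,
};

// The root server exposes one high-level tool and hides the leaf calls behind it.
async function onboardEmployee(name: string): Promise<string[]> {
  const steps = ["createEmailAccount", "provisionLaptop"];
  const results: string[] = [];
  for (const step of steps) {
    const tool = leafTools[step];
    if (!tool) throw new Error(`Unknown leaf tool: ${step}`);
    results.push(await tool({ name }));
  }
  return results;
}

onboardEmployee("Ada").then((results) => console.log(results));
```

The agent only ever sees `onboardEmployee`, which keeps the leaf tools out of its context window.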

Orchestrating Multi-Server Workflows

Once you have multiple servers, the challenge shifts to orchestration. How do you ensure data flows correctly from one server to another?

Chaining Tools Across Servers

A common workflow involves fetching data from one server, processing it on another, and saving it to a third.

- Step 1: Agent calls `database_fetchUser` (Database Server).

- Step 2: Agent calls `compute_analyzeRisk` (Compute Server), passing the user data.

- Step 3: Agent calls `fs_saveReport` (Storage Server) to persist the results.
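The three steps above could be sketched in TypeScript like this. The `CallTool` signature, the argument shapes, and the `/reports/` path are assumptions for illustration; the fake implementation exists only to show the call order.

```typescript
// Hypothetical signature for invoking a tool on whichever server owns it.
type CallTool = (tool: string, args: Record<string, unknown>) => Promise<unknown>;

// Run the three-step chain: fetch, analyze, persist.
async function runRiskReport(callTool: CallTool, userId: string): Promise<unknown> {
  const user = await callTool("database_fetchUser", { userId });
  const risk = await callTool("compute_analyzeRisk", { user });
  // The report path is an assumed convention, not something the tools require.
  return callTool("fs_saveReport", { path: `/reports/${userId}.json`, content: risk });
}

// In-memory fake used only to exercise the chain; it records the call order.
const callLog: string[] = [];
const fakeCallTool: CallTool = async (tool, args) => {
  callLog.push(tool);
  if (tool === "database_fetchUser") return { id: args.userId, balance: 120 };
  if (tool === "compute_analyzeRisk") return { score: 0.2 };
  return { saved: true };
};

runRiskReport(fakeCallTool, "u1").then(() => console.log(callLog.join(" -> ")));
// database_fetchUser -> compute_analyzeRisk -> fs_saveReport
```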

Handling Failures

In a distributed system, partial failures are inevitable. If the Compute Server crashes after the agent has already fetched the user data, the agent needs a retry strategy.

- Idempotency: Ensure tools like `fs_saveReport` can be called multiple times without corrupting data.

- State Checkpointing: Save intermediate state to a persistent layer (like Fast.io) so the agent can resume workflows after a crash.
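A minimal TypeScript sketch of retrying a step and checkpointing its result. The `checkpoints` map is an in-memory stand-in for a persistent store, and the helper names and the retry count of 3 are assumptions. A production version would likely add a backoff delay between attempts.

```typescript
// Retry an async step a fixed number of times before giving up.
async function withRetry<T>(step: () => Promise<T>, maxAttempts: number): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await step();
    } catch (err) {
      lastError = err; // remember the failure and try again
    }
  }
  throw lastError;
}

// Hypothetical checkpoint store; a real one would live in persistent storage.
const checkpoints = new Map<string, unknown>();

// Run a named step once; on resume, reuse the saved result instead of re-running it.
async function checkpointed<T>(key: string, step: () => Promise<T>): Promise<T> {
  if (checkpoints.has(key)) return checkpoints.get(key) as T;
  const result = await withRetry(step, 3);
  checkpoints.set(key, result);
  return result;
}

// Simulated compute call that fails twice, then succeeds.
let calls = 0;
const flakyAnalyze = async (): Promise<number> => {
  calls++;
  if (calls < 3) throw new Error("compute server unavailable");
  return 0.2;
};

checkpointed("analyzeRisk:u1", flakyAnalyze).then((score) => console.log(score, calls));
```

Retries are only safe when the wrapped tool is idempotent, which is why the two practices are listed together.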

Managing State with a Shared Storage Backbone

One of the hardest problems in composed MCP architectures is state management. If your "Video Editor" server creates a file, how does your "YouTube Uploader" server access it? Sending large files as base64 strings through the LLM context window is slow and expensive.

The Shared Storage Pattern

The solution is to use a dedicated, shared storage layer that all servers can access. Fast.io handles this role in the MCP ecosystem.

How It Works:

- Reference Passing: Instead of passing file content, servers pass file paths or URLs.

- Central Repository: All servers mount the same Fast.io workspace. The "Video Editor" writes to `/processed/video.mp4`.

- Quick Access: The "YouTube Uploader" reads from `/processed/video.mp4`. Because Fast.io is a global edge storage network, this handoff is fast.

- File Locking: Fast.io provides file locking capabilities, preventing the Uploader from reading the file while the Editor is still writing it.

This approach turns your storage layer into the "bus" that connects all your specialized tools.
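To make reference passing and locking concrete, here is a small TypeScript sketch. `SharedWorkspace` is an in-memory stand-in written for this example; it is not the Fast.io API, and the method names are assumptions. The point is only that the tools exchange a path, never the file content.

```typescript
// In-memory stand-in for a shared workspace that every server mounts.
// This is NOT the Fast.io API; it only illustrates reference passing and locking.
class SharedWorkspace {
  private files = new Map<string, string>();
  private locks = new Set<string>();

  lock(path: string): void {
    if (this.locks.has(path)) throw new Error(`${path} is already locked`);
    this.locks.add(path);
  }

  unlock(path: string): void {
    this.locks.delete(path);
  }

  write(path: string, content: string): void {
    this.files.set(path, content);
  }

  read(path: string): string {
    if (this.locks.has(path)) throw new Error(`${path} is locked by a writer`);
    const content = this.files.get(path);
    if (content === undefined) throw new Error(`${path} not found`);
    return content;
  }
}

// "Video Editor" tool: writes the file under a lock and returns only its path.
function renderVideo(ws: SharedWorkspace): { path: string } {
  const path = "/processed/video.mp4";
  ws.lock(path);
  try {
    ws.write(path, "<encoded video bytes>");
  } finally {
    ws.unlock(path);
  }
  return { path };
}

// "YouTube Uploader" tool: receives the path, not the content.
function uploadVideo(ws: SharedWorkspace, ref: { path: string }): number {
  return ws.read(ref.path).length; // size of the content that would be uploaded
}

const workspace = new SharedWorkspace();
const videoRef = renderVideo(workspace);
console.log(uploadVideo(workspace, videoRef));
```

Only the small `{ path }` object would pass through the agent's context; the payload stays in storage.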

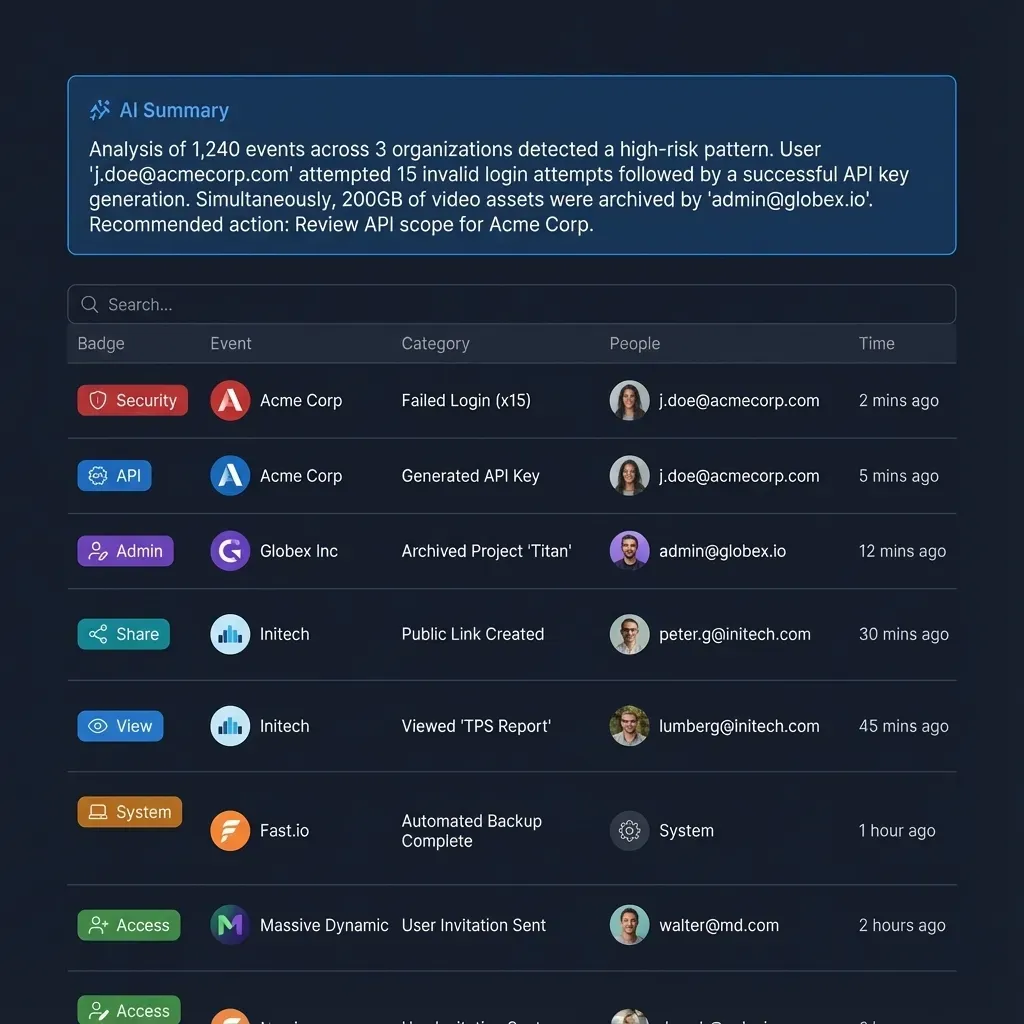

Security and Governance in Composed Systems

Adding more servers increases the attack surface. Security in a composed MCP environment relies on the "Principle of Least Privilege" applied at the server level.

Identity Propagation

When an agent connects to a Gateway, the Gateway must pass the user's identity downstream. Avoid using a single "super-admin" key for your Gateway. Instead, use scoped tokens or OAuth delegation to ensure the Gateway can only perform actions authorized for that specific user.
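One way to picture scoped delegation is the TypeScript sketch below. The `UserToken` shape, the scope strings, and the tool-to-scope table are all hypothetical; a real Gateway would derive the token from a verified JWT or an OAuth introspection response rather than a plain object.

```typescript
// Hypothetical shape of an already-validated user token.
interface UserToken {
  userId: string;
  scopes: string[]; // e.g. "payments:write"
}

// Hypothetical mapping from namespaced tool to the scope it requires.
const requiredScope = new Map<string, string>([
  ["payments_createCharge", "payments:write"],
  ["storage_listFiles", "storage:read"],
]);

// Return the credentials to pass downstream: the same user, narrowed to the
// single scope this call needs. Unknown tools and missing scopes are rejected.
function scopeDown(token: UserToken, tool: string): UserToken {
  const scope = requiredScope.get(tool);
  if (scope === undefined) {
    throw new Error(`Tool ${tool} has no scope mapping; denied by default`);
  }
  if (!token.scopes.includes(scope)) {
    throw new Error(`User ${token.userId} lacks scope ${scope} for ${tool}`);
  }
  return { userId: token.userId, scopes: [scope] };
}

const alice: UserToken = { userId: "alice", scopes: ["storage:read", "profile:read"] };
console.log(scopeDown(alice, "storage_listFiles")); // { userId: "alice", scopes: ["storage:read"] }
```

Narrowing the token before forwarding means a compromised backend never sees more authority than the single call required.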

Network Isolation

Not all MCP servers need to be public.

- Public Gateway: Expose only your Gateway to the internet (secured via mutual TLS or API keys).

- Private Mesh: Run your database, file system, and internal API servers in a private VPC. The Gateway connects to them over a secure internal network, shielding them from direct external attacks.

Centralized Audit Logging

You need to know what your agents are doing. Centralized logging is essential.

- Tool Logs: Record every tool execution, arguments, and result status.

- File Logs: Fast.io tracks all file operations, creating an immutable audit trail. You can see exactly which agent accessed "Q3_Financials.pdf" and when.
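Tool-level logging can be added as a wrapper around whatever function executes tools. The TypeScript sketch below assumes a hypothetical `ToolHandler` signature and writes to an in-memory array; a real deployment would send entries to a central log service instead.

```typescript
interface AuditEntry {
  timestamp: string;
  tool: string;
  args: unknown;
  status: "ok" | "error";
}

// Hypothetical signature for the function that actually executes a tool.
type ToolHandler = (tool: string, args: unknown) => Promise<unknown>;

// Wrap any tool handler so every call is recorded, whether it succeeds or fails.
function withAudit(handler: ToolHandler, sink: AuditEntry[]): ToolHandler {
  return async (tool, args) => {
    const entry: AuditEntry = {
      timestamp: new Date().toISOString(),
      tool,
      args,
      status: "ok",
    };
    try {
      return await handler(tool, args);
    } catch (err) {
      entry.status = "error";
      throw err; // the caller still sees the original failure
    } finally {
      sink.push(entry);
    }
  };
}

const auditLog: AuditEntry[] = [];
const audited = withAudit(async (tool) => `ran ${tool}`, auditLog);
audited("storage_listFiles", { dir: "/" }).then(() => console.log(auditLog));
```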

How to Implement a Simple MCP Gateway

Building a Gateway is a good way to start with advanced composition. Here is a conceptual workflow for a Node.js MCP Gateway that aggregates tools from multiple downstream servers.

Step 1: Define the Registry

Create a configuration file (e.g., `services.json`) listing your downstream servers and their transport endpoints (SSE URLs or stdio commands).

    {
      "servers": {
        "storage": "http://localhost:3001/sse",
        "payments": "http://localhost:3002/sse"
      }
    }
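Before connecting to anything, the Gateway could validate the registry. The TypeScript sketch below assumes the registry has already been read into a string and that every endpoint is an HTTP(S) URL; stdio commands are left out of scope. The `Registry` type and `parseRegistry` function are hypothetical names.

```typescript
// Shape of the registry shown above: server name -> transport endpoint.
interface Registry {
  servers: Record<string, string>;
}

// Parse registry JSON and reject entries that are not http(s) URLs.
// Stdio commands are deliberately not handled in this sketch.
function parseRegistry(json: string): Registry {
  const data: unknown = JSON.parse(json);
  if (typeof data !== "object" || data === null || !("servers" in data)) {
    throw new Error('Registry must have a "servers" object');
  }
  const servers = (data as { servers: unknown }).servers;
  if (typeof servers !== "object" || servers === null) {
    throw new Error('"servers" must be an object');
  }
  const result: Record<string, string> = {};
  for (const [name, endpoint] of Object.entries(servers)) {
    if (typeof endpoint !== "string" || !/^https?:\/\//.test(endpoint)) {
      throw new Error(`Server "${name}" needs an http(s) endpoint`);
    }
    result[name] = endpoint;
  }
  return { servers: result };
}

const registry = parseRegistry(
  '{"servers": {"storage": "http://localhost:3001/sse", "payments": "http://localhost:3002/sse"}}'
);
console.log(Object.keys(registry.servers)); // storage, payments
```

Failing fast on a malformed entry is a design choice; a Gateway could also skip bad entries and log a warning.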

Step 2: Tool Aggregation

On startup, your Gateway acts as an MCP client. It connects to every server in the registry and sends each one a `tools/list` request (exposed as `listTools()` in the official TypeScript SDK). It then merges the returned lists into a single tools array.

- Tip: Namespace your tools (e.g., `storage_listFiles`, `payments_createCharge`) to prevent naming collisions if two servers offer a tool with the same name.
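The merge-and-namespace step might look like the following TypeScript sketch. It assumes the per-server tool lists have already been fetched, and it uses a simplified, hypothetical `ToolInfo` type rather than the full tool schema from the MCP specification.

```typescript
// Simplified stand-in for a tool description; real MCP tools also carry an input schema.
interface ToolInfo {
  name: string;
  description: string;
}

// Merge per-server tool lists into one array, prefixing each name with its server key.
function aggregateTools(toolsByServer: Record<string, ToolInfo[]>): ToolInfo[] {
  const merged: ToolInfo[] = [];
  for (const [server, tools] of Object.entries(toolsByServer)) {
    for (const tool of tools) {
      merged.push({ ...tool, name: `${server}_${tool.name}` });
    }
  }
  return merged;
}

// Two servers that both offer "list" no longer collide after prefixing.
const allTools = aggregateTools({
  storage: [{ name: "list", description: "List files in a folder" }],
  payments: [{ name: "list", description: "List recent charges" }],
});
console.log(allTools.map((t) => t.name)); // storage_list, payments_list
```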

Step 3: Request Routing

Implement the handler for `tools/call` requests. When the agent requests `payments_createCharge`, your Gateway parses the tool name, identifies the prefix (`payments`), strips it, and forwards the call to the actual Payment Server under the tool's original name. It then relays the response back to the agent.
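The routing logic can be sketched in TypeScript as below. The `Forward` type stands in for whatever client object the Gateway holds per backend, and all names are hypothetical. The sketch assumes server keys contain no underscore, so the first underscore marks the end of the prefix.

```typescript
// Hypothetical function that sends a tool call to one backend server.
type Forward = (tool: string, args: unknown) => Promise<unknown>;

// Split "payments_createCharge" into server key "payments" and tool "createCharge".
// Only the first underscore is the separator, so tool names may contain more.
function parseToolName(namespaced: string): { server: string; tool: string } {
  const i = namespaced.indexOf("_");
  if (i <= 0 || i === namespaced.length - 1) {
    throw new Error(`Tool name "${namespaced}" is not namespaced`);
  }
  return { server: namespaced.slice(0, i), tool: namespaced.slice(i + 1) };
}

// Route a call to the backend registered under the prefix and relay its response.
async function routeCall(
  backends: Map<string, Forward>,
  namespaced: string,
  args: unknown
): Promise<unknown> {
  const { server, tool } = parseToolName(namespaced);
  const forward = backends.get(server);
  if (!forward) throw new Error(`No backend registered for "${server}"`);
  return forward(tool, args);
}

// Fake payments backend that echoes what it received.
const backends = new Map<string, Forward>([
  ["payments", async (tool, args) => ({ handledBy: "payments", tool, args })],
]);

routeCall(backends, "payments_createCharge", { amount: 500 }).then((res) => console.log(res));
```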

This simple proxy architecture allows you to add, remove, or upgrade backend servers without ever disconnecting or reconfiguring your AI agent.

Frequently Asked Questions

Can I use multiple MCP servers with Claude Desktop?

Yes. Claude Desktop natively supports the Mesh pattern. You can list multiple servers in your `claude_desktop_config.json` file under the `mcpServers` object. Claude will connect to all of them and present a unified list of tools to the model.

What is the main benefit of composing MCP servers?

Composition drives reusability and maintainability. You can build a generic 'Google Drive' MCP server once and reuse it across ten different agent applications, rather than hard-coding Google Drive logic into every single bot.

How do I handle authentication in a multi-server setup?

Use a centralized authentication service to issue short-lived tokens. Your Gateway should validate the initial token, and then pass scoped credentials to downstream servers. Alternatively, use a service mesh like Istio to handle service-to-service auth transparently.

Does Fast.io support custom MCP server composition?

Yes. Fast.io works as a storage component for any composed architecture. Whether you use a Gateway, Mesh, or Hierarchical pattern, Fast.io provides the persistent storage, file locking, and audit trails needed for reliable multi-server coordination.

What is the difference between an MCP Gateway and an API Gateway?

An API Gateway routes raw HTTP requests (GET, POST) to endpoints. An MCP Gateway routes semantic MCP messages (listTools, callTool, listResources) and aggregates capabilities so that multiple distinct servers appear as a single logical toolset to the AI.

How does latency affect composed MCP systems?

Latency can accumulate if you chain many servers together (e.g., Gateway -> Router -> Leaf). To minimize this, use persistent connections (SSE) instead of polling, keep servers in the same region, and use caching for resource-heavy operations.

Can I mix local and remote MCP servers?

Yes, this is a powerful hybrid pattern. You can run a local MCP server for sensitive file operations on your laptop while connecting to a remote MCP server for cloud computations. Your agent manages tasks across both environments.
