How to Optimize Token Costs for AI Agents

Running autonomous AI agents gets expensive. Unoptimized production sessions can cost $10-100+. This guide covers how to cut token usage and infrastructure costs with Retrieval-Augmented Generation (RAG), prompt caching, smart model routing, and efficient file storage. We'll look at the economics of agent workflows and walk through practical strategies that can reduce spend by over 80%.

Understanding AI Agent Token Costs

Optimizing token costs means reducing what you spend on LLM APIs. Key techniques include prompt compression, context windowing, caching, model routing, and grounding agents with RAG instead of stuffing full documents into prompts. Individual API calls look cheap, often costing fractions of a cent per 1,000 tokens. However, agents execute dozens of steps, loop through logic chains, and perform large data lookups to finish a single task. The costs break down into three main categories:

- Input Tokens: The context you feed the model (chat history, documents, system prompts). In complex agent workflows, this context grows with every turn.
- Output Tokens: The text the model generates. These usually cost 3x to 10x more per token than input tokens.
- Infrastructure & Storage: The cost of vector databases, embedding generation, and file storage. These often get overlooked but scale linearly with your data.

Poor context management is the most common cause of high AI costs, often accounting for 60-70% of total spend.

The Compound Interest of Multi-Step Workflows

Consider a "Researcher Agent" tasked with summarizing a 50-page PDF (approx. 25,000 tokens).

1. Naive Approach: The agent loads the full PDF into the context window for every question.
2. The Multiplier: If the agent takes 5 steps to refine its answer, asking itself follow-up questions, it re-sends those 25,000 tokens 5 times.
3. The Result: A single task consumes 125,000+ input tokens. At GPT-4o's launch price of $5 per million input tokens, that is over $0.60 for just one document (about half that at the later $2.50 rate). Multiply that by 1,000 documents, and you have a bill of more than $600 for a simple summarization job.

Fixing this workflow can bring that cost down to pennies.
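Here is a minimal Python sketch of the arithmetic above. The $5.00 rate is GPT-4o's launch input price. The RAG case assumes five 500-token chunks per step. Both figures are illustrative, so plug in your provider's current pricing and your own chunk settings.

```python
def input_cost(tokens_per_step: int, steps: int, usd_per_million: float) -> float:
    """Input-token cost when the same context is re-sent on every agent step."""
    return tokens_per_step * steps * usd_per_million / 1_000_000


PRICE = 5.00  # USD per 1M input tokens (GPT-4o launch price; check current pricing)

naive = input_cost(25_000, 5, PRICE)  # full PDF re-sent on every step
rag = input_cost(5 * 500, 5, PRICE)   # assumed: five 500-token chunks per step

print(f"naive: ${naive:.4f} per document, ${naive * 1_000:,.2f} per 1,000 documents")
# prints: naive: $0.6250 per document, $625.00 per 1,000 documents
print(f"rag:   ${rag:.4f} per document, ${rag * 1_000:,.2f} per 1,000 documents")
# prints: rag:   $0.0625 per document, $62.50 per 1,000 documents
```

Under these assumptions, retrieval cuts input spend by 90%. Output tokens are not counted here.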


Strategy 1: Use Retrieval-Augmented Generation (RAG)

To cut input costs, stop loading entire documents into the context window. A simple query doesn't need a 50-page PDF in the prompt. Use Retrieval-Augmented Generation (RAG) to fetch only the relevant paragraphs instead. Compared to reading full documents, RAG can reduce token usage by 60-80%. Only the necessary chunks reach the model, so the context window stays small and focused.

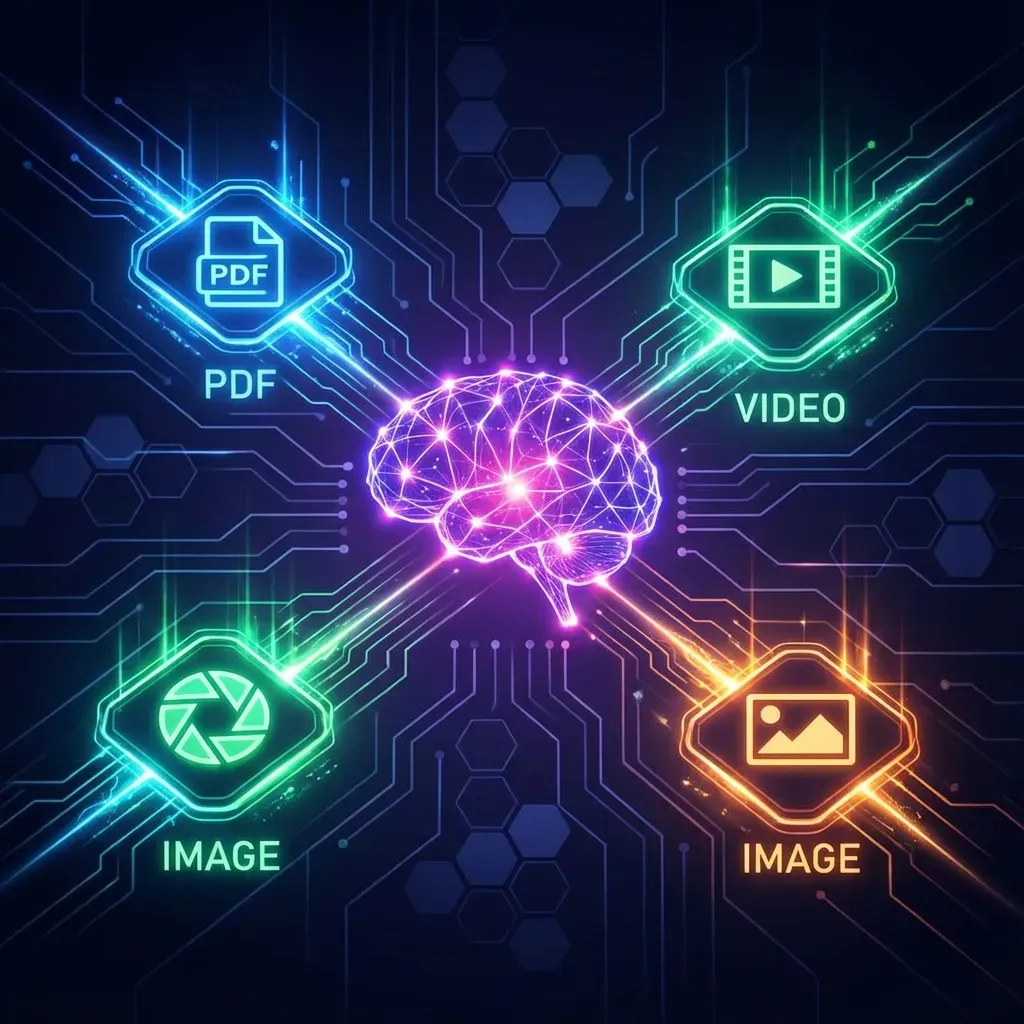

How RAG Works Under the Hood

1. Chunking: Your documents are split into small pieces (e.g., 500 tokens).
2. Embedding: These chunks are converted into numerical vectors that represent their meaning.
3. Retrieval: When an agent asks a question, the system finds the chunk vectors closest to the query's vector.
4. Generation: Only the top 3-5 most relevant chunks go to the LLM.
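The four steps can be sketched end to end in plain Python. This is a toy under stated assumptions. The `embed` function is a bag-of-words term count standing in for a real embedding model. Chunking is by words rather than tokens. Retrieval is a linear scan rather than a vector index. All function names are illustrative.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 50) -> list[str]:
    """Step 1: split text into pieces of roughly `size` words (stand-in for token-based chunking)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Step 2 (toy version): a bag-of-words term count instead of a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Step 3: return the k chunks whose vectors are closest to the query vector."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Step 4: only the retrieved chunks are sent to the LLM, not the whole document."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% monthly fee.",
]
top = retrieve("When are invoices due?", docs, k=2)
print(build_prompt("When are invoices due?", top))
```

In production you would swap `embed` for an embedding model and the sorted scan for a vector index. The pipeline shape stays the same.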

RAG vs. Long Context Windows

Some modern models offer 1-million-token context windows, which tempts developers to "stuff everything in." While convenient, this gets expensive for production agents:

- Cost: Processing 1M tokens costs roughly $2.50 to $15.00 per call, depending on the model.
- Accuracy: Because of the "lost in the middle" phenomenon, models often miss details buried in the middle of massive contexts.
- Latency: Processing huge contexts takes longer, which slows agent responses.

How Fast.io Helps: Fast.io's Intelligence Mode includes built-in RAG capabilities. When you enable it on a workspace, the system automatically indexes all files. Your agents can query the data using natural language without you needing to build pipelines, manage a separate vector database, or pay for external embedding generation.

Strategy 2: Intelligent Model Routing

Not every decision needs your most advanced model. Using a top-tier model like GPT-4o or Claude 3.5 Sonnet for simple classification tasks is overkill. It's like hiring a PhD to make coffee. Use a tiered model architecture that routes tasks based on complexity:

- Router Layer: Use a cheap, fast model (like GPT-4o-mini or Claude Haiku) to analyze the user's intent and decide which "expert" should handle the request.
- Worker Layer: Send simple tasks (formatting, summarization, keyword extraction, sentiment analysis) to smaller, cheaper models.
- Expert Layer: Send complex reasoning, coding, creative writing, or detailed analysis tasks to the flagship models.

This way, you only pay high prices for hard problems, which can lower your average cost per token by 40-50%.
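Here is a minimal sketch of the router layer. It reuses the router prompt from the Example Router Logic section. The model identifiers are hypothetical placeholders. `call_router` is an assumed callable wrapping whatever LLM client you use. A fake router is included so the example runs offline.

```python
from typing import Callable

ROUTER_PROMPT = (
    "Analyze the user's request. If it requires complex reasoning or coding, "
    "output 'expert'. If it is a simple factual question or formatting task, "
    "output 'worker'."
)

# Hypothetical model identifiers: substitute the cheap and flagship models you use.
MODELS = {"worker": "small-cheap-model", "expert": "flagship-model"}


def route(request: str, call_router: Callable[[str, str], str]) -> str:
    """Ask the cheap router model for a label and map it to a model name.

    `call_router(system_prompt, user_text)` is a placeholder for your LLM client.
    Any reply other than a clean 'worker' label falls back to the expert tier.
    """
    label = call_router(ROUTER_PROMPT, request).strip().strip("'\"").lower()
    return MODELS["worker"] if label == "worker" else MODELS["expert"]


def fake_router(system: str, text: str) -> str:
    """Stand-in for a real model call so the example runs offline."""
    return "'expert'" if "code" in text.lower() else "worker"


print(route("Reformat this date as ISO 8601", fake_router))  # small-cheap-model
print(route("Write code to parse this log", fake_router))    # flagship-model
```

Falling back to the expert tier on an unexpected label trades a little extra cost for safety. Flip the default if cost matters more than quality for your traffic.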

Example Router Logic

Your router prompt might look like this:

"Analyze the user's request. If it requires complex reasoning or coding, output 'expert'. If it is a simple factual question or formatting task, output 'worker'."

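Suppose 80% of your traffic is simple queries and the worker model is 30x cheaper. Routing those queries to the worker cuts your blended cost to about 23% of an all-flagship setup. That is a saving of roughly 77%, before counting the router's own (small) cost.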
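The blended-cost arithmetic can be written as a one-line function. This sketch treats the flagship price as 1.0. It ignores the cost of the router call itself, which is an assumption you should revisit if your router model is not trivially cheap.

```python
def blended_cost(simple_share: float, price_ratio: float) -> float:
    """Average cost per request relative to an all-flagship setup (1.0 = no savings).

    simple_share: fraction of requests routed to the worker model.
    price_ratio: how many times cheaper the worker model is (30 means 30x).
    The router's own per-request cost is ignored here.
    """
    return (1 - simple_share) + simple_share / price_ratio


relative = blended_cost(0.8, 30)
print(f"{relative:.1%} of the all-flagship cost, a saving of {1 - relative:.1%}")
# prints: 22.7% of the all-flagship cost, a saving of 77.3%
```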

Strategy 3: Use Prompt Caching

Many AI agents use long system prompts. These define their persona, tools, and rules. You send these identical instructions with every request. Prompt caching lets the LLM provider (like Anthropic or OpenAI) remember the start of your prompt. You don't pay full price to re-process those tokens for every turn. Instead, you pay a discounted "read" rate for cached tokens.

Best Practices for Maximizing Cache Hits

To use caching effectively, structure your prompts carefully. The cache works by matching the prefix of your prompt, so any change invalidates the cache from that point on.

1. Static Content First: Put your system instructions, persona definitions, and few-shot examples at the beginning. These rarely change.
2. Heavy Context Second: If you are analyzing a specific large document for a session, place it next.
3. Dynamic Content Last: Put the conversation history and the user's specific query at the end. The query changes every turn, so it always falls outside the cached prefix.
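Here is a minimal sketch of the three-part ordering as a request body. It assumes Anthropic's Messages API. That API marks the end of a cacheable prefix with a `cache_control` entry of type `ephemeral`. OpenAI instead caches long prompt prefixes automatically, so there the ordering alone matters. The model name and instruction text are placeholders. Providers typically require a minimum prefix length (usually at least 1,024 tokens) before anything is cached, so the short demo document below would not actually be cached.

```python
STATIC_INSTRUCTIONS = (
    "You are a research agent. Tools: search, read_file. "
    "Rules: cite every source. Be concise."
)


def build_request(document: str, history: list[dict], user_query: str) -> dict:
    """Assemble a request body with cacheable content first and dynamic content last.

    The `cache_control` marker follows Anthropic's Messages API prompt-caching format;
    the model name is a placeholder.
    """
    return {
        "model": "your-model-name",  # placeholder
        "max_tokens": 1024,
        "system": [
            # 1. Static content first: identical on every request.
            {"type": "text", "text": STATIC_INSTRUCTIONS},
            # 2. Heavy session context second. The marker ends the cached prefix here.
            {"type": "text", "text": document, "cache_control": {"type": "ephemeral"}},
        ],
        # 3. Dynamic content last: prior turns plus the new query.
        "messages": history + [{"role": "user", "content": user_query}],
    }


doc = "Full text of the large document analyzed in this session."
turn1 = build_request(doc, [], "Summarize chapter 1.")
turn2 = build_request(
    doc,
    [
        {"role": "user", "content": "Summarize chapter 1."},
        {"role": "assistant", "content": "Chapter 1 introduces the problem."},
    ],
    "Now summarize chapter 2.",
)
# The system blocks (the cacheable prefix) are identical across turns.
print(turn1["system"] == turn2["system"])  # True
```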

When to Use Prompt Caching vs. RAG

- Use RAG when you have a massive library of documents (GBs or TBs) and need to find specific needles in the haystack.
- Use Prompt Caching when you have a single large context (e.g., a 100-page book or a large codebase) that the agent needs to reference holistically and repeatedly during a conversation.

Strategy 4: Optimize Storage and Retrieval

Vector databases work well but get expensive. You pay for storage, indexing compute, and often the queries themselves. For many workflows, a hybrid approach using efficient file storage costs less.

Storage-First Approach

Don't chunk and embed everything into a vector DB. That creates a redundant copy of your data. You then have to pay to store and sync it. Instead, keep your raw data in an optimized file storage system. Use an agent that can read file metadata to find information. Only use vector search when you strictly need semantic retrieval.

Fast.io's Advantages for Agents

Fast.io offers a dedicated tier for AI agents with 50GB of free storage. This lets agents keep a large "long-term memory" of files, such as PDFs, videos, code, and datasets, without the high monthly fees of vector hosting services. More importantly, the Fast.io MCP Server allows agents to perform highly efficient file operations:

- Read Specific Lines: An agent can read lines 50-100 of a log file without downloading the entire gigabyte-sized file.
- Byte-Range Requests: Agents can extract headers or specific data chunks efficiently.
- Search via Metadata: Agents can filter thousands of files by date, type, or custom tags, finding the right file before spending a token on reading content.
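The same three operations can be illustrated against a local filesystem with the Python standard library. This is not the Fast.io MCP Server's API. It is a generic sketch, with hypothetical function names, of how an agent tool can return only the lines, bytes, or files it needs.

```python
import fnmatch
import os
import time
from itertools import islice


def read_lines(path: str, start: int, end: int) -> list[str]:
    """Return lines start..end (1-indexed, inclusive).

    The file is streamed, so earlier lines are scanned but never held in memory,
    and only the selected lines would be passed to the model.
    """
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        return list(islice(f, start - 1, end))


def read_bytes(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes from `offset`, the local analogue of an HTTP byte-range request."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def find_files(root: str, pattern: str, newer_than_days: float) -> list[str]:
    """Filter by name pattern and modification time before reading any content."""
    cutoff = time.time() - newer_than_days * 86_400
    hits = []
    for dirpath, _dirs, names in os.walk(root):
        for name in fnmatch.filter(names, pattern):
            full = os.path.join(dirpath, name)
            if os.path.getmtime(full) >= cutoff:
                hits.append(full)
    return sorted(hits)
```

A typical flow would call `find_files("logs", "*.log", 1)` to narrow the candidates. It would then call `read_lines(path, 50, 100)` on the match, so the model only ever sees 51 lines.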

Strategy 5: Control Output Verbosity

Output tokens often cost 3x to 10x more than input tokens, so controlling how much your agent writes is key. A "chatty" agent that writes polite preambles ("I would be happy to help you with that analysis...") burns money.

Techniques for Conciseness:

- Use `max_tokens`: Set a hard limit on response length. This stops runaway loops where a model might repeat itself.
- Prompt for Brevity: Tell the model in the system prompt: "Be concise. No filler words. No pleasantries. Reply with data only."
- Structured Output (JSON): Forcing the model to output strict JSON schemas often results in shorter, more predictable responses than free-form text. It eliminates "fluff" and ensures you get exactly the data fields you need.
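The three techniques combine in a single request. This is a minimal sketch shaped after OpenAI's Chat Completions API. It uses `max_tokens` and a `response_format` of type `json_schema` in strict mode. Newer OpenAI reasoning models take `max_completion_tokens` instead of `max_tokens`, so check which your model expects. The model name, the 60-token cap, and the ticket schema are illustrative assumptions. The request is only built, not sent.

```python
import json

SYSTEM_PROMPT = "Be concise. No filler words. No pleasantries. Reply with data only."

# Only the fields we actually need; strict mode rejects anything else.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string", "enum": ["billing", "bug", "other"]},
        "priority": {"type": "string", "enum": ["low", "medium", "high"]},
    },
    "required": ["category", "priority"],
    "additionalProperties": False,
}


def build_payload(ticket: str, model: str = "your-model-name") -> dict:
    """Chat Completions-style body with a hard output cap and a strict JSON schema."""
    return {
        "model": model,  # placeholder
        "max_tokens": 60,  # hard ceiling on billable output tokens
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": ticket},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "ticket", "schema": TICKET_SCHEMA, "strict": True},
        },
    }


def parse_reply(text: str) -> dict:
    """Parse the model's reply and reject any field outside the schema."""
    data = json.loads(text)
    if set(data) != set(TICKET_SCHEMA["required"]):
        raise ValueError(f"unexpected fields: {sorted(data)}")
    return data


print(parse_reply('{"category": "bug", "priority": "high"}'))
```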

Managing "Chain of Thought" Costs

"Chain of Thought" (CoT) asks the model to "think step-by-step." This improves reasoning but increases token usage.

- Selective CoT: Only ask for step-by-step reasoning on complex problems.
- Hidden CoT: Some newer reasoning models keep their "thinking" tokens hidden. Those tokens are usually still billed as output, so cap the thinking budget where the API allows it.
- Summarized CoT: For intermediate steps in a workflow, ask the model to "think silently" (if supported) or to output only the final result.

Strategy 6: Batch Processing for Non-Urgent Tasks

Does your agent need to answer right now? If you run background jobs, like categorizing a week's worth of support tickets or analyzing nightly logs, you don't need real-time latency.

Use Batch APIs

Major LLM providers like OpenAI offer Batch APIs. These allow you to send a file of thousands of requests and receive the results within 24 hours.

- The Benefit: Batch APIs often come with a 50% discount on token costs.
- The Trade-off: You wait hours instead of seconds.

For high-volume workflows, switching to batch processing is one of the simplest ways to cut your bill. It works without changing your prompt logic or model choice.
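Here is a minimal sketch of preparing a batch input file. It assumes OpenAI's Batch API JSONL layout: one request per line with `custom_id`, `method`, `url`, and `body`. The model name, system prompt, and ticket texts are placeholders. The code only builds the file contents. It does not call the API.

```python
import json


def to_batch_jsonl(prompts: dict[str, str], model: str) -> str:
    """Serialize requests into the JSONL layout OpenAI's Batch API expects:
    one JSON object per line with custom_id, method, url and body."""
    lines = []
    for custom_id, prompt in prompts.items():
        request = {
            "custom_id": custom_id,  # used to match each result back to its input
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "max_tokens": 20,
                "messages": [
                    {"role": "system", "content": "Reply with one word: billing, bug, or other."},
                    {"role": "user", "content": prompt},
                ],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"


tickets = {
    "ticket-001": "I was charged twice this month.",
    "ticket-002": "The export button crashes the app.",
}
batch_file = to_batch_jsonl(tickets, model="your-model-name")  # placeholder model name
print(batch_file)
```

With the official OpenAI Python SDK, you would save this string as a `.jsonl` file. You would upload it with `client.files.create(..., purpose="batch")` and submit it with `client.batches.create(input_file_id=..., endpoint="/v1/chat/completions", completion_window="24h")`. Confirm these details against the current Batch API documentation before relying on them.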

Monitoring and Alerts

You can't fix what you don't measure. Optimization isn't a one-time setup. It requires ongoing checks. Set up a dashboard to track key metrics.

Key Metrics to Watch:

- Cost per Session: The total cost to complete a user intent or task. If this spikes, your agent might be stuck in loops.
- Token Ratio: The ratio of input tokens to output tokens. A high input ratio might suggest inefficient context loading.
- Tool Usage Costs: Which tools are costing the most? Is the agent reading entire files unnecessarily? Is it calling a search API too often?
- Cache Hit Rate: If you are using prompt caching, ensure your hit rate is high (over 80%). If it's low, you might need to reorder your prompt structure.
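Three of these metrics can be computed directly from per-call usage logs. This sketch assumes a hypothetical `Call` record with the token counts most provider responses report. It defines cache hit rate as the share of input tokens served from cache, which is one common definition among several. Adapt the field names to whatever your logging actually captures.

```python
from dataclasses import dataclass


@dataclass
class Call:
    """One LLM call as recorded in your logs (field names are illustrative)."""
    session_id: str
    input_tokens: int
    cached_input_tokens: int  # part of input_tokens served from the prompt cache
    output_tokens: int
    cost_usd: float


def session_metrics(calls: list[Call]) -> dict[str, dict[str, float]]:
    """Aggregate cost per session, input:output token ratio, and cache hit rate."""
    totals: dict[str, dict[str, float]] = {}
    for c in calls:
        t = totals.setdefault(c.session_id, {"cost": 0.0, "in": 0, "cached": 0, "out": 0})
        t["cost"] += c.cost_usd
        t["in"] += c.input_tokens
        t["cached"] += c.cached_input_tokens
        t["out"] += c.output_tokens
    return {
        sid: {
            "cost_per_session": t["cost"],
            "token_ratio": t["in"] / t["out"] if t["out"] else float("inf"),
            # Token-weighted: share of input tokens that were cache reads.
            "cache_hit_rate": t["cached"] / t["in"] if t["in"] else 0.0,
        }
        for sid, t in totals.items()
    }


log = [
    Call("s1", 12_000, 10_000, 400, 0.021),
    Call("s1", 12_500, 10_000, 350, 0.022),
    Call("s2", 60_000, 0, 200, 0.150),
]
for sid, m in session_metrics(log).items():
    print(sid, m)
```

In this sample, session `s2` stands out. Its input-to-output ratio is 300:1 with no cache hits, which is the pattern of a whole document being reloaded into context.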

Budget Alerts

Set up hard and soft limits on your API accounts.

- Soft Limit: Send an email when you hit 50% and 80% of your monthly budget.
- Hard Limit: Automatically pause processing if you hit 100%. This prevents a "runaway agent" from draining your budget due to a coding bug.
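Provider dashboards offer account-level limits. You can also enforce the same policy inside your agent loop. This is a minimal application-side sketch. `BudgetGuard` is a hypothetical helper. `notify` defaults to `print` as a stand-in for sending an email. The agent is expected to call `check()` before each model request and `record()` after it.

```python
from typing import Callable


class BudgetGuard:
    """Soft alerts at 50% and 80% of the monthly budget; hard stop at 100%."""

    def __init__(
        self,
        monthly_budget: float,
        soft_thresholds: tuple[float, ...] = (0.5, 0.8),
        notify: Callable[[str], None] = print,
    ):
        self.budget = monthly_budget
        self.spent = 0.0
        self.pending = sorted(soft_thresholds)  # soft alerts not yet sent
        self.notify = notify  # e.g. send an email; print is a stand-in

    def record(self, cost: float) -> None:
        """Add the cost of a completed call and fire any soft alerts it crossed."""
        self.spent += cost
        while self.pending and self.spent >= self.pending[0] * self.budget:
            pct = self.pending.pop(0)
            self.notify(f"Soft limit: {pct:.0%} of ${self.budget:,.2f} budget used")

    def check(self) -> None:
        """Call before each model request; raises once the hard limit is reached."""
        if self.spent >= self.budget:
            raise RuntimeError("Hard limit reached: pausing agent")


guard = BudgetGuard(100.0)
for cost in (30.0, 25.0, 30.0):
    guard.check()
    guard.record(cost)  # the 50% alert fires after the 2nd call, 80% after the 3rd
```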

Frequently Asked Questions

How much does it cost to run an AI agent?

Costs vary based on model choice and complexity. Unoptimized production agents can cost $10-$100+ per session due to long context windows and multi-step loops. Using strategies like RAG, model routing, and prompt caching can reduce this spend by over 80%.

What is the cheapest way to run AI agents?

The cheapest approach combines a tiered model architecture (using small models for simple routing and formatting) with efficient storage like Fast.io's free agent tier. This minimizes compute costs and infrastructure overhead. Using Batch APIs for background tasks also cuts token costs by about 50%.

How does RAG reduce LLM token costs?

RAG (Retrieval-Augmented Generation) cuts costs by retrieving only the text chunks relevant to a query instead of feeding entire documents into the prompt. This shrinks the input context, and with it the cost of every call.

What is prompt caching and when should I use it?

Prompt caching lets LLM providers remember static parts of your prompt (like system instructions or reference texts) so you don't pay to re-process them every time. Use it when you have a long, consistent context reused across many requests, such as a code repository or a detailed rulebook.

Are vector databases necessary for AI agents?

Not always. While powerful, vector databases are expensive to host and manage. For many use cases, a hybrid approach using efficient file storage with metadata search, like Fast.io's Intelligence Mode, is more cost-effective. It allows for semantic search without the overhead of a dedicated vector DB.


Run AI agent workflows on Fast.io

Get 50GB of free, high-performance storage for your AI agents with Fast.io. Built-in RAG, 251+ MCP tools, and no credit card required.