Top Tools for RAG Evaluation: Measuring Agent Performance

Retrieval-Augmented Generation (RAG) systems can fail silently, serving confident but incorrect answers. To build reliable agents, you need reliable evaluation pipelines. This guide compares the top tools for RAG evaluation, including Ragas, TruLens, and DeepEval, to help you measure context precision, faithfulness, and answer relevance.

Why RAG Evaluation is Non-Negotiable

Building a RAG pipeline is easy; proving it works is hard. Without rigorous evaluation, you cannot distinguish between a model that knows the answer and one that is hallucinating confidently.

According to CSO Online, RAG-related issues are a recurring cause of enterprise AI failures. Top models such as Gemini Flash have pushed hallucination rates down on general tasks, but error rates still climb in specialized domains and on complex reasoning tasks.

Evaluation tools bridge this gap by providing quantitative metrics. They answer two critical questions:

- Retrieval Quality: Did we find the right documents?

- Generation Quality: Did the LLM use those documents to answer correctly?

Implementing these tools early in your development cycle prevents "silent failures" where agents provide plausible but factually incorrect information to users.

Core Metrics: What to Measure

Before choosing a tool, you must understand the "RAG Triad" of metrics. Most evaluation frameworks focus on these three pillars to assess agent performance.

1. Context Precision & Recall

- Context Precision: Measures the signal-to-noise ratio in your retrieved chunks. If you retrieve multiple documents but only the 7th one contains the answer, your precision is low.

- Context Recall: Measures if the retrieved chunks contain all the necessary information to answer the user's query.
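
As a rough illustration of the two retrieval metrics above, the sketch below computes a rank-weighted context precision and a simple context recall from hand-labeled relevance flags. The function names and the exact formulas are assumptions made for this example; evaluation frameworks usually derive the relevance labels with an LLM judge and may define the scores differently.

```python
from typing import List, Set


def context_precision(relevance: List[bool]) -> float:
    """Mean of precision@k taken at each rank k that holds a relevant chunk.

    Relevant chunks ranked near the top push the score toward 1.0.
    """
    hits = 0
    precisions = []
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0


def context_recall(needed_facts: Set[str], retrieved_facts: Set[str]) -> float:
    """Fraction of the facts needed for the answer that appear in retrieval."""
    if not needed_facts:
        return 1.0
    return len(needed_facts & retrieved_facts) / len(needed_facts)


# Ten chunks retrieved, only the 7th is relevant: low precision.
late_hit = [False] * 6 + [True] + [False] * 3
print(round(context_precision(late_hit), 3))       # 0.143

# Same single relevant chunk ranked first: perfect precision.
print(context_precision([True] + [False] * 9))     # 1.0

# Two of the three required facts were retrieved.
print(round(context_recall({"a", "b", "c"}, {"a", "b", "x"}), 3))  # 0.667
```

Weighting by rank is one design choice among several; a plain "relevant chunks divided by retrieved chunks" ratio would ignore ordering entirely.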

2. Faithfulness (Groundedness)

This metric assesses whether the generated answer is derived solely from the retrieved context. A faithful answer does not hallucinate information from the model's pre-training data that isn't present in the source documents.
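
One common way to turn this idea into a number is to split the answer into individual claims and report the share that the context supports. The sketch below assumes the claims are already extracted and uses a deliberately naive word-overlap check (`naive_support`, a hypothetical helper) where a real pipeline would typically call a judge model.

```python
import re
from typing import Callable, List


def _words(text: str) -> set:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def naive_support(claim: str, context: str) -> bool:
    """Toy stand-in for a judge: every word of the claim occurs in the context."""
    return _words(claim) <= _words(context)


def faithfulness(claims: List[str], context: str,
                 is_supported: Callable[[str, str], bool] = naive_support) -> float:
    """Share of answer claims that the retrieved context supports."""
    if not claims:
        return 0.0
    supported = sum(1 for claim in claims if is_supported(claim, context))
    return supported / len(claims)


context = "The warranty lasts two years. Returns are accepted within 30 days."
claims = [
    "The warranty lasts two years",   # grounded in the context
    "Shipping is free worldwide",     # not present in the context
]
print(faithfulness(claims, context))  # 0.5
```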

3. Answer Relevance

Even if an answer is faithful, it must be relevant. This metric scores how well the generated response addresses the user's original intent. An answer can be factually true (faithful) but completely unhelpful (irrelevant).
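
To make the faithful-but-irrelevant case concrete, here is a toy relevance score based on bag-of-words cosine similarity between the question and the answer. This is only a stand-in chosen so the example runs without any model; production tools generally rely on embeddings or a judge model instead, and `cosine_relevance` is a hypothetical name.

```python
import math
import re
from collections import Counter


def _bag(text: str) -> Counter:
    """Word counts over lowercased alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_relevance(question: str, answer: str) -> float:
    """Cosine similarity between the word-count vectors of question and answer."""
    q, a = _bag(question), _bag(answer)
    dot = sum(q[w] * a[w] for w in q)
    norm = (math.sqrt(sum(v * v for v in q.values()))
            * math.sqrt(sum(v * v for v in a.values())))
    return dot / norm if norm else 0.0


question = "How long is the warranty?"
on_topic = "The warranty is two years long."
off_topic = "Returns are accepted within 30 days."  # true, but unhelpful here

print(cosine_relevance(question, on_topic) > cosine_relevance(question, off_topic))  # True
```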

1. Ragas (Retrieval-Augmented Generation Assessment)

Ragas is a widely used open-source framework for programmatic RAG evaluation. It relies on the "LLM-as-a-judge" approach, where a strong model (such as a GPT or Claude Sonnet model) evaluates the outputs of your RAG pipeline.

- Best For: Developers who need a standardized set of metrics (Precision, Recall, Faithfulness, Answer Relevance) to run in CI/CD pipelines.

- Key Features: Deeply integrated with LangChain and LlamaIndex; generates synthetic test data from your own documents to bootstrap evaluation datasets.

- Limitations: Requires an API key for the "judge" LLM, which can incur costs during large-scale testing.
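
The judge pattern itself is simple enough to sketch without any framework. The example below is not Ragas code: the prompt wording, the 0 to 10 scale, and the `call_llm` parameter are all assumptions, and `fake_judge` is a hypothetical stub so the sketch runs without an API key.

```python
import re
from typing import Callable

JUDGE_PROMPT = """You are grading a RAG answer.
Question: {question}
Context: {context}
Answer: {answer}
Rate how faithful the answer is to the context from 0 to 10.
Reply in the form: SCORE: <number>"""


def judge_faithfulness(question: str, context: str, answer: str,
                       call_llm: Callable[[str], str]) -> float:
    """Ask a judge model for a 0-10 rating and normalize it to 0.0-1.0."""
    prompt = JUDGE_PROMPT.format(question=question, context=context, answer=answer)
    reply = call_llm(prompt)
    match = re.search(r"SCORE:\s*(\d+(?:\.\d+)?)", reply)
    if match is None:
        raise ValueError(f"Judge reply had no parsable score: {reply!r}")
    # Clamp in case the judge answers outside the requested range.
    return min(max(float(match.group(1)) / 10.0, 0.0), 1.0)


def fake_judge(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return "SCORE: 8"


score = judge_faithfulness(
    question="How long is the warranty?",
    context="The warranty lasts two years.",
    answer="Two years.",
    call_llm=fake_judge,
)
print(score)  # 0.8
```

Swapping `fake_judge` for a function that calls your provider's API is where the per-evaluation cost mentioned above comes from.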

2. DeepEval

DeepEval positions itself as the "unit testing" framework for LLMs. If you come from a traditional software engineering background, DeepEval's developer experience will feel familiar. It treats RAG evaluation like regression testing.

- Best For: Teams adding RAG evaluation directly into their CI/CD workflows (GitHub Actions, GitLab CI).

- Key Features: Offers specialized metrics for hallucination, toxicity, and bias; highly modular design allows you to write custom test cases easily.

- Limitations: Setup can be more involved than simple scripts, requiring a testing mindset to use effectively.
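
The regression-testing idea can be sketched in plain Python. This is not DeepEval's API: the threshold values, metric names, and `failing_metrics` helper are assumptions chosen to show how an evaluation score can gate a build in the same way a unit test does.

```python
from typing import Dict, List

# Assumed minimum acceptable scores; tune these for your own application.
THRESHOLDS = {"faithfulness": 0.8, "answer_relevance": 0.7}


def failing_metrics(scores: Dict[str, float],
                    thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return the names of metrics whose score falls below its threshold.

    A metric missing from `scores` is treated as 0.0 and therefore fails.
    """
    return sorted(
        name for name, minimum in thresholds.items()
        if scores.get(name, 0.0) < minimum
    )


def test_rag_regression():
    """Pytest-style test: fails the build if any metric regresses."""
    # In a real suite these scores would come from your evaluation run.
    scores = {"faithfulness": 0.91, "answer_relevance": 0.84}
    assert failing_metrics(scores) == []


test_rag_regression()
print(failing_metrics({"faithfulness": 0.65, "answer_relevance": 0.9}))
# ['faithfulness']
```

Run under a test runner in CI, a failing assertion blocks the merge, which is the behavior that makes evaluation feel like regression testing.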

3. TruLens

TruLens focuses on the "RAG Triad" feedback loop. It is an open-source library that instruments your app to log inputs, outputs, and intermediate steps, then runs "Feedback Functions" to score them.

- Best For: Visualizing the chain of thought and identifying exactly where a pipeline failed (retrieval vs. generation).

- Key Features: The "TruLens Dashboard" provides a visual leaderboard of your experiments, making it easy to compare different chunking strategies or embedding models.

- Limitations: The dashboard is useful for local development but may require additional setup for shared team visibility.
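
The log-then-score loop described above can be illustrated with a small, library-free sketch. `Record`, `Recorder`, and both feedback functions are hypothetical names, not TruLens classes, and the scoring rules are toy checks where a real setup would usually call a judge model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Record:
    query: str
    contexts: List[str]
    answer: str
    scores: Dict[str, float] = field(default_factory=dict)


class Recorder:
    """Logs each pipeline call, then scores it with feedback functions."""

    def __init__(self, feedbacks: Dict[str, Callable[[Record], float]]):
        self.feedbacks = feedbacks
        self.records: List[Record] = []

    def run(self, retrieve, generate, query: str) -> Record:
        contexts = retrieve(query)            # intermediate step
        answer = generate(query, contexts)    # final output
        record = Record(query, contexts, answer)
        for name, fn in self.feedbacks.items():
            record.scores[name] = fn(record)
        self.records.append(record)
        return record


def has_context(record: Record) -> float:
    """Toy retrieval check: did the retriever return anything at all?"""
    return 1.0 if record.contexts else 0.0


def answer_in_context(record: Record) -> float:
    """Toy groundedness check: does the answer appear verbatim in a chunk?"""
    return 1.0 if any(record.answer in c for c in record.contexts) else 0.0


recorder = Recorder({"retrieval": has_context, "groundedness": answer_in_context})
rec = recorder.run(
    retrieve=lambda q: ["The warranty lasts two years."],
    generate=lambda q, ctx: "five years",   # deliberately ungrounded answer
    query="How long is the warranty?",
)
print(rec.scores)  # {'retrieval': 1.0, 'groundedness': 0.0}
```

Because retrieval and generation are scored separately on the same record, the output shows that the failure sits in the generation step rather than in retrieval.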

4. Arize Phoenix

Arize Phoenix is an observability platform built on OpenTelemetry. While it handles evaluation, its strength is tracing and troubleshooting. It allows you to visualize your embedding clusters to see where your retrieval might be failing for specific topics.

- Best For: Production monitoring and deep-dive troubleshooting of complex retrieval issues.

- Key Features: 3D visualization of embedding spaces; trace-level inspection of every step in the RAG chain; strong integration with LlamaIndex.

- Limitations: Can be overkill for simple RAG apps; steeper learning curve than pure metric libraries.

5. LangSmith

LangSmith, from the creators of LangChain, is a complete platform for building, debugging, and testing LLM apps. It offers an all-in-one solution for tracing execution and running evaluations.

- Best For: Teams already heavily invested in the LangChain ecosystem.

- Key Features: "Playgrounds" for rapid prompt iteration; collaborative datasets for team-based evaluation; one-click "Add to Dataset" for interesting production traces.

- Limitations: It is a managed platform (SaaS), which may raise data privacy concerns for some on-premise requirements, though self-hosted options exist for enterprise.

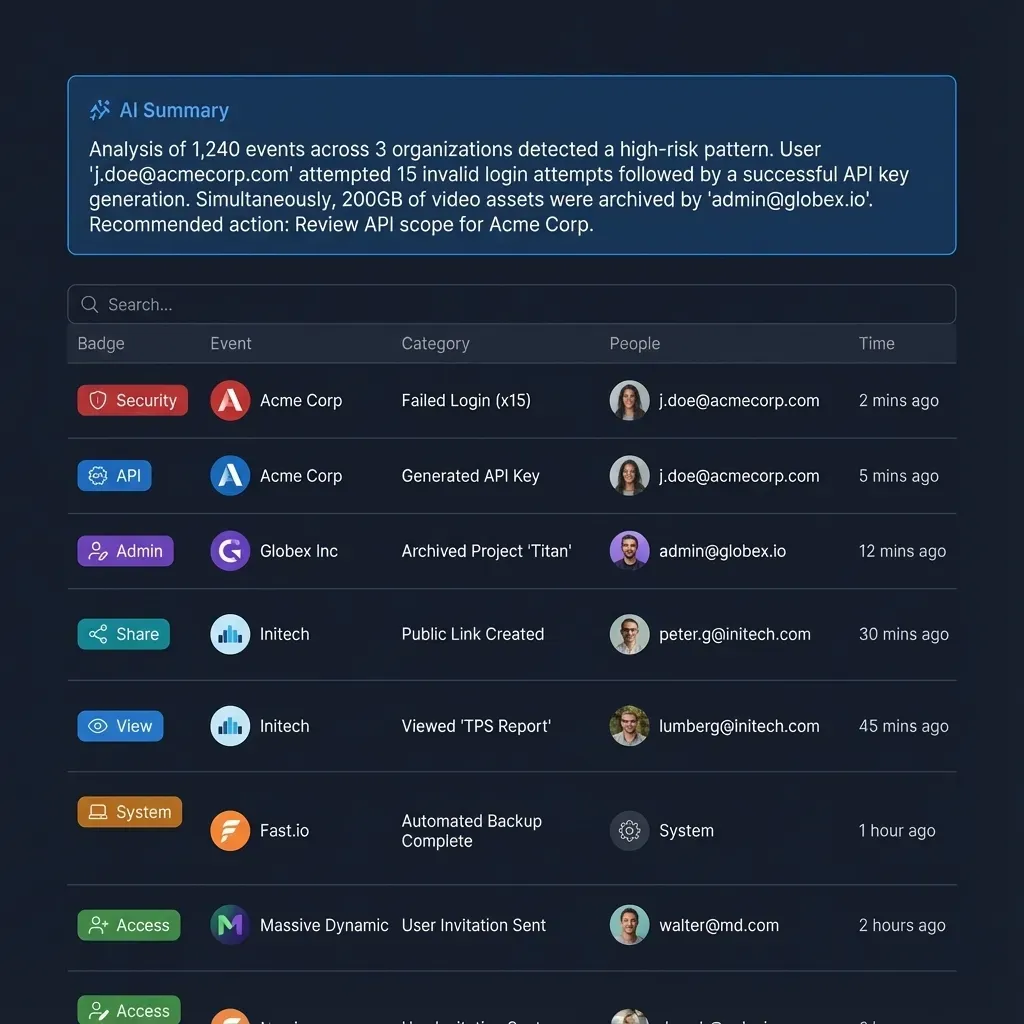

6. Fast.io: The Self-Verifying Workspace

Tools like Ragas and TruLens measure pipeline performance during development.

Fast.io solves the verification problem in production.

Fast.io is an intelligent workspace with Intelligence Mode, a built-in RAG engine that automatically indexes every file you upload. Instead of building and evaluating your own retrieval pipeline, Fast.io provides a pre-optimized, citation-based environment.

- Best For: Teams who need reliable, verifiable answers from their data without maintaining complex RAG infrastructure.

- Key Features:

  - Built-in Citations: Every answer includes clickable citations linking directly to the source file and page. This allows humans to instantly verify "Faithfulness" without an algorithm.

  - Audit Trails: See exactly which files were accessed and used to generate an answer.

  - Zero-Config RAG: No vector database to manage; upload files and toggle Intelligence Mode.

- Verification: Unlike "black box" RAG systems, Fast.io's interface is designed for human-in-the-loop verification, making it the ideal production environment for agentic workflows.

Comparison Summary

Here is how the top tools stack up for different needs:

| Tool | Best For | Key Strength | Type |
|---|---|---|---|
| Ragas | Metrics Calculation | Standardized scores (Precision/Recall) | Library |
| DeepEval | CI/CD Testing | Unit-test developer experience | Library |
| TruLens | Experiment Tracking | Visual dashboard for experiments | Library/Dashboard |
| Arize Phoenix | Troubleshooting | 3D embedding visualization | Platform |
| LangSmith | Full Observability | End-to-end tracing & debugging | SaaS Platform |
| Fast.io | Production Verification | Built-in citations & audit logs | Workspace |

Pro Tip: Use libraries like Ragas or DeepEval during development to optimize your chunking and prompting strategies. Then, use platforms like Fast.io for production to ensure end-users always have verifiable sources for every AI-generated answer.

Frequently Asked Questions

What is the most important RAG metric?

For most applications, **Answer Relevance** and **Faithfulness** are the most critical. Relevance ensures the user gets a helpful answer, while Faithfulness ensures the answer is not a hallucination. High retrieval precision is important but is a means to an end.

Can I evaluate RAG systems without ground truth data?

Yes, using the 'RAG Triad' approach (Context Relevance, Groundedness, Answer Relevance). Tools like Ragas and TruLens use an LLM-as-a-judge to evaluate these metrics based on the query, context, and response, even without a human-labeled 'gold standard' answer.

How does Fast.io handle RAG evaluation?

Fast.io focuses on *verification* rather than abstract evaluation scores. By providing clickable citations for every claim and maintaining a complete audit log of accessed files, Fast.io allows users to verify accuracy in real-time, eliminating the trust gap common in black-box RAG systems.

What is 'LLM-as-a-judge'?

LLM-as-a-judge is a technique where a powerful LLM (such as a GPT model) is used to evaluate the output of your application. The judge LLM is given the query, context, and generated answer, and asked to score aspects like accuracy or tone based on specific criteria.

Do I need a vector database to evaluate RAG?

You typically need a vector database (like Pinecone or Weaviate) to *run* a RAG system, which you then evaluate. Solutions like Fast.io include built-in vector indexing (Intelligence Mode), removing the need to manage and evaluate a separate vector database component.

Stop Guessing, Start Verifying

Get verified, cited answers from your data instantly. Fast.io's Intelligence Mode indexes your workspace for reliable RAG without the engineering headache. Built for RAG evaluation workflows.