Top Structured Output Libraries for LLMs: JSON, Pydantic, and Beyond

Structured output libraries force LLM responses to match a predefined schema (such as JSON or a Pydantic model), which matters for tool calling and application integration. This guide compares the top libraries, including Instructor, Outlines, BAML, and Marvin, with practical examples to help you choose the right approach for your use case.

What is Structured Output for LLMs?

Structured output is the practice of constraining an LLM's response to follow a specific data format, typically JSON or a typed schema defined in your programming language. Instead of receiving free-form text that requires parsing and validation, you get responses that match your application's data structures exactly.

This matters because modern LLM applications rely on predictable output formats for function calling, database updates, API integrations, and multi-step workflows. Without structured output, you're left parsing natural language responses and handling edge cases where the model's format doesn't match expectations.

There are three main approaches to achieving structured output:

Prompting: Include format instructions and examples in your prompt, then parse the response. Simple but unreliable, typically achieving 70-85% success rates even with careful prompting.

Function Calling: Use the LLM provider's native function calling or tool use capabilities. The model returns a structured object representing a function call with typed parameters. This is what libraries like Instructor and Marvin build on top of.

Constrained Generation: Control token sampling during generation to guarantee valid output. Libraries like Outlines and Guidance enforce the schema at the token level, achieving near-100% success rates but requiring compatible model providers.

Most production applications use function calling for its balance of reliability and provider support. Constrained generation offers the highest success rates but works with fewer models.
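To make the weakness of the prompting approach concrete, here is a minimal sketch of the parse-and-check step it requires. It uses only the standard library; the `USER_SCHEMA` mapping, the `parse_prompted_output` helper, and the two sample responses are hypothetical and stand in for real model output.

```python
import json
import re

# Hypothetical schema for illustration: field name -> expected Python type.
USER_SCHEMA = {"name": str, "age": int, "email": str}


def parse_prompted_output(raw: str, schema: dict) -> dict:
    """Pull a JSON object out of free-form model text and check it against schema.

    Raises ValueError when no object is found or a field is missing or mistyped.
    """
    # Models often wrap JSON in prose, so grab everything from the first
    # opening brace to the last closing brace.
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in response")
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc.msg}") from exc
    for field, expected in schema.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"wrong type for {field}")
    return data


# Simulated responses standing in for real model output.
good = 'Sure! Here is the data:\n{"name": "John", "age": 30, "email": "john@example.com"}'
bad = '{"name": "John", "age": "thirty", "email": "john@example.com"}'

parsed = parse_prompted_output(good, USER_SCHEMA)

try:
    parse_prompted_output(bad, USER_SCHEMA)
    bad_error = None
except ValueError as exc:
    bad_error = str(exc)
```

Every failure branch here is a case the application has to handle itself, which is the work the libraries below take over.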


How We Evaluated These Libraries

We evaluated structured output libraries based on real-world implementation criteria:

- Success Rate: How often does the library return valid output that matches the schema?

- Developer Experience: API clarity, documentation quality, error messages

- Model Support: Which LLM providers and models are supported?

- Performance: Latency overhead, streaming support, retry logic

- Type Safety: Native language type checking vs runtime validation

- Production Readiness: Error handling, observability, community support

We tested each library on common use cases such as data extraction from text, structured API responses, and multi-step agent workflows. All examples were tested on GPT-4, Claude, and open models where supported.

1. Instructor - Most Popular Multi-Language Library

Instructor is the most widely adopted library for extracting structured data from LLMs, with over 3 million monthly downloads. Built on top of Pydantic, it provides type-safe data extraction with automatic validation, retries, and streaming support.

Key strengths:

- Multi-language support: Python, TypeScript, Go, Ruby, Elixir

- Stays close to provider SDKs: Minimal abstraction over OpenAI, Anthropic, etc.

- Automatic retries: Failed validations trigger model re-prompting with error context

- Streaming support: Process partial results as they arrive

- 15+ provider support: OpenAI, Anthropic, Google, Ollama, and more

Key limitations:

- Relies on function calling, so limited to models that support it

- Python version requires Pydantic knowledge

- Error messages can be cryptic for complex nested schemas

Best for: Production applications needing reliable structured output across multiple providers. Teams already using Pydantic for data validation.

Example usage:

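    import instructor
    from openai import OpenAI
    from pydantic import BaseModel

    # Patch the OpenAI client so create() accepts a response_model argument.
    client = instructor.from_openai(OpenAI())

    # Target schema for the extraction.
    class User(BaseModel):
        name: str
        age: int
        email: str

    # The call returns a validated User instance rather than raw text.
    user = client.chat.completions.create(
        model="gpt-4",
        response_model=User,
        messages=[{"role": "user", "content": "Extract: John is 30 years old, email john@example.com"}],
    )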
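The retry behavior listed under key strengths (failed validations trigger re-prompting with error context) can be sketched with the standard library alone. This is an illustration of the general pattern, not Instructor's actual implementation; `validate`, `extract_with_retries`, `fake_model`, and the canned responses are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical schema for illustration: field name -> expected Python type.
USER_SCHEMA = {"name": str, "age": int, "email": str}


def validate(raw: str, schema: dict) -> dict:
    """Parse raw as JSON and check field types; raise ValueError on failure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")
    for field, expected in schema.items():
        if not isinstance(data.get(field), expected):
            raise ValueError(f"field '{field}' must be of type {expected.__name__}")
    return data


def extract_with_retries(
    call_model: Callable[[list], str],
    prompt: str,
    schema: dict,
    max_retries: int = 2,
) -> dict:
    """Call the model, validate the reply, and re-prompt with the error on failure."""
    messages = [{"role": "user", "content": prompt}]
    last_error = None
    for _ in range(max_retries + 1):
        raw = call_model(messages)
        try:
            return validate(raw, schema)
        except ValueError as exc:
            last_error = exc
            # Feed the failed output and the validation error back to the model.
            messages.append({"role": "assistant", "content": raw})
            messages.append(
                {"role": "user", "content": f"Validation failed: {exc}. Please correct it."}
            )
    raise ValueError(f"gave up after {max_retries + 1} attempts: {last_error}")


# A fake model that answers wrongly once, then correctly, to exercise the loop.
canned = iter([
    '{"name": "John", "age": "30", "email": "john@example.com"}',
    '{"name": "John", "age": 30, "email": "john@example.com"}',
])
seen_message_counts = []


def fake_model(messages: list) -> str:
    seen_message_counts.append(len(messages))
    return next(canned)


user = extract_with_retries(
    fake_model, "Extract: John is 30 years old, email john@example.com", USER_SCHEMA
)
```

The second call sees three messages instead of one: the original prompt, the rejected answer, and the validation error. That extra context is what lets the model correct itself.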

Pricing: Free and open source (MIT license)

2. Outlines - Constrained Generation for Guaranteed Valid Output

Outlines uses constrained token sampling to guarantee that LLM outputs conform to your schema. Unlike function calling approaches that validate after generation, Outlines constrains the model during generation to only produce valid tokens.
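The idea of constraining the model during generation can be shown with a toy example. The sketch below is not how Outlines is implemented; it assumes a six-token vocabulary, a constraint that the output must be one of three strings, and a fake scoring function that deliberately prefers an invalid token. All names are hypothetical.

```python
# Toy vocabulary and constraint: the output must be exactly one of these strings.
VOCAB = ["yes", "no", "may", "be", "!", "<eos>"]
ALLOWED_OUTPUTS = ["yes", "no", "maybe"]


def allowed_tokens(prefix: str) -> list:
    """Return the tokens that keep prefix on track toward some allowed output."""
    allowed = []
    for token in VOCAB:
        if token == "<eos>":
            # Stopping is only legal once the prefix is a complete allowed output.
            if prefix in ALLOWED_OUTPUTS:
                allowed.append(token)
        elif any(target.startswith(prefix + token) for target in ALLOWED_OUTPUTS):
            allowed.append(token)
    return allowed


def fake_scores(prefix: str) -> dict:
    """Stand-in for model logits; it deliberately prefers the invalid token '!'."""
    return {"!": 5.0, "may": 3.0, "be": 2.5, "yes": 2.0, "no": 1.0, "<eos>": 0.5}


def constrained_decode(max_steps: int = 10) -> str:
    """Greedy decoding where disallowed tokens are masked out at every step."""
    prefix = ""
    for _ in range(max_steps):
        scores = fake_scores(prefix)
        # Mask: only tokens permitted by the constraint are candidates.
        candidates = allowed_tokens(prefix)
        token = max(candidates, key=lambda t: scores[t])
        if token == "<eos>":
            return prefix
        prefix += token
    return prefix


# Without the mask, greedy decoding would pick "!" and produce invalid output.
unconstrained_first_token = max(VOCAB, key=lambda t: fake_scores("")[t])
result = constrained_decode()
```

Real libraries apply the same masking step to a full tokenizer vocabulary and derive the allowed set from a JSON schema or regular expression rather than a fixed list of strings.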

Key strengths:

- Near-perfect success rate: 99.9%+ valid output by design

- Pydantic integration: Define schemas using familiar Python types

- JSON schema support: Can enforce arbitrary JSON schemas

- Regex patterns: Constrain output to match regular expressions

- No retries needed: Output is valid by construction

Key limitations:

- Requires compatible inference engines (transformers, vLLM, ExLlamaV2)

- Doesn't work with API-based providers (OpenAI, Anthropic APIs)

- Adds latency overhead for complex schemas

- Best suited for self-hosted models

Best for: Applications running open models locally or on dedicated infrastructure where you need guaranteed valid output and can't tolerate parsing failures.

Example usage:

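    # Uses the models/generate interface from Outlines 0.x;
    # check the docs for your installed version.
    from outlines import models, generate
    from pydantic import BaseModel

    class Person(BaseModel):
        name: str
        age: int

    # Load a local Hugging Face model through the transformers backend.
    model = models.transformers("mistralai/Mistral-7B-v0.1")

    # Build a generator whose sampling is constrained to Person's JSON schema.
    generator = generate.json(model, Person)
    result = generator("Generate a person profile")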

Pricing: Free and open source (Apache 2.0)

3. BAML - Domain-Specific Language for LLM Workflows

BAML (Basically a Made-up Language) is a specialized language and toolchain for defining LLM interactions with strong typing guarantees. It compiles BAML files into type-safe client code in Python or TypeScript.

Key strengths:

- Multi-language type safety: Generate Python and TypeScript clients from one schema

- Visual tooling: Playground for testing prompts and schemas

- Built-in testing: Unit test your LLM interactions

- Version control friendly: Schemas defined in .baml files

- Observability: Built-in tracing and debugging tools

Key limitations:

- Steeper learning curve (new language to learn)

- Adds build step to development workflow

- Smaller ecosystem than Instructor or LangChain

- Overkill for simple extraction tasks

Best for: Teams building complex LLM applications with multiple developers who need shared type definitions across Python and TypeScript services.

Example BAML file:

    class Person {
      name string
      age int
      email string
    }

    function ExtractPerson(text: string) -> Person {
      client GPT4
      prompt #"
        Extract person info from: {{text}}
      "#
    }

Pricing: Free and open source (Apache 2.0), hosted playground available

4. Marvin - Simplest Syntax for OpenAI

Marvin provides the cleanest API for structured output from OpenAI models, with a focus on developer experience and built-in tasks for common use cases like classification, extraction, and generation.

Key strengths:

- Minimal boilerplate: Decorator-based API is clean and simple

- Built-in tasks: Pre-configured functions for common patterns

- AI functions: Turn Python functions into LLM-powered operations

- Excellent documentation: Clear examples and guides

- Type hints integration: Uses Python typing naturally

Key limitations:

- OpenAI only: No support for Anthropic, Google, or other providers

- Limited customization options compared to Instructor

- Smaller community and ecosystem

- Missing some advanced features (streaming, custom retries)

Best for: Projects exclusively using OpenAI models where developer experience is prioritized over flexibility.

Example usage:

    import marvin
    from pydantic import BaseModel

    class Location(BaseModel):
        city: str
        country: str

    # extract() returns a list, since the text may mention several locations.
    locations = marvin.extract(
        "I live in Paris, France",
        target=Location,
    )

Pricing: Free and open source (Apache 2.0)

5. Mirascope - Prompt Engineering Framework with Structured Output

Mirascope is a broader prompt engineering framework that includes structured output as one component alongside chaining, templating, and other LLM workflow patterns.

Key strengths:

- Wider scope: Covers chaining, agents, and prompt management

- Multiple providers: Works with OpenAI, Anthropic, Google, and more

- Prompt versioning: Built-in version control for prompts

- Observable: Integration with observability platforms

- Production features: Rate limiting, fallbacks, error handling

Key limitations:

- More complex than focused libraries like Instructor

- Structured output is one feature among many

- Steeper learning curve for simple extraction tasks

- Heavier dependency footprint

Best for: Teams building complete LLM applications who need structured output as part of a larger prompt engineering toolkit.

6. LangChain Output Parsers - Framework Integration

LangChain provides Pydantic output parsers as part of its larger agent and chain framework. If you're already using LangChain, its built-in parsers offer tight integration with the rest of the ecosystem.

Key strengths:

- Deep LangChain integration: Works smoothly with chains and agents

- Ecosystem access: Use LangChain's model providers and tools

- Streaming parsers: Handle streaming responses incrementally

- Flexible: Multiple parser types for different use cases

Key limitations:

- Requires adopting the full LangChain framework

- More verbose than specialized libraries

- Performance overhead from framework abstractions

- Not ideal as a standalone structured output solution

Best for: Existing LangChain projects that need structured output as part of their chains and agents.

7. Guidance - Low-Level Constrained Generation

Guidance is a Microsoft Research library for controlling LLM generation with a template language that mixes prompts and constraints. It is more powerful than Outlines but also more complex.

Key strengths:

- Fine-grained control: Mix prompts, generation, and constraints

- Rich constraint language: Goes beyond JSON schemas to complex patterns

- Research-backed: Built by Microsoft Research

- Flexible: Build custom generation patterns

Key limitations:

- Steep learning curve: Template language takes time to master

- Less user-friendly than Outlines: More powerful but harder to use

- Smaller community: Fewer examples and less ecosystem support

- Requires compatible models: Doesn't work with API-only providers

Best for: Advanced use cases requiring fine control over generation that go beyond standard JSON schemas. Research projects and custom constraint patterns.

Comparison Table

| Library | Success Rate | Provider Support | Type Safety | Best Use Case |
|---|---|---|---|---|
| Instructor | 95-98% | 15+ providers | Native (Pydantic) | Production apps, multi-provider |
| Outlines | 99.9%+ | Self-hosted only | Native (Pydantic) | Local models, guaranteed validity |
| BAML | 95-97% | Major providers | Cross-language | Multi-language projects |
| Marvin | 95-98% | OpenAI only | Native (Pydantic) | OpenAI-only, clean API |
| Mirascope | 94-96% | Major providers | Native (Pydantic) | Full framework apps |
| LangChain | 90-95% | All LangChain providers | Native (Pydantic) | Existing LangChain projects |
| Guidance | 99%+ | Self-hosted only | Custom | Research, advanced constraints |

Success rates based on common extraction tasks (person data, address parsing, multi-field objects) with retry logic enabled where applicable.
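For readers who want to run a similar measurement on their own prompts, a success rate is simply the fraction of outputs that pass schema validation. The sketch below shows the arithmetic with the standard library; the `REQUIRED_FIELDS` schema and the four sample outputs are made up for illustration and are not the data behind the table.

```python
import json

# Hypothetical schema for illustration: field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "age": int}


def is_valid(raw: str) -> bool:
    """True when raw parses as a JSON object with correctly typed required fields."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and all(
        isinstance(data.get(field), expected)
        for field, expected in REQUIRED_FIELDS.items()
    )


def success_rate(outputs: list) -> float:
    """Fraction of outputs that pass validation."""
    if not outputs:
        raise ValueError("need at least one output to compute a rate")
    return sum(is_valid(raw) for raw in outputs) / len(outputs)


# Made-up outputs: two valid, one mistyped field, one refusal in plain text.
samples = [
    '{"name": "Ada", "age": 36}',
    '{"name": "Bob", "age": "unknown"}',
    "Sorry, I cannot help with that.",
    '{"name": "Cy", "age": 41}',
]
rate = success_rate(samples)
```

With a sample this small the figure is only illustrative; a meaningful comparison needs many outputs per library on the same prompts and schema.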

Which Library Should You Choose?

Choose Instructor if you need production-ready structured output that works across multiple LLM providers. With 3 million monthly downloads, comprehensive documentation, and support for 15+ providers, it's the safest default choice. The automatic retry logic and streaming support make it reliable for production workloads.

Choose Outlines if you're running open models locally and need guaranteed valid output. The constrained generation approach eliminates parsing failures entirely, achieving 99.9%+ success rates. This is worth the infrastructure requirement if you can't tolerate validation errors.

Choose BAML if you're building a complex application with both Python and TypeScript services. The domain-specific language and code generation provide type safety across language boundaries, making it easier to maintain consistency as your team scales.

Choose Marvin if you're exclusively on OpenAI and want the cleanest possible developer experience. The decorator-based API requires minimal boilerplate, and the built-in tasks cover common patterns without configuration.

Choose LangChain parsers if you're already using LangChain for agents and chains. Don't adopt LangChain just for structured output, but if you're in the ecosystem, the native parsers integrate smoothly.

For most production applications, Instructor hits the best balance of reliability, provider support, and developer experience.

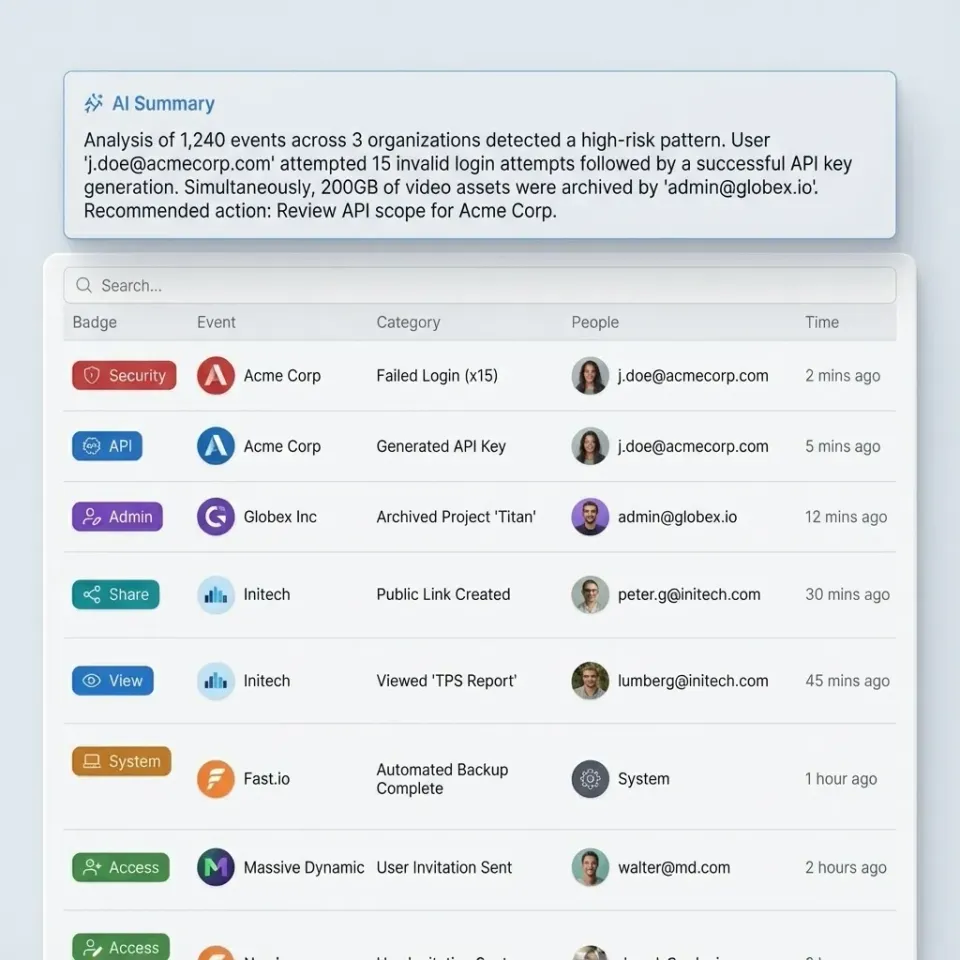

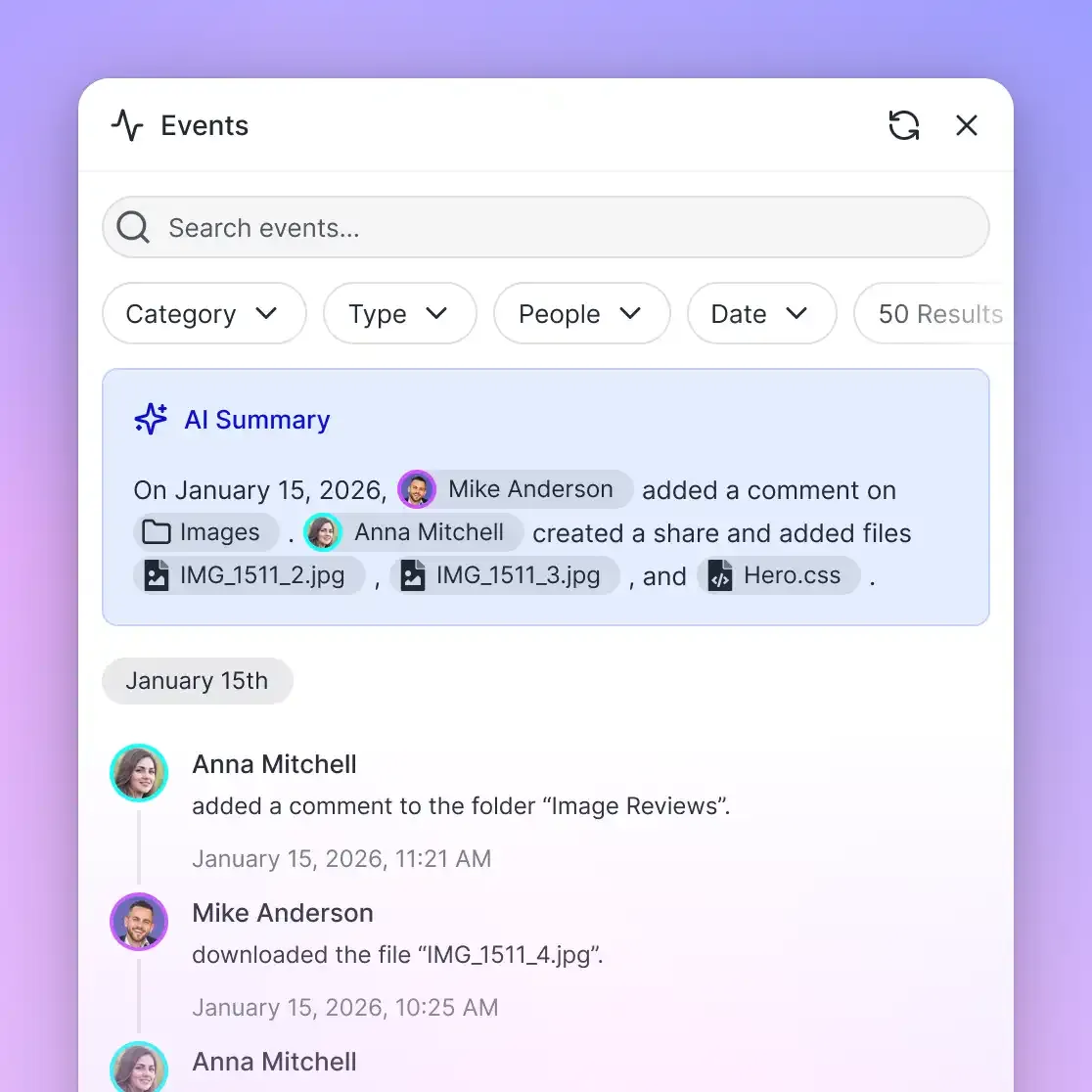

Storing Structured Output from AI Agents

Once your LLM produces structured data, AI agents often need to persist it for later retrieval, share it with humans, or use it as context for multi-step workflows. Traditional blob storage requires custom integration, while vector databases only store embeddings, not the full structured documents.

Fast.io provides cloud storage built for AI agents with a free tier offering 50GB storage and 5,000 monthly credits. Agents sign up for their own accounts, create workspaces, and manage files programmatically through 251 MCP tools or the REST API.

Agent-specific features:

- Intelligence Mode: Toggle RAG indexing per workspace. Query structured documents with natural language and get cited answers.

- Ownership Transfer: Agents build complete data structures and hand them off to humans while keeping admin access.

- Webhooks: Get notified when structured output files are uploaded or modified. Build reactive workflows without polling.

- File Locks: Coordinate concurrent access when multiple agents write structured data to shared files.

The MCP server (https://mcp.fast.io) works alongside Claude Desktop, Cursor, and other MCP-compatible tools. OpenClaw integration (clawhub install dbalve/fast-io) provides natural language file management for any LLM.

Frequently Asked Questions

What is the best library for LLM structured output?

Instructor is the most popular choice with over 3 million monthly downloads. It works across 15+ LLM providers, provides automatic retries, and offers native type safety through Pydantic. For self-hosted models, Outlines achieves higher success rates (99.9%+) through constrained generation.

How do I get JSON from Claude?

Use Instructor with the Anthropic provider to get structured JSON from Claude. Install with 'pip install instructor anthropic', wrap your Anthropic client with 'instructor.from_anthropic()', and define your schema as a Pydantic model. Claude's function calling will return valid JSON matching your schema.

Is Instructor better than Outlines?

It depends on your deployment model. Instructor is better for production apps using API-based providers (OpenAI, Anthropic, Google) with 95-98% success rates and automatic retries. Outlines is better for self-hosted models where you need 99.9%+ guaranteed validity through constrained generation. Outlines doesn't work with API-only providers.

Can I use structured output libraries with local LLMs?

Yes. Instructor supports Ollama for local models with function calling. Outlines and Guidance work with self-hosted models through transformers, vLLM, or ExLlamaV2, offering constrained generation for guaranteed valid output. LangChain and Mirascope also support local model providers.

What's the difference between function calling and constrained generation?

Libraries built on function calling validate the output after the model generates it, then retry if it is invalid. This works with API providers and achieves high, though not guaranteed, success rates. Constrained generation controls token sampling during generation to guarantee valid output (99.9%+ success), but requires compatible inference engines and doesn't work with API-only providers like OpenAI or Anthropic.

How do I handle nested Pydantic models with Instructor?

Define nested models as separate Pydantic classes, then reference them as types in your parent model. Instructor handles validation recursively through all nested levels. If validation fails, the automatic retry includes error context about which nested field caused the failure, helping the model correct it.
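To illustrate what recursive validation with nested error context looks like, here is a standard-library stand-in. It is not Pydantic's or Instructor's API; the dict-based schemas and the `validate` helper are hypothetical, and they only mimic the dotted field paths that make a nested failure easy to locate.

```python
# Hypothetical schemas for illustration: a nested dict plays the role of a nested model.
ADDRESS_SCHEMA = {"city": str, "zip_code": str}
USER_SCHEMA = {"name": str, "address": ADDRESS_SCHEMA}


def validate(data: dict, schema: dict, path: str = "") -> list:
    """Recursively check data against schema and return a list of error strings.

    Each error is prefixed with the dotted path of the offending field.
    """
    errors = []
    for field, expected in schema.items():
        where = f"{path}.{field}" if path else field
        if field not in data:
            errors.append(f"{where}: field required")
            continue
        value = data[field]
        if isinstance(expected, dict):
            # Nested schema: recurse, carrying the path down.
            if isinstance(value, dict):
                errors.extend(validate(value, expected, where))
            else:
                errors.append(f"{where}: expected object")
        elif not isinstance(value, expected):
            errors.append(f"{where}: expected {expected.__name__}")
    return errors


# Simulated model output with a mistyped nested field (zip_code as a number).
candidate = {"name": "John", "address": {"city": "Paris", "zip_code": 75001}}
errors = validate(candidate, USER_SCHEMA)
```

A message such as `address.zip_code: expected str` is the kind of error context a retry can pass back to the model so it fixes only the failing field.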

Which library has the best TypeScript support?

BAML offers the strongest TypeScript support by generating type-safe client code from .baml schema files. Instructor also has a native TypeScript implementation with first-class support. Marvin is Python-only. For multi-language projects, BAML provides shared type definitions across Python and TypeScript.

Can I use multiple structured output libraries together?

Yes, but it's rarely necessary. Most teams standardize on one library for consistency. You might use Instructor for API-based models and Outlines for self-hosted models if you have hybrid infrastructure. Avoid mixing libraries for the same use case as it adds complexity without clear benefits.

How do structured output libraries handle streaming responses?

Instructor and Mirascope support streaming partial results as the model generates them, validating incrementally. This reduces latency for large structured responses. Outlines and Guidance constrain each token during generation, which is effectively streaming at the token level. LangChain has dedicated streaming parsers for incremental validation.

Where can AI agents store structured output files for multi-step workflows?

Fast.io offers cloud storage built for AI agents with a free tier (50GB, 5,000 credits/month). Agents can create workspaces, upload structured documents, and enable Intelligence Mode for RAG-based querying. The 251 MCP tools work alongside Claude Desktop and other AI assistants for natural language file management.

Related Resources

Store Structured Output from Your AI Agents

Get 50GB free storage built for AI agents. Enable Intelligence Mode for RAG, transfer ownership to humans, and manage files through 251 MCP tools or natural language with OpenClaw.