Top Prompt Management Tools for Developers in 2026

A guide to prompt management tools for developers. Managing prompts in production requires more than a spreadsheet. Explore the top tools for versioning, testing, and deploying prompts like code.

Why Developers Need Prompt Management Tools

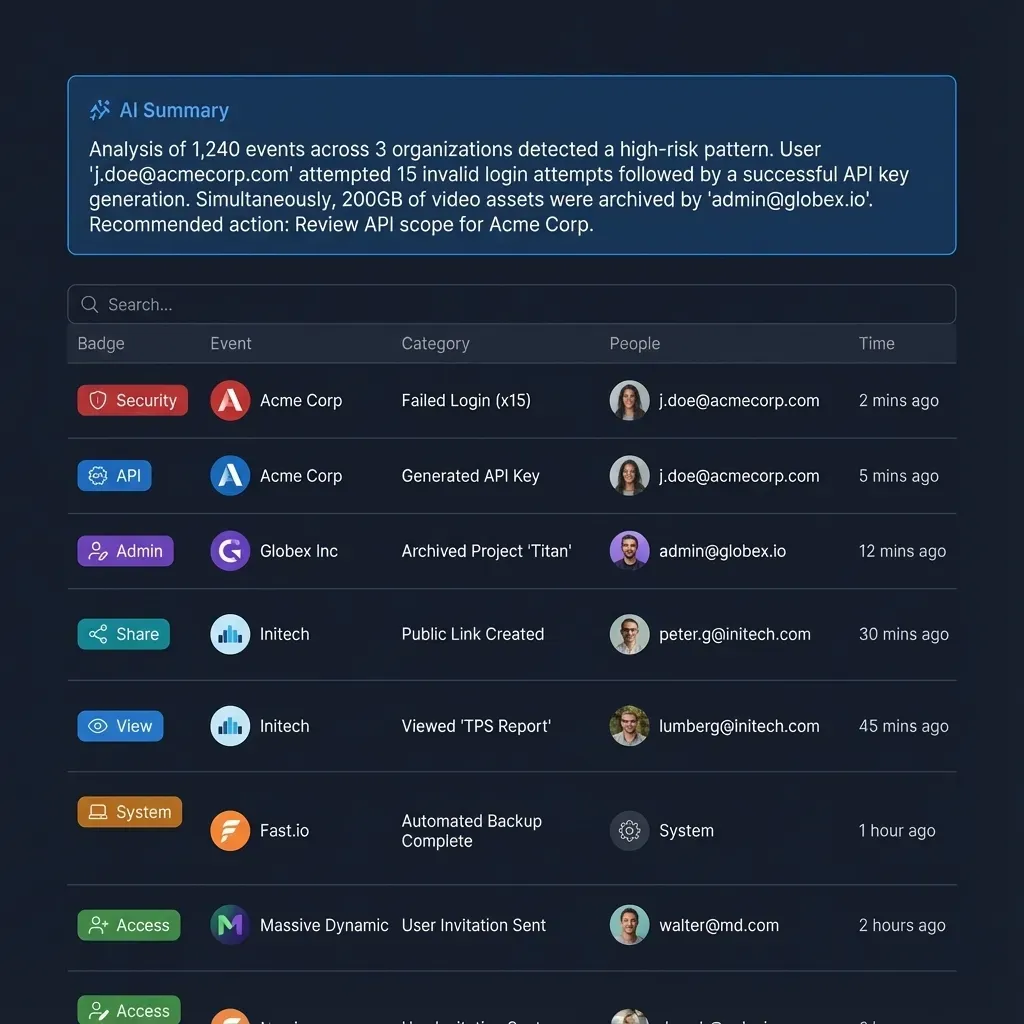

As AI engineering grows, the "copy-paste from ChatGPT" workflow is no longer sufficient for production applications. Engineering teams need to treat prompts with the same rigor as software code: versioning them, testing them against datasets, and monitoring their performance in the wild. Prompt management tools solve the chaos of "final_final_v3.txt" by providing a centralized registry for all your AI interactions. These platforms allow developers to track changes, collaborate with non-technical stakeholders, and evaluate model outputs systematically. According to Latitude, implementing prompt version control can substantially cut the time spent on debugging and prompt management. In this guide, we evaluate the top prompt management tools available in 2026, focusing on their developer experience, versioning capabilities, and ease of integration.
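At its core, a "centralized registry" maps a prompt name to an ordered history of versions, so code can request either the latest version or a pinned one. The sketch below is a minimal, in-memory illustration under that assumption; `PromptRegistry`, `publish`, and `render` are hypothetical names, not the API of any tool in this guide.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    created_at: datetime


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: prompt name -> ordered list of versions."""

    _prompts: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> PromptVersion:
        # Each publish appends a new immutable version; old versions are never edited.
        versions = self._prompts.setdefault(name, [])
        pv = PromptVersion(len(versions) + 1, template, datetime.now(timezone.utc))
        versions.append(pv)
        return pv

    def get(self, name: str, version: int | None = None) -> PromptVersion:
        versions = self._prompts[name]
        if version is None:
            return versions[-1]  # latest version
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]

    def render(self, name: str, version: int | None = None, **variables: str) -> str:
        # Fill the template's {placeholders} with the supplied variables.
        return self.get(name, version).template.format(**variables)
```

Pinning a version in production while experimenting with newer ones is the main reason to keep every version immutable rather than overwriting the template in place.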


1. PromptLayer: The Visual CMS for Prompts

PromptLayer was one of the first platforms to popularize the concept of a "CMS for prompts." It acts as a middleware between your code and the LLM API, capturing every request and response to build a complete history of your AI interactions.

Its visual interface allows product managers and domain experts to edit prompts without touching the codebase. Developers can then pull the latest version of a prompt via the SDK, decoupling prompt engineering from application deployment.
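To make the middleware idea concrete, here is a minimal sketch of a wrapper that records every request/response pair together with the prompt name and version that produced it. `model_fn` is a hypothetical stand-in for a real LLM client call, and `LoggingMiddleware` is not PromptLayer's SDK; it only illustrates the capture pattern such tools build on.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RequestLog:
    prompt_name: str
    prompt_version: int
    request: str
    response: str
    latency_s: float
    tags: list[str] = field(default_factory=list)


class LoggingMiddleware:
    """Wraps a model-calling function and records every request/response pair.

    `model_fn` is a hypothetical stand-in for a real LLM client call.
    """

    def __init__(self, model_fn: Callable[[str], str]) -> None:
        self._model_fn = model_fn
        self.history: list[RequestLog] = []

    def complete(self, prompt_name: str, prompt_version: int, prompt: str,
                 tags: list[str] | None = None) -> str:
        start = time.perf_counter()
        response = self._model_fn(prompt)
        latency = time.perf_counter() - start
        # Tagging each record with the prompt version links outputs back to edits.
        self.history.append(RequestLog(prompt_name, prompt_version, prompt,
                                       response, latency, list(tags or [])))
        return response
```

Because the wrapper sits in the request path, it is also where the "proxy dependency" limitation comes from: if the logging layer fails, the call it wraps can fail with it.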

Key Strengths:

- Visual Registry: Excellent UI for non-technical team members to iterate on prompts.

- Middleware SDK: A "drop-in" replacement for OpenAI and Anthropic clients.

- Rich Analytics: Detailed cost tracking and latency monitoring per tag.

Limitations:

- Proxy Dependency: Relies on their proxy or SDK wrapper, which can introduce an additional point of failure.

- Cost Scaling: Can become expensive for high-volume applications on team plans.

Best For: Teams that want non-technical members to manage prompts.

Pricing: Free tier (limited monthly requests), Pro and Team plans (paid plans from $49/mo).

2. Helicone: Open-Source Observability Powerhouse

Helicone started as a lightweight proxy for OpenAI caching but has grown into a complete observability and prompt management platform. It is fully open-source, meaning you can self-host it to keep your data entirely within your infrastructure, a key requirement for many enterprise teams. Helicone excels at visibility. It provides detailed insights into exactly what your users are sending to your models and how the models are responding. Its "Prompt ID" system allows you to track versions and A/B test different prompts in production.
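A/B testing prompts in production needs each user to see the same variant on every request. One common way to do this is deterministic hash bucketing, sketched below; this is a generic technique, not Helicone's implementation, and `assign_variant` and its parameters are hypothetical.

```python
from __future__ import annotations

import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str],
                   weights: list[float]) -> str:
    """Deterministically map a user to a prompt variant (sticky A/B bucketing)."""
    if not variants or len(variants) != len(weights):
        raise ValueError("variants and weights must be non-empty and equal length")
    # Hash experiment + user so the same user can land in different buckets
    # across different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # float in [0, 1)
    total = sum(weights)
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight / total
        if bucket < cumulative:
            return variant
    return variants[-1]  # guard against floating-point rounding
```

For example, `assign_variant("user-42", "summary-v2-vs-v3", ["v2", "v3"], [0.5, 0.5])` always returns the same variant for `user-42`, so logged outcomes can be compared per prompt version.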

Key Strengths:

- Open Source: Fully self-hostable via Docker, ensuring data sovereignty.

- Caching: Built-in response caching can reduce LLM API costs for repeated requests.

- Low Latency: Designed to add minimal overhead to your API calls.
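The caching strength listed above comes down to one idea: if an identical request was already answered, return the stored response instead of paying for a new call. Below is a minimal exact-match cache keyed by a hash of the canonicalized request. It is a generic sketch, not Helicone's code, and `model_fn` is a hypothetical stand-in for the real API call.

```python
from __future__ import annotations

import hashlib
import json
from typing import Any, Callable


class ResponseCache:
    """Exact-match cache keyed by a hash of the full request payload."""

    def __init__(self, model_fn: Callable[[dict[str, Any]], str]) -> None:
        self._model_fn = model_fn  # hypothetical stand-in for the real API call
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(request: dict[str, Any]) -> str:
        # sort_keys makes logically identical requests serialize identically.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode()).hexdigest()

    def complete(self, request: dict[str, Any]) -> str:
        k = self.key(request)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        response = self._model_fn(request)
        self._store[k] = response
        return response
```

Exact-match caching is safest for deterministic settings (for example, temperature 0); with sampling enabled, a cache hit silently replaces a fresh, possibly different, completion.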

Limitations:

- UI Complexity: The wealth of data can be overwhelming for beginners.

- Self-Hosting Setup: Requires DevOps effort to manage and scale the self-hosted version.

Best For: Engineering teams who prioritize data privacy and observability.

Pricing: Free tier (limited monthly requests), Pro and Team plans (paid plans from $79/mo).

3. Langfuse: Open Source Tracing & Evaluation

Langfuse is another powerful open-source contender that focuses heavily on "tracing": visualizing the entire chain of execution for complex LLM applications. If you are building agents or RAG pipelines where a single user action triggers multiple model calls, Langfuse's trace view is essential. It combines prompt management with this tracing capability, allowing you to link a specific prompt version to its real-world performance in complex chains. It integrates smoothly with LangChain and LlamaIndex but works with any SDK.
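The "traces" and "spans" vocabulary is easier to grasp with a toy model: a trace is a tree of timed spans, and each span records which span was active when it started. The sketch below illustrates that structure only; `Tracer` and `span` are hypothetical names, not the Langfuse SDK.

```python
from __future__ import annotations

import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Span:
    span_id: str
    parent_id: str | None
    name: str
    start: float
    end: float | None = None


class Tracer:
    """Minimal tracer: each span records its parent, so a trace forms a tree."""

    def __init__(self) -> None:
        self.spans: list[Span] = []
        self._stack: list[str] = []  # ids of currently open spans

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(uuid.uuid4().hex, parent, name, time.perf_counter())
        self.spans.append(s)
        self._stack.append(s.span_id)
        try:
            yield s
        finally:
            # Close the span even if the wrapped step raised an exception.
            s.end = time.perf_counter()
            self._stack.pop()
```

A RAG request might then be wrapped as `with tracer.span("rag_pipeline"):` containing nested `retrieve` and `generate` spans, which is the shape a trace view renders as a timeline.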

Key Strengths:

- Deep Tracing: Top-tier visualization of multi-step chains and RAG pipelines.

- Dataset Evaluation: Run automated evaluations on your historical data.

- Developer Experience: Excellent SDKs and documentation.

Limitations:

- Learning Curve: Concepts like "traces" and "spans" require understanding distributed tracing.

- Younger Ecosystem: Fewer third-party integrations compared to older tools.

Best For: Developers building complex agents or RAG pipelines.

Pricing: Hobby (Free), Pro (usage-based: a base price, then a per-unit rate for additional usage).

4. Agenta: The Developer-First LLMOps Platform

Agenta takes a "developer-first" approach to prompt engineering. It allows you to build, test, and evaluate LLM apps directly from code. Unlike tools that try to hide the complexity, Agenta embraces it, offering a playground that feels like an IDE. It supports "variants", allowing you to test different combinations of prompts, models, and parameters side-by-side. Its evaluation framework is particularly strong, letting you define custom logic to grade model outputs automatically.
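Comparing variants with custom grading logic boils down to running every variant over the same dataset and averaging a score. The sketch below shows that loop with a simple exact-match grader; `Variant`, `compare_variants`, and `exact_match` are hypothetical names rather than Agenta's API, and `model_fn` stands in for a real model call.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Variant:
    name: str
    template: str
    temperature: float  # passed through to the (hypothetical) model function


def exact_match(output: str, expected: str) -> float:
    """Custom grader: 1.0 if the normalized output equals the expected answer."""
    return float(output.strip().lower() == expected.strip().lower())


def compare_variants(
    variants: list[Variant],
    dataset: list[tuple[dict[str, str], str]],
    model_fn: Callable[[str, float], str],
    grader: Callable[[str, str], float] = exact_match,
) -> dict[str, float]:
    """Return the mean grader score for each variant over the same dataset."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores: dict[str, float] = {}
    for v in variants:
        total = sum(
            grader(model_fn(v.template.format(**inputs), v.temperature), expected)
            for inputs, expected in dataset
        )
        scores[v.name] = total / len(dataset)
    return scores
```

Swapping `exact_match` for a different grader (substring match, a JSON schema check, or a second model acting as judge) changes the evaluation without touching the comparison loop.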

Key Strengths:

- Comparison View: Best interface for side-by-side comparison of prompt outputs.

- Code-First Evaluation: Define evaluation logic in Python code.

- Open Source: Available to self-host.

Limitations:

- Setup: Requires more initial configuration than cloud-only tools.

- Community Size: Smaller community than LangChain-based tools.

Best For: Engineers who want rigorous, code-based evaluation workflows.

Pricing: Free (limited users), Starter and Scale plans (paid plans from $49/mo).

5. Humanloop: High-Quality Feedback Loops

Humanloop focuses on the "human" aspect of RLHF (Reinforcement Learning from Human Feedback). It provides a polished interface for subject matter experts to review model outputs and provide corrections, which can then be used to fine-tune models or improve prompts.

It is an enterprise-grade platform used by major companies to ensure their AI products meet high quality standards. It unifies prompt management, evaluation, and fine-tuning data in one place.
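Turning expert feedback into training data is mostly a filtering and formatting step: keep approved outputs, substitute expert corrections, and drop rejected outputs with no correction. The sketch below writes chat-style JSONL; the `messages` layout resembles what several fine-tuning APIs accept, but check your provider's documentation. `Feedback` and `to_training_jsonl` are hypothetical, not Humanloop's API.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class Feedback:
    prompt: str
    model_output: str
    approved: bool
    correction: str | None = None  # expert-supplied rewrite, if any


def to_training_jsonl(records: list[Feedback], system: str) -> str:
    """Turn reviewed outputs into chat-style JSONL fine-tuning examples.

    Corrected outputs use the expert's text, approved outputs are kept as-is,
    and rejected outputs without a correction are dropped.
    """
    lines = []
    for r in records:
        if r.correction:
            target = r.correction
        elif r.approved:
            target = r.model_output
        else:
            continue
        example = {"messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": r.prompt},
            {"role": "assistant", "content": target},
        ]}
        lines.append(json.dumps(example))
    return "\n".join(lines)
```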

Key Strengths:

- Feedback Collection: Specialized tools for gathering and managing human feedback.

- Fine-Tuning Integration: Workflows that turn feedback into training data.

- Enterprise Polish: SOC 2 Type II compliant with strong access controls.

Limitations:

- Cost: Tailored for enterprise budgets; less accessible for individual developers.

- Focus: Less focused on low-level tracing than tools like Langfuse.

Best For: Enterprise teams scaling from prototype to production with strict quality requirements.

Pricing: Free Trial available, custom Enterprise pricing.

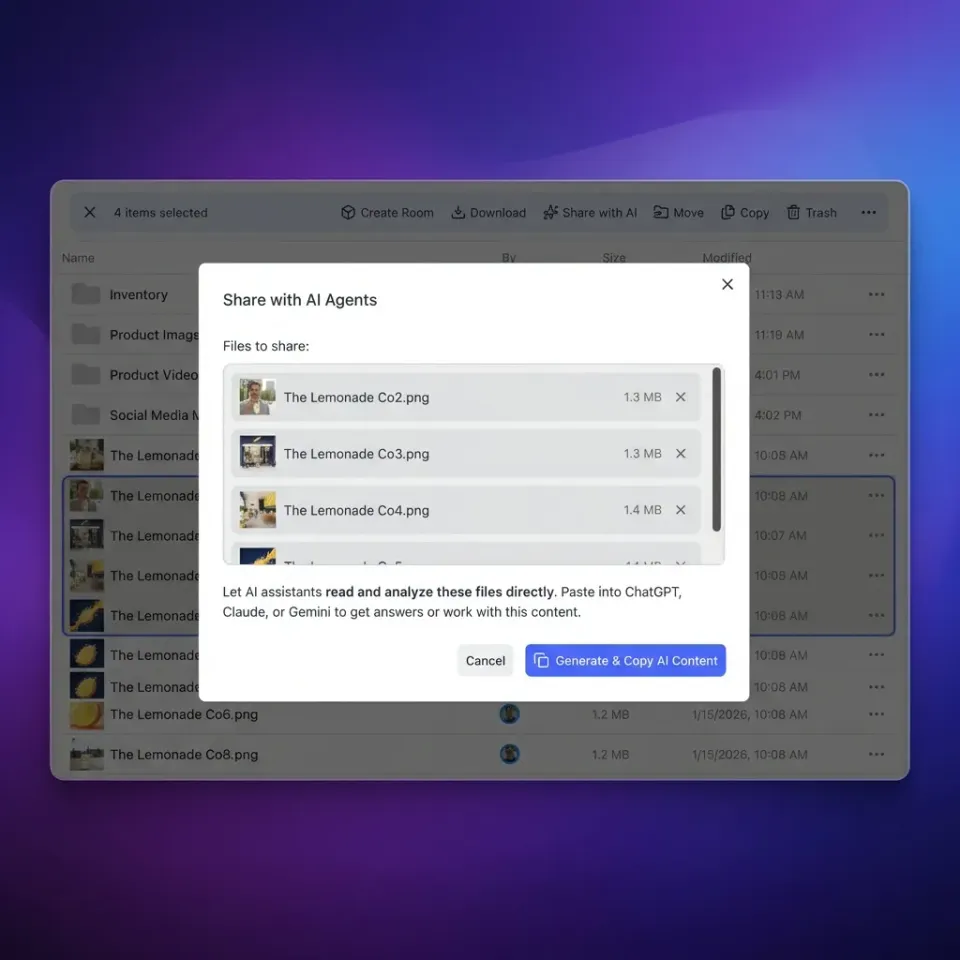

6. Fast.io: The Workspace for AI Agents

While other tools on this list focus on managing the text of prompts, Fast.io provides the environment where your agents actually live and work. It solves the "stateless" problem of AI agents by giving them a persistent file system and a suite of tools to interact with the world.

Fast.io acts as a long-term memory and tool layer for your agents. You can use any prompt management tool (like PromptLayer or Helicone) to optimize your prompt text, but deploy your agent with Fast.io to give it the ability to read, write, and remember.
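The "stateless" problem is easiest to see by contrast with an agent whose notes live in files: a new session can pick up where the last one stopped. The sketch below uses a local directory as a stand-in for a persistent workspace. It is not the Fast.io API; `FileMemory`, `remember`, and `recall` are hypothetical names.

```python
from __future__ import annotations

import json
from pathlib import Path


class FileMemory:
    """Agent memory persisted as a JSON file, so state survives across sessions.

    A local directory stands in for a persistent workspace; this is not the
    Fast.io API.
    """

    def __init__(self, root: Path) -> None:
        self.path = Path(root) / "memory.json"
        self.path.parent.mkdir(parents=True, exist_ok=True)

    def _load(self) -> dict[str, str]:
        if not self.path.exists():
            return {}
        return json.loads(self.path.read_text(encoding="utf-8"))

    def remember(self, key: str, value: str) -> None:
        data = self._load()
        data[key] = value
        # Write to a temp file, then atomically replace, so a crash mid-write
        # never leaves a truncated memory file behind.
        tmp = self.path.with_suffix(".tmp")
        tmp.write_text(json.dumps(data, indent=2), encoding="utf-8")
        tmp.replace(self.path)

    def recall(self, key: str) -> str | None:
        return self._load().get(key)
```

Creating a fresh `FileMemory` on the same directory simulates a new session: whatever the previous session remembered is still there.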

Key Strengths:

- Persistent Storage: Agents can read and write files that persist across sessions.

- 251 MCP Tools: Instant access to file manipulation, search, and more via Model Context Protocol.

- Intelligence Mode: Automatically indexes all files for RAG, allowing agents to "chat with" your data without setting up a vector database.

- Universal Compatibility: Works with Claude, OpenAI, or any agent framework via the MCP server.

Limitations:

- Different Scope: Not a prompt versioning tool itself; it complements them.

- MCP Focus: Best used with agents that support the Model Context Protocol.

Best For: Developers building autonomous agents that need state, memory, and tools.

Pricing: Free tier (includes storage and monthly credits), Pro ($12/mo).

7. Pezzo: Cloud-Native Prompt Management

Pezzo is a cloud-native, open-source platform designed to simplify the prompt design and deployment process. It offers a GraphQL-based interface and a centralized dashboard to manage all your prompts in one place.

Pezzo aims to be lightweight and easy to integrate, reducing the friction of adding prompt management to an existing stack. It provides immediate visibility into cost and token usage.
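Cost visibility is simple arithmetic over token counts: each call costs input tokens times the input rate plus output tokens times the output rate, and a dashboard rolls those up per prompt. The prices below are hypothetical placeholders, not any provider's real rates, and the helper names are illustrative rather than Pezzo's API.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's rates.
PRICES_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 3.00, "output": 15.00},
}


@dataclass(frozen=True)
class Usage:
    prompt_name: str
    model: str
    input_tokens: int
    output_tokens: int


def cost_usd(u: Usage) -> float:
    price = PRICES_PER_1K[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def cost_by_prompt(usages: list[Usage]) -> dict[str, float]:
    """Aggregate spend per prompt, the kind of rollup a cost dashboard shows."""
    totals: dict[str, float] = defaultdict(float)
    for u in usages:
        totals[u.prompt_name] += cost_usd(u)
    return dict(totals)
```

With these placeholder rates, a `model-a` call using 1,000 input and 1,000 output tokens costs (1000 × 0.50 + 1000 × 1.50) / 1000 = $2.00.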

Key Strengths:

- GraphQL API: Modern, type-safe integration for frontend and backend.

- Lightweight: Minimal bloat, focuses on the essentials.

- Cost Monitoring: Simple, clear views of token consumption.

Limitations:

- Feature Set: Less comprehensive than heavyweights like Langfuse or Helicone.

- Ecosystem: Smaller library of integrations.

Best For: Full-stack developers who want a modern, API-driven prompt manager.

Pricing: Free tier, Pro ($99/mo).

Comparison: Top Prompt Management Tools

Here is a quick comparison to help you decide which tool fits your stack.

| Tool | Best For | Open Source? | Key Pricing |
|------|----------|--------------|-------------|
| PromptLayer | Non-technical collaboration | No | Free / $49/mo |
| Helicone | Observability & Caching | Yes | Free / $79/mo |
| Langfuse | Tracing & RAG | Yes | Free / Usage-based |
| Agenta | Code-first evaluation | Yes | Free / $49/mo |
| Humanloop | Enterprise & RLHF | No | Custom Enterprise |
| Fast.io | Agent Memory & Tools | No | Free / $12/mo |
| Pezzo | GraphQL integration | Yes | Free / $99/mo |

Pro Tip: Most teams benefit from combining tools. For example, use PromptLayer for prompt editing and Fast.io to give your agent the persistent storage and tools it needs to execute those prompts effectively.

How to Choose the Right Tool

Selecting the right prompt management tool depends on your team's specific bottlenecks.

Choose PromptLayer or Humanloop if: You have non-technical domain experts (PMs, lawyers, doctors) who need to write and edit prompts. The visual interfaces of these tools are designed for collaboration.

Choose Helicone or Langfuse if: You are an engineering-heavy team building complex chains. The deep tracing and observability features will help you debug issues that a simple text editor can't show you.

Choose Fast.io if: You are building autonomous agents. Your primary challenge isn't just the prompt text, but giving the agent a place to store its work and tools to execute tasks. Fast.io solves the "execution environment" problem.

Frequently Asked Questions

What is prompt management?

Prompt management is the practice of versioning, testing, and deploying AI prompts like software code. It allows teams to track changes, collaborate on prompt design, and make sure that AI models produce consistent, high-quality outputs in production.

Why do I need a prompt management tool?

Without a tool, prompts are often buried in code or scattered across spreadsheets. A management tool provides a single source of truth, enables non-developers to update prompts without deploying code, and allows for systematic testing against datasets to prevent regressions.

Can I use Git for prompt management?

Yes, you can store prompt files in Git. However, specialized tools offer features Git cannot, such as visual diffs of outputs, A/B testing against live models, and cost tracking. Many teams sync their prompt management tool with Git for the best of both worlds.
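If prompts live in Git as plain files, each file's content hash can double as its version ID, which makes it easy to tie a logged request back to an exact commit. The sketch below computes the same object ID that `git hash-object` reports (SHA-1 over a `blob <size>\0` header plus the bytes). The one-prompt-per-`<name>.txt` directory layout is an assumption, and IDs can differ if Git applies line-ending conversion to the file.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def git_blob_id(content: bytes) -> str:
    """Compute the object ID `git hash-object` reports for these exact bytes."""
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


def load_prompts(directory: Path) -> dict[str, dict[str, str]]:
    """Load every *.txt prompt in a directory, tagged with its Git blob ID.

    The one-prompt-per-`<name>.txt` layout is an assumption for illustration.
    """
    prompts: dict[str, dict[str, str]] = {}
    for path in sorted(Path(directory).glob("*.txt")):
        raw = path.read_bytes()
        prompts[path.stem] = {
            "template": raw.decode("utf-8"),
            "version": git_blob_id(raw),
        }
    return prompts
```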

What is the Model Context Protocol (MCP)?

MCP is an open standard that allows AI models to connect to external data and tools. Fast.io uses MCP to give agents safe, controlled access to your files and its full suite of tools, effectively giving your agent "hands" to do work.
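Under the hood, MCP messages are JSON-RPC 2.0: a client lists a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds those request messages; the `read_file` tool and its `path` argument are hypothetical, since real tool names come from the server's `tools/list` response.

```python
from __future__ import annotations

import itertools
import json
from typing import Any

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def jsonrpc_request(method: str, params: dict[str, Any] | None = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover available tools, then invoke one. "read_file" and its "path"
# argument are hypothetical placeholders.
list_tools = jsonrpc_request("tools/list")
call_tool = jsonrpc_request(
    "tools/call", {"name": "read_file", "arguments": {"path": "notes/todo.md"}}
)
```

A real session starts with an `initialize` handshake and sends these messages over a transport such as stdio or HTTP; in practice an MCP client library handles both.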


Give Your Agents a Place to Work

Stop building stateless bots. Give your AI agents persistent memory, 251 tools, and a secure workspace with Fast.io.