How to Share Files Between Multiple AI Agents

Multi-agent file access lets AI agents read, write, and share files through a centralized storage system with proper access controls. This guide covers the architecture, common challenges such as file conflicts and race conditions, and best practices for reliable multi-agent file sharing in production systems, with practical examples.

What Is Multi-Agent File Access?

Multi-agent file access is how multiple autonomous AI agents read, write, and collaborate on files through a shared storage system. Instead of passing files directly between agents (which creates coupling and state management issues), agents interact with a centralized file store that provides coordination, versioning, and access control. In practice, one agent uploads a file to shared storage and passes the file identifier (not the file itself) to the next agent, which retrieves it. This approach lets agents work asynchronously, handle large files efficiently, and maintain a single source of truth for file state. Because data in a multi-agent system passes through several agents, each of which may read or write it, solid file handling is the foundation of reliable agentic workflows.


Why Multi-Agent Systems Need Shared File Storage

As AI systems evolve from single-agent chatbots to multi-agent workflows, file handling often becomes a bottleneck, and handing files off from one agent to the next is a frequently reported pain point.

Passing files between agents doesn't scale. Early multi-agent systems tried serializing files in API payloads, embedding them in context windows, or storing them in message queues. All three approaches fail at scale:

- API payloads: Limited by size restrictions (1-10MB typical), slow to transfer, no versioning

- Context windows: Consume valuable token budget, can't handle binary formats, disappear when context clears

- Message queues: Not designed for large objects, introduce coupling between agents

Shared storage solves coordination problems. When agents access files through a centralized system, you get:

- Single source of truth: No confusion about which agent has the latest version

- Asynchronous workflows: Agents don't block waiting for file transfers

- Better large file handling: Stream files without loading into memory

- Audit trails: Track which agent accessed or modified which file

- Access control: Restrict which agents can read or write specific files

Common Multi-Agent File Access Patterns

Real-world multi-agent systems commonly use the following patterns for file collaboration.

Sequential Pipeline Pattern

Agents process files in a defined order, each adding value before passing to the next agent.

How it works: Agent A uploads a file, stores the file ID in a task queue, and marks the task "ready". Agent B polls the queue, downloads the file, processes it, uploads the result as a new file, and passes that file ID to Agent C.
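The hand-off above can be sketched in a few lines of Python. This is a minimal, single-process illustration, not Fast.io's API: `SharedStore`, `Task`, and `run_pipeline` are hypothetical names, an in-memory dict stands in for shared storage, and a `queue.Queue` stands in for the task queue. What it shows is that only file IDs travel through the queue, while every stage uploads its output as a new file.

```python
from __future__ import annotations

import queue
import uuid
from dataclasses import dataclass
from typing import Callable, Dict, List


class SharedStore:
    """Hypothetical in-memory stand-in for shared storage; upload() returns an opaque file ID."""

    def __init__(self) -> None:
        self._files: Dict[str, bytes] = {}

    def upload(self, data: bytes) -> str:
        file_id = uuid.uuid4().hex
        self._files[file_id] = data
        return file_id

    def download(self, file_id: str) -> bytes:
        return self._files[file_id]


@dataclass
class Task:
    stage: int    # index of the agent that should pick this task up next
    file_id: str  # only the ID travels through the queue, never the file bytes


Stage = Callable[[bytes], bytes]


def run_pipeline(store: SharedStore, raw: bytes, stages: List[Stage]) -> str:
    tasks: queue.Queue = queue.Queue()
    tasks.put(Task(stage=0, file_id=store.upload(raw)))  # Agent A: upload, mark "ready"
    while True:
        task = tasks.get()  # the next agent polls the queue
        if task.stage == len(stages):
            return task.file_id  # ID of the final result
        result = stages[task.stage](store.download(task.file_id))
        # Upload the result as a new file and hand its ID to the next stage
        tasks.put(Task(stage=task.stage + 1, file_id=store.upload(result)))


if __name__ == "__main__":
    store = SharedStore()
    final_id = run_pipeline(store, b"  raw scan text  ", [bytes.strip, bytes.upper])
    print(store.download(final_id))
```

The stages run in one loop here for brevity. In a real deployment each stage would be a separate agent process and the queue an external service; a webhook could replace the polling `get()` call.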

Use cases:

- Document processing (OCR → extraction → summarization → classification)

- Media workflows (upload → transcode → thumbnail → metadata extraction)

- Data pipelines (ingest → validate → transform → load)

Fast.io implementation: Each agent creates files in a shared workspace. Use webhooks to trigger downstream agents when files are added instead of polling.

Concurrent Read Pattern

Multiple agents read the same source file simultaneously to perform different analyses.

How it works: Agent A uploads a file and notifies multiple downstream agents. Agents B, C, and D all download the same file and process it independently, writing their results to separate output files.
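A minimal local sketch of this fan-out, assuming the shared file is visible as a path on disk (a stand-in for a shared workspace). `run_agent`, `fan_out`, and the toy analyzers in `ANALYZERS` are hypothetical. Each worker reads the source on its own and writes only to its own `<name>.txt`, so no two agents ever write the same file.

```python
import hashlib
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict, List

Analyzer = Callable[[bytes], str]


def run_agent(name: str, analyze: Analyzer, source: Path, out_dir: Path) -> Path:
    data = source.read_bytes()          # each agent reads the shared source independently
    out_path = out_dir / f"{name}.txt"  # ...and writes only to its own output file
    out_path.write_text(analyze(data))
    return out_path


def fan_out(source: Path, out_dir: Path, analyzers: Dict[str, Analyzer]) -> List[Path]:
    with ThreadPoolExecutor(max_workers=len(analyzers)) as pool:
        futures = [pool.submit(run_agent, name, fn, source, out_dir) for name, fn in analyzers.items()]
        return [f.result() for f in futures]


# Toy "agents": each performs a different analysis of the same bytes
ANALYZERS: Dict[str, Analyzer] = {
    "length": lambda d: str(len(d)),
    "words": lambda d: str(len(d.split())),
    "sha256": lambda d: hashlib.sha256(d).hexdigest(),
}

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "contract.txt"
        source.write_bytes(b"the quick brown fox")
        for path in fan_out(source, Path(tmp), ANALYZERS):
            print(path.name, path.read_text())
```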

Use cases:

- Multi-model analysis (run GPT-4, Claude, and Gemini on the same document)

- Parallel feature extraction (extract text, images, metadata, and structure simultaneously)

- A/B testing (compare outputs from different agent versions)

Fast.io implementation: Share a read-only file with multiple agents. Each agent writes results to their own workspace to avoid write conflicts.

Collaborative Editing Pattern

Multiple agents contribute to building or refining the same file over time.

How it works: Agents acquire a file lock before modifying a shared file, make their edits, and release the lock. Other agents wait for the lock to become available before proceeding.
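A minimal sketch of that acquire/edit/release cycle, assuming a single process and an in-memory lock table. `LeaseLockManager` and its methods are hypothetical illustrations, not Fast.io's lock endpoints. Each lock is a lease that expires after `ttl` seconds, so an agent that crashes mid-edit cannot block the file forever, and `acquire` gives up after `wait` seconds instead of waiting indefinitely.

```python
import threading
import time
from typing import Dict, Tuple


class LeaseLockManager:
    """Hypothetical in-process exclusive file lock with automatic expiry (a lease)."""

    def __init__(self, ttl: float = 30.0) -> None:
        self._ttl = ttl
        self._mutex = threading.Lock()
        self._leases: Dict[str, Tuple[str, float]] = {}  # file_id -> (holder, expires_at)

    def try_acquire(self, file_id: str, agent_id: str) -> bool:
        with self._mutex:
            now = time.monotonic()
            lease = self._leases.get(file_id)
            # Grant if unlocked, if the previous lease expired, or to renew our own lease
            if lease is None or lease[1] <= now or lease[0] == agent_id:
                self._leases[file_id] = (agent_id, now + self._ttl)
                return True
            return False

    def acquire(self, file_id: str, agent_id: str, wait: float, poll: float = 0.05) -> bool:
        deadline = time.monotonic() + wait
        while not self.try_acquire(file_id, agent_id):
            if time.monotonic() >= deadline:
                return False  # give up instead of blocking forever
            time.sleep(poll)
        return True

    def release(self, file_id: str, agent_id: str) -> bool:
        with self._mutex:
            lease = self._leases.get(file_id)
            if lease is not None and lease[0] == agent_id:
                del self._leases[file_id]
                return True
            return False  # caller is not the current holder
```

A design caveat: if an edit can outlast the lease, the holder should renew it (calling `try_acquire` again extends its own lease); otherwise another agent may take the lock while the first is still writing.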

Use cases:

- Research report compilation (multiple agents contribute sections)

- Code review (multiple agents suggest improvements to the same file)

- Collaborative summarization (agents refine summaries iteratively)

Fast.io implementation: Use file locks to prevent concurrent modifications. Fast.io provides lock acquisition and release endpoints to coordinate agent access.

Producer-Consumer Pattern

One agent continuously generates files while multiple consumer agents process them as they arrive.

How it works: A producer agent uploads files to a shared folder. Consumer agents subscribe to file upload events via webhooks and process new files as they appear.
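The same flow can be sketched locally with a thread-safe queue standing in for webhook delivery: in a real setup, the HTTP handler receiving each webhook would push the event onto a queue like this one. The event shape (`{"type": "file.added", "file_id": ...}`) and the names `consumer`, `run`, and `STOP` are assumptions for illustration, not Fast.io's payload format.

```python
from __future__ import annotations

import queue
import threading
from typing import List, Tuple

STOP = object()  # sentinel that tells a consumer to shut down


def consumer(name: str, events: queue.Queue, processed: List[Tuple[str, str]], lock: threading.Lock) -> None:
    while True:
        event = events.get()  # blocks until the producer publishes something
        if event is STOP:
            return
        # A real consumer would download event["file_id"] from shared storage here
        with lock:
            processed.append((name, event["file_id"]))


def run(n_files: int, n_consumers: int) -> List[Tuple[str, str]]:
    events: queue.Queue = queue.Queue()
    processed: List[Tuple[str, str]] = []
    lock = threading.Lock()
    workers = [
        threading.Thread(target=consumer, args=(f"consumer-{i}", events, processed, lock))
        for i in range(n_consumers)
    ]
    for w in workers:
        w.start()
    for i in range(n_files):  # producer: one "file added" event per upload
        events.put({"type": "file.added", "file_id": f"file-{i}"})
    for _ in workers:  # one STOP per consumer, queued after every file event
        events.put(STOP)
    for w in workers:
        w.join()
    return processed
```

Because each event is removed from the queue by exactly one consumer, every file is claimed once even with several consumers running.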

Use cases:

- Real-time data ingestion (scraper agent feeds processing agents)

- Batch job distribution (one agent generates tasks, workers claim and process them)

- Event-driven workflows (file arrival triggers downstream processing)

Fast.io implementation: Producer writes to a workspace, consumers listen for webhook events. Fast.io sends notifications when files are added to watched folders.

File Conflicts and Race Conditions

File conflicts are a common source of multi-agent failures, so understanding and preventing them is essential for production systems.

Lost updates: Two agents read the same file, modify it independently, and both write back. The second write overwrites the first agent's changes without merging them.

Solution: Use file locks or compare-and-swap operations. Fast.io file locks let agents acquire exclusive write access, preventing concurrent modifications. Only the lock holder can write; other agents wait or fail gracefully.

Read-modify-write race: Agent A reads a file, Agent B reads the same file, Agent A writes changes, Agent B writes changes based on stale data. Agent A's work is lost.

Solution: Implement optimistic locking with version checks. Before writing, verify the file version matches what you read. If the version changed, re-read and retry.
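A minimal sketch of version-checked writes, assuming an in-memory store. `VersionedStore`, `write_if_version`, and `update_with_retry` are hypothetical names, not a real storage API. A write succeeds only if the caller's version still matches the stored one; otherwise the caller re-reads and retries.

```python
import threading
from dataclasses import dataclass
from typing import Callable, Dict


class VersionConflict(Exception):
    """Raised when the file changed between our read and our write."""


@dataclass(frozen=True)
class Snapshot:
    data: str
    version: int  # 0 means "file does not exist yet"


class VersionedStore:
    """Hypothetical in-memory store that supports compare-and-swap writes."""

    def __init__(self) -> None:
        self._files: Dict[str, Snapshot] = {}
        self._mutex = threading.Lock()

    def read(self, file_id: str) -> Snapshot:
        with self._mutex:
            return self._files.get(file_id, Snapshot("", 0))

    def write_if_version(self, file_id: str, data: str, expected_version: int) -> Snapshot:
        with self._mutex:
            current = self._files.get(file_id, Snapshot("", 0))
            if current.version != expected_version:
                raise VersionConflict(f"{file_id}: expected v{expected_version}, found v{current.version}")
            new = Snapshot(data, current.version + 1)
            self._files[file_id] = new
            return new


def update_with_retry(store: VersionedStore, file_id: str,
                      transform: Callable[[str], str], max_attempts: int = 10) -> Snapshot:
    for _ in range(max_attempts):
        snap = store.read(file_id)  # read without holding any lock
        try:
            return store.write_if_version(file_id, transform(snap.data), snap.version)
        except VersionConflict:
            continue  # another agent wrote first: re-read fresh data and retry
    raise VersionConflict(f"{file_id}: gave up after {max_attempts} attempts")
```

Each conflict means some other writer succeeded in between, so with N competing writers a single update fails at most N - 1 times; `max_attempts` bounds the retry loop for everything else.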

Directory listing races: Agent A lists files in a folder, Agent B adds a new file, Agent A processes the list it retrieved (which is now incomplete).

Solution: Use webhook notifications instead of polling directory listings. Fast.io webhooks notify agents when files are added, so each new file triggers processing instead of depending on a listing that may already be stale.

Partial write visibility: Agent A starts uploading a large file, Agent B sees the file appear in the directory listing before the upload completes, and tries to process an incomplete file.

Solution: Use atomic uploads, or write to a temporary location and move the file into place when complete. Fast.io chunked uploads are atomic: files appear only after the final chunk is committed.
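The "write to a temporary location, then move" technique looks like this on a local POSIX filesystem. `atomic_write` is a hypothetical helper built on the standard library's `tempfile.mkstemp` and `os.replace`; the rename is atomic only when the temp file and the target are on the same filesystem, which is why the temp file is created in the target's directory.

```python
import contextlib
import os
import tempfile
from pathlib import Path


def atomic_write(path: Path, data: bytes) -> None:
    """Readers see either the old file or the complete new one, never a partial file."""
    # Same directory as the target, so the final rename stays on one filesystem
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, prefix=f".{path.name}.", suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # ensure the bytes are on disk before publishing
        os.replace(tmp_name, path)  # publish with a single rename over the target
    except BaseException:
        with contextlib.suppress(FileNotFoundError):
            os.unlink(tmp_name)  # don't leave a half-written temp file behind
        raise
```

Agents that poll a directory should skip the dot-prefixed `.tmp` names, since those are in-progress writes.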

File Locking Strategies for Multi-Agent Systems

File locks are the primary mechanism for preventing concurrent write conflicts. Choose the right locking strategy for your workflow.

Pessimistic locking (acquire before reading): Agent acquires a lock before reading the file, performs work, writes changes, and releases the lock. Other agents cannot read or write while the lock is held.

When to use: Short operations where you want guaranteed exclusive access. Works well for quick edits or when read-modify-write must be atomic.

Drawback: Blocks all other agents, even readers. Can create bottlenecks if agents hold locks for long periods.

Optimistic locking (check before writing): Agent reads the file without locking, performs work, and attempts to acquire a lock only when writing. If another agent modified the file in the meantime, retry with fresh data.

When to use: Long-running operations where holding a lock the entire time would block too many agents. Works well for independent transformations that can be safely retried.

Drawback: Requires retry logic and may waste computation if conflicts are frequent.

Shared/exclusive locks (readers-writer pattern): Multiple agents can hold read locks simultaneously, but write locks are exclusive. Acquiring a write lock waits for all read locks to release.

When to use: Workloads with many readers and occasional writers. Maximizes concurrency while preventing write conflicts.

Drawback: More complex to implement correctly. Fast.io file locks support this pattern natively.
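To show the semantics, here is a minimal in-process readers-writer lock built on `threading.Condition`. `ReadWriteLock` is a hypothetical helper for threads within one process, not Fast.io's distributed lock. It is writer-preferring: once a writer is waiting, new readers hold off, so a steady stream of readers cannot starve writers.

```python
import threading
from contextlib import contextmanager
from typing import Iterator


class ReadWriteLock:
    """Many concurrent readers, or exactly one writer."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._readers = 0
        self._writer = False
        self._waiting_writers = 0

    @contextmanager
    def read(self) -> Iterator[None]:
        with self._cond:
            while self._writer or self._waiting_writers:
                self._cond.wait()  # defer to active or queued writers
            self._readers += 1
        try:
            yield
        finally:
            with self._cond:
                self._readers -= 1
                if self._readers == 0:
                    self._cond.notify_all()  # a waiting writer may proceed

    @contextmanager
    def write(self) -> Iterator[None]:
        with self._cond:
            self._waiting_writers += 1
            while self._writer or self._readers:
                self._cond.wait()  # wait for all read locks to be released
            self._waiting_writers -= 1
            self._writer = True
        try:
            yield
        finally:
            with self._cond:
                self._writer = False
                self._cond.notify_all()
```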

Lock timeouts and deadlock prevention: Always set timeouts on lock acquisition. If an agent crashes while holding a lock, the timeout ensures the lock is automatically released. Use a consistent lock ordering if agents need to acquire multiple locks. Fast.io's file locking API handles timeouts automatically and provides lock status endpoints so agents can check if a lock is available before waiting.
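Timeouts and consistent ordering combine naturally when an agent needs several files at once. A minimal in-process sketch, assuming one `threading.Lock` per file ID; `lock_for` and `acquire_all` are hypothetical helpers. Sorting the IDs gives every agent the same acquisition order, so two agents can never each hold one lock while waiting for the other's, and the timeout turns any remaining stall into an error.

```python
from __future__ import annotations

import threading
from contextlib import contextmanager
from typing import Dict, Iterable, Iterator, List

_locks: Dict[str, threading.Lock] = {}
_registry_mutex = threading.Lock()


def lock_for(file_id: str) -> threading.Lock:
    with _registry_mutex:
        return _locks.setdefault(file_id, threading.Lock())


@contextmanager
def acquire_all(file_ids: Iterable[str], timeout: float = 5.0) -> Iterator[None]:
    held: List[threading.Lock] = []
    try:
        # One global order (sorted IDs) for every agent prevents A->B / B->A deadlocks
        for file_id in sorted(set(file_ids)):
            lock = lock_for(file_id)
            if not lock.acquire(timeout=timeout):
                raise TimeoutError(f"could not lock {file_id!r} within {timeout}s")
            held.append(lock)
        yield
    finally:
        for lock in reversed(held):  # release everything we managed to take
            lock.release()
```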

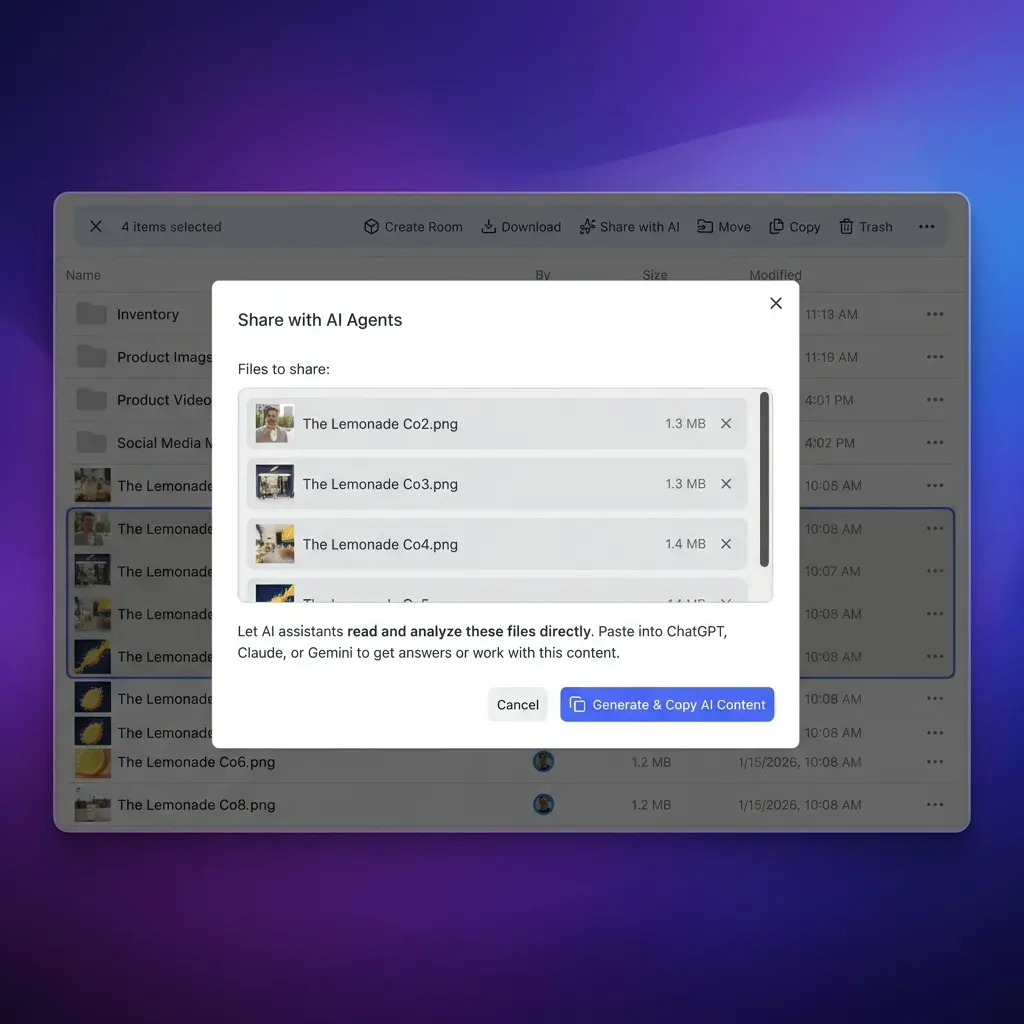

How Fast.io Handles Multi-Agent File Access

Fast.io provides the infrastructure multi-agent systems need for reliable file sharing, with features built for AI agents.

Agent workspaces: Each agent can create and manage workspaces just like human users. A workspace is a shared folder with permissions, activity tracking, and optional AI indexing. Agents organize files by project, client, or workflow stage.

File locks: Acquire and release file locks through the API to prevent concurrent write conflicts. Locks support read/write modes and automatic timeout to handle agent failures.

Webhooks: Receive real-time notifications when files are uploaded, modified, or accessed. Build reactive workflows without polling. Webhooks eliminate the directory listing race condition common in polling-based systems.

URL Import: Agents can import files from external sources (Google Drive, OneDrive, Box, Dropbox) without downloading locally. Useful for multi-cloud workflows or when agents run in serverless environments with no persistent disk.

Ownership transfer: An agent can build a complete workspace, populate it with files, and transfer ownership to a human user while keeping admin access. This lets agents prepare deliverables for human review or client handoff.

Intelligence Mode and RAG: When enabled on a workspace, Fast.io auto-indexes files for semantic search and Q&A. Agents can query workspace contents in natural language and receive cited answers, which supports knowledge-sharing across agent teams.

MCP integration: Fast.io provides 251 MCP tools via Streamable HTTP and SSE transport. MCP-compatible agents (Claude Desktop, Cursor, etc.) get zero-config file access with session state managed in Durable Objects.

OpenClaw integration: Install via clawhub install dbalve/fast-io for natural language file management with any LLM. No config files, no environment variables, just instant file operations.

Free agent tier: AI agents get their own accounts with 50GB free storage, 1GB max file size, and 5,000 monthly credits. No credit card required, no trial, no expiration. Agents get the same features as human users, with programmatic access.

Architecture Patterns for Multi-Agent Storage

Design your storage layer to match your multi-agent workflow requirements.

Workspace per workflow: Create a dedicated workspace for each multi-agent workflow instance. Agents involved in that workflow share access to the workspace. When the workflow completes, archive or delete the workspace.

Benefits: Clean isolation between workflows, easy cleanup, clear access boundaries.

Use case: Processing customer support tickets where each ticket gets its own workspace with relevant agents.

Workspace per agent: Each agent gets a private workspace for its own files, plus access to shared workspaces for collaboration.

Benefits: Agents maintain state across workflows, clear ownership, agents can organize files how they prefer.

Use case: Research agents that accumulate knowledge over time and contribute to multiple projects.

Workspace per project: All agents working on a project share a single workspace. Use folders to organize by agent or file type.

Benefits: Simple, centralized view of all project files, easy for humans to audit.

Use case: Content production pipelines where multiple agents contribute to building a final deliverable.

Hybrid (folders within shared workspaces): Create agent-specific folders within a shared workspace. Each agent writes to its own folder but can read from others.

Benefits: Combines organization and sharing, reduces permissions overhead.

Use case: Data pipelines where each stage (ingest, validate, transform, export) gets a folder.

Best Practices for Multi-Agent File Systems

Follow these guidelines to build reliable multi-agent file workflows.

Use file identifiers, not file paths. Paths can change (files move, workspaces rename). Store file IDs in task metadata and resolve them to paths at runtime. Fast.io provides stable file IDs that persist across moves.

Make operations idempotent. Agents should produce the same output when processing the same input file multiple times. Use content-based naming (hash of input) or check if the output file already exists before processing.
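Content-based naming and the "check before processing" guard fit in a few lines. `output_path_for` and `process_once` are hypothetical helpers working on local paths. If two agents race past the `exists()` check, both compute the same deterministic output for the same input, so the duplicate write is harmless; that is exactly what idempotency buys.

```python
import hashlib
from pathlib import Path
from typing import Callable, Tuple


def output_path_for(data: bytes, out_dir: Path, stage: str) -> Path:
    # Same input bytes always map to the same output name
    digest = hashlib.sha256(data).hexdigest()[:16]
    return out_dir / f"{stage}-{digest}.out"


def process_once(input_path: Path, out_dir: Path, stage: str,
                 transform: Callable[[bytes], bytes]) -> Tuple[Path, bool]:
    data = input_path.read_bytes()
    out = output_path_for(data, out_dir, stage)
    if out.exists():
        return out, False  # this exact input was already processed: skip the work
    out.write_bytes(transform(data))
    return out, True
```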

Version your file formats. When agents produce structured files (JSON, CSV, etc.), include a version field. If the schema changes, downstream agents can detect and handle older versions gracefully.
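A sketch of a versioned JSON output and a reader that accepts older versions. The `schema_version` field and the "v1 stored keywords as one comma-separated string" layout are hypothetical examples of a schema change, and `write_summary`/`read_summary` are illustrative names.

```python
import json
from typing import Any, Dict, List

SCHEMA_VERSION = 2


def write_summary(summary: str, keywords: List[str]) -> str:
    return json.dumps({"schema_version": SCHEMA_VERSION, "summary": summary, "keywords": keywords})


def read_summary(raw: str) -> Dict[str, Any]:
    doc = json.loads(raw)
    version = doc.get("schema_version", 1)  # files written before versioning count as v1
    if version == 1:
        # Hypothetical v1 layout: keywords were one comma-separated string
        keywords = [k.strip() for k in doc.get("keywords", "").split(",") if k.strip()]
        return {"summary": doc["summary"], "keywords": keywords}
    if version == 2:
        return {"summary": doc["summary"], "keywords": list(doc["keywords"])}
    raise ValueError(f"unsupported schema_version: {version}")  # fail loudly, don't guess
```

Rejecting unknown versions outright is a deliberate choice: a downstream agent that silently misreads a newer format is harder to debug than one that stops.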

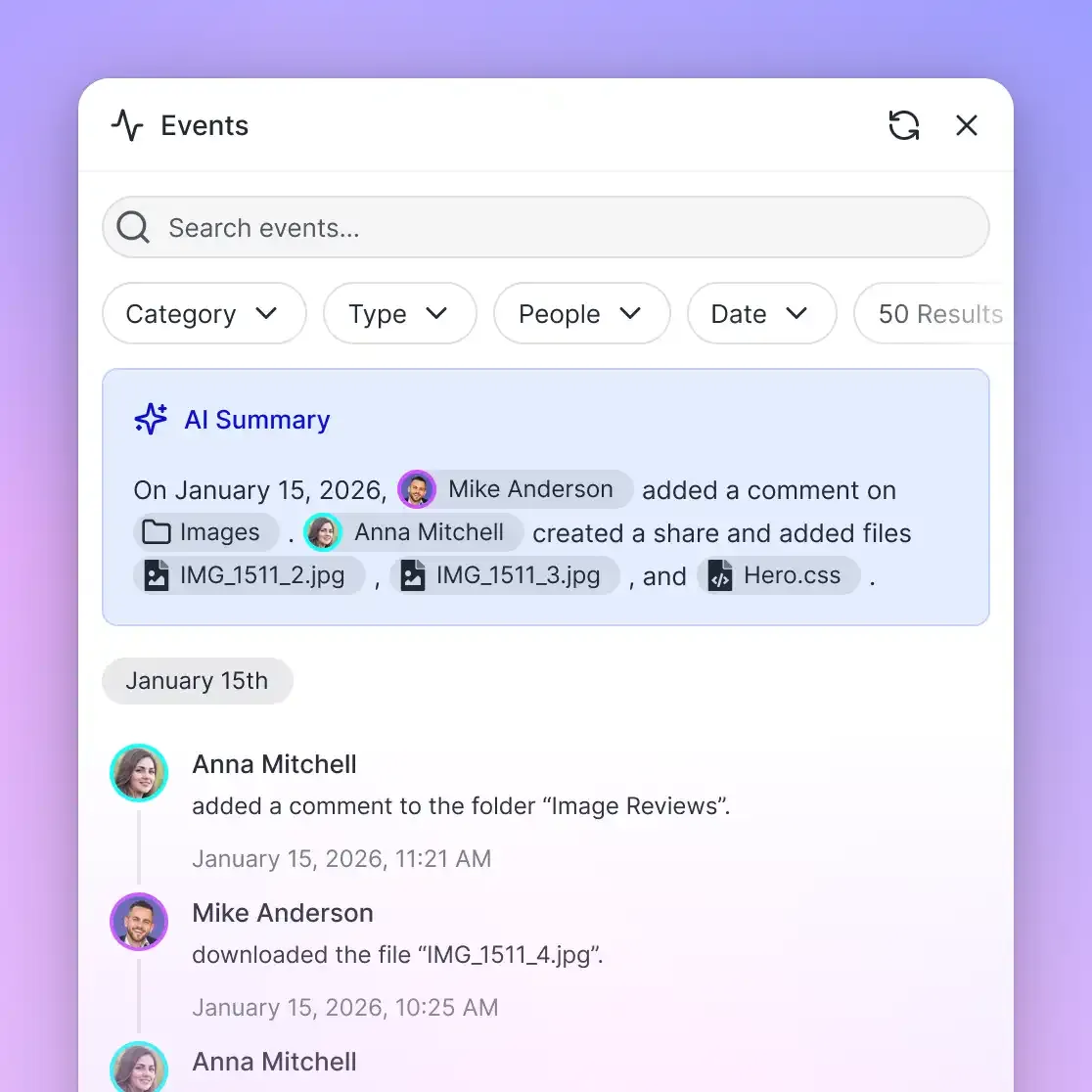

Log all file operations. Record when agents create, read, update, or delete files. Include agent ID, timestamp, file ID, and operation type. Fast.io provides automatic audit logs for all file events.
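For your own agents' logs, one JSON object per line with the fields listed above is easy to grep and to ingest into log tooling. `log_file_op` and the logger name `agent.file_ops` are hypothetical; the sketch uses only the standard `logging` and `json` modules.

```python
import json
import logging
from datetime import datetime, timezone

OPERATIONS = {"create", "read", "update", "delete"}
logger = logging.getLogger("agent.file_ops")


def log_file_op(agent_id: str, file_id: str, operation: str) -> str:
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation!r}")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC avoids timezone confusion across agents
        "agent_id": agent_id,
        "file_id": file_id,
        "operation": operation,
    }
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line
```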

Set file retention policies. Decide how long intermediate files should be kept. Create cleanup jobs that delete old files after a retention period. Fast.io supports expiration dates on files and folders.
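A cleanup job can be as small as the sketch below, which deletes top-level files in a local folder whose last modification is older than the retention period. `delete_expired` is a hypothetical helper; the `now` parameter exists only so the job can be tested without waiting.

```python
import time
from pathlib import Path
from typing import List, Optional


def delete_expired(folder: Path, max_age_seconds: float, now: Optional[float] = None) -> List[Path]:
    now = time.time() if now is None else now
    deleted: List[Path] = []
    for entry in folder.iterdir():
        # Only regular files; age is measured from the last modification time
        if entry.is_file() and now - entry.stat().st_mtime > max_age_seconds:
            entry.unlink()
            deleted.append(entry)
    return deleted
```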

Use descriptive file names. Include agent name, timestamp, and operation in file names. Example: summarizer-[timestamp]-summary.txt. This makes debugging and audit trails much easier.

Add retry with exponential backoff. When file operations fail (lock held, network timeout, etc.), retry with increasing delays. Set a maximum retry limit to avoid infinite loops.
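A generic sketch of retry with capped exponential backoff. `retry_with_backoff` is a hypothetical helper; it retries only the exception types you list, and it randomizes each wait between zero and the current cap ("full jitter") so many agents hitting the same held lock do not retry in lockstep. The `sleep` parameter is injectable for testing.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    op: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return op()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # retry limit reached: surface the last error
            cap = min(max_delay, base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ... up to max_delay
            sleep(random.uniform(0, cap))
    raise RuntimeError("unreachable")
```

Errors that are not transient (for example a permission error) are deliberately left out of `retry_on`, so they fail immediately instead of burning the retry budget.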

Monitor file system metrics. Track storage usage, file count, operation latency, and error rates. Set alerts for unusual patterns (sudden spike in files, high error rate, etc.).

Security and Access Control for Agent File Systems

Multi-agent systems need strong security to prevent unauthorized access and data leaks.

Principle of least privilege: Grant each agent access only to files it needs. Don't give all agents admin access to all workspaces. Use read-only permissions when agents only need to consume files.

Agent authentication: Each agent should have its own API credentials. Avoid shared API keys across multiple agents. If one agent is compromised, you can revoke its access without affecting others.

Workspace permissions: Use workspace-level permissions to group related agents. For example, a "data-processing" workspace accessible to ingestion, validation, and transformation agents, but not to external-facing agents.

Audit all agent actions: Log every file read, write, and permission change with the agent's identity. Audit logs help diagnose issues and detect malicious behavior.

Encrypt sensitive files: Use encryption at rest and in transit. Fast.io encrypts all files by default. For extra-sensitive data, encrypt files before upload using agent-managed keys.

Add approval workflows for high-risk operations: For operations like deleting large batches of files, transferring ownership, or changing permissions, require approval from a human or supervisor agent before executing.

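Watermark agent-generated files: Embed metadata identifying which agent created or modified a file. This records provenance; to also detect unauthorized modifications, the metadata must be tamper-evident (for example, signed), because plain metadata can be edited by anyone with write access.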
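One way to make provenance metadata tamper-evident is to sign it with an HMAC over the agent ID and a hash of the content, using Python's standard `hmac` and `hashlib` modules. The record layout and the helpers `make_provenance` and `verify_provenance` are hypothetical. Note that HMAC uses a shared secret, so anyone holding the key can sign; per-agent non-repudiation would need asymmetric signatures instead.

```python
import hashlib
import hmac
import json
from typing import Dict


def _sign(agent_id: str, content_sha256: str, key: bytes) -> str:
    # Canonical JSON so signer and verifier MAC exactly the same bytes
    payload = json.dumps({"agent_id": agent_id, "sha256": content_sha256}, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_provenance(content: bytes, agent_id: str, key: bytes) -> Dict[str, str]:
    digest = hashlib.sha256(content).hexdigest()
    return {"agent_id": agent_id, "sha256": digest, "signature": _sign(agent_id, digest, key)}


def verify_provenance(content: bytes, record: Dict[str, str], key: bytes) -> bool:
    # Recompute from the current content: editing the file or the record breaks the match
    expected = _sign(record["agent_id"], hashlib.sha256(content).hexdigest(), key)
    return hmac.compare_digest(expected, record.get("signature", ""))
```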

Frequently Asked Questions

How do AI agents share data without passing files directly?

AI agents share data by storing files in centralized cloud storage and passing file identifiers (not the files themselves) between agents. One agent uploads a file and gets back a file ID, which it includes in the task metadata for the next agent. The downstream agent retrieves the file using that ID. This pattern keeps agents decoupled, handles large files efficiently, and provides a single source of truth for file state.

Can multiple AI agents access the same file simultaneously?

Yes, multiple agents can read the same file concurrently without conflicts. For write operations, use file locks to coordinate access. Fast.io provides file locking APIs where an agent acquires a lock before writing, which prevents other agents from modifying the file until the lock is released. Read locks allow multiple readers, while write locks are exclusive. Lock timeouts ensure crashed agents don't hold locks indefinitely.

What is agent-to-agent communication in multi-agent systems?

Agent-to-agent communication refers to how autonomous agents coordinate and share information. Common patterns include message passing (via queues or pub/sub), shared memory (databases or file storage), and direct API calls. File-based communication is popular for large datasets where agents upload files to shared storage and notify other agents via webhooks or task queues. This approach decouples agents and handles data too large for message payloads.

How do you prevent file conflicts in multi-agent systems?

Prevent file conflicts using file locks (acquire exclusive access before writing), optimistic locking (check file version before writing and retry if changed), atomic operations (uploads appear only when complete), and webhooks instead of polling (get notified immediately when files change). Fast.io provides file locks with automatic timeouts, atomic chunked uploads, and webhook notifications to prevent the most common conflict scenarios.

What storage system works best for multi-agent file sharing?

Cloud storage with programmatic access works best for multi-agent systems. Look for features like persistent storage (files don't expire), API access for all operations, file locks for conflict prevention, webhook support for reactive workflows, and usage-based pricing that doesn't charge per agent. Fast.io provides all of these with a free 50GB tier for AI agents, 251 MCP tools, built-in RAG, and ownership transfer to humans.

How does Fast.io's file locking work for AI agents?

Fast.io's file locking API lets agents acquire read or write locks on files via REST endpoints. Write locks are exclusive (only one agent can hold a write lock at a time), while multiple agents can hold read locks simultaneously. Locks have automatic timeouts so crashed agents don't permanently block files. Agents can check lock status before attempting to acquire, and can release locks explicitly when done. This prevents concurrent write conflicts in multi-agent workflows.

What is the difference between ephemeral and persistent agent storage?

Ephemeral storage deletes files after a period of inactivity or when the owning agent or session is deleted. Persistent storage (like Fast.io) keeps files indefinitely until explicitly deleted. For multi-agent systems, persistent storage is critical because workflows often span hours or days, files are reused across multiple workflow runs, and audit trails require long-term file retention. Fast.io provides persistent storage with 50GB free for agents.

Can AI agents transfer file ownership to human users?

Yes, Fast.io supports ownership transfer where an agent creates an organization, builds workspaces and files, and then transfers ownership to a human user. The agent retains admin access after the transfer. This lets agents prepare complete deliverables (like data rooms, client portals, or project folders) and hand them off to humans for final review or client delivery. It's one of the key features for true human-agent collaboration.


Get started with multi-agent file access on Fast.io

Fast.io provides AI agents with persistent storage, file locks, webhooks, and 251 MCP tools. Get 50GB free storage and 5,000 monthly credits with no credit card required.