How to Set Up an AI Agent Sandbox Environment

An AI agent sandbox is an isolated environment where agents can execute code, access files, and perform actions without affecting production systems. This guide covers the four core components of agent sandboxing: compute isolation, file system boundaries, network controls, and storage limits. It also walks through a tiered deployment strategy that scales from development to production.

What Is an AI Agent Sandbox?

An AI agent sandbox is a controlled execution environment that isolates agent operations from production systems. The sandbox creates strict boundaries around what an agent can access, modify, and execute.

The core purpose is risk containment. When an agent runs code, reads files, or makes network requests, those actions happen inside the sandbox. If something goes wrong (a hallucinated command, a runaway loop, or an unexpected API call), the damage stays contained within the boundary.

A well-designed sandbox consists of four layers:

- Compute isolation: The agent runs in a separate process, container, or microVM; microVMs go further and give each agent its own kernel

- File system boundaries: The agent can only read and write to designated paths, never system directories

- Network controls: Outbound requests are filtered to an allowlist of approved destinations

- Storage limits: Per-agent quotas prevent runaway disk usage from crashing shared infrastructure

According to Startup Hub's 2026 tiered sandbox research, sandboxed agents reduce security incidents by 90% compared to agents with unrestricted access. Sandboxing is a baseline requirement, not an optional hardening step.

Why AI Agents Need Sandboxes

AI agents are not like traditional software. They generate and execute code at runtime, make decisions based on probabilistic models, and can behave unpredictably when they hit edge cases outside their training data.

Non-deterministic behavior: The same prompt can produce different outputs on each run. An agent that works fine in testing might generate problematic code in production when it hits a new input pattern. Sandboxes contain the blast radius when this happens.

Privilege escalation risks: Agents often need file system access, network connectivity, and shell execution. Without sandboxing, a single vulnerability can cascade into full system compromise. With layered defenses, an agent that escapes its container still hits the next layer.

Third-party code execution: Many AI agent architectures run code from external sources, including user prompts, plugins, and retrieved documents. Sandboxing creates a trust boundary between the agent runtime and untrusted input.

Audit and compliance: Enterprises need to prove what agents did and when. Sandboxes provide natural logging boundaries for every file access, command execution, and network request.

According to Koyeb's sandbox platform comparison, 78% of enterprise AI projects now require sandbox testing before production deployment.

Four Types of Sandbox Isolation

Not all sandboxes provide equal protection. The choice depends on your threat model and performance requirements.

Container-Based Isolation

Containers (Docker, Podman) provide process and file system isolation through Linux namespaces. They share the host kernel but maintain separate user spaces.

Strengths: Fast startup times (under 1 second), low overhead, familiar tooling, wide ecosystem support

Weaknesses: All containers share the host kernel, so a kernel exploit can escape containment; that shared kernel remains exposed as an attack surface

Best for: Development environments and trusted workloads where speed matters more than isolation depth.

gVisor Isolation

gVisor interposes a user-space kernel between the container and the host OS. System calls go through gVisor's Sentry process instead of hitting the host kernel directly.

Strengths: Reduced kernel attack surface, compatible with existing container tooling, no hardware virtualization required

Weaknesses: 10-30% performance overhead on syscall-heavy workloads, some syscall compatibility gaps

Best for: Running untrusted code while keeping container-based workflows intact.
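As a rough sketch of how this looks with Docker, the hypothetical helper below assembles a `docker run` argument list that selects gVisor's `runsc` runtime and layers on other restrictions from this guide (non-root user, read-only root filesystem, resource limits, scoped mounts). It assumes gVisor is installed and registered with Docker under the runtime name `runsc`; the image name and host paths are placeholders.

```python
import shlex


def build_gvisor_run_args(
    image: str,
    input_dir: str,
    output_dir: str,
    memory: str = "2g",
    cpus: str = "1.0",
    user: str = "1000:1000",
) -> list[str]:
    """Assemble a `docker run` argv for a gVisor-backed agent sandbox (sketch).

    Assumes gVisor is installed and registered with Docker as the `runsc` runtime.
    """
    return [
        "docker", "run", "--rm",
        "--runtime=runsc",                       # syscalls go to gVisor's Sentry, not the host kernel
        "--user", user,                          # run as an unprivileged UID:GID
        "--read-only",                           # root filesystem is immutable
        "--tmpfs", "/tmp",                       # private scratch space, discarded on exit
        "--network", "none",                     # no network interfaces besides loopback
        "--memory", memory,                      # memory limit (cgroups)
        "--cpus", cpus,                          # CPU limit (cgroups)
        "--pids-limit", "256",                   # cap process count (fork bombs)
        "--cap-drop", "ALL",                     # drop every Linux capability
        "--security-opt", "no-new-privileges",   # block privilege gain via setuid binaries
        "-v", f"{input_dir}:/input:ro",          # read-only inputs
        "-v", f"{output_dir}:/output",           # scoped writable output directory
        image,
    ]


if __name__ == "__main__":
    argv = build_gvisor_run_args("agent-image:latest", "/data/documents", "/agent-workspaces/agent-123")
    print(shlex.join(argv))  # inspect the command before running it
```

`--network none` is the air-gapped default. Allowlisted egress would instead need a user-defined network plus a filtering proxy or firewall, which this sketch does not set up.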

MicroVM Isolation

MicroVMs (Firecracker, Kata Containers) run a lightweight virtual machine per workload. Each agent gets its own dedicated guest kernel, and cold starts are fast: Firecracker advertises starting user-space code in as little as 125 ms.

Strengths: Strong isolation equivalent to traditional VMs, fast boot, small memory overhead (Firecracker cites less than 5 MiB per microVM)

Weaknesses: Higher per-instance resource usage than containers, more complex orchestration layer

Best for: Production deployments handling real user data or sensitive operations. According to Northflank's sandbox comparison, MicroVM isolation with sub-100ms cold starts is now the standard for production agent deployments.
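For Firecracker specifically, a microVM can be described in a JSON file passed to the `firecracker` binary with `--config-file`. The sketch below generates a minimal configuration: a kernel, a root filesystem, and a fixed vCPU and memory size. The paths are placeholders, and the field names reflect Firecracker's config-file format as we understand it, so check them against the documentation for your Firecracker version.

```python
import json


def firecracker_config(
    kernel_path: str,
    rootfs_path: str,
    vcpus: int = 1,
    mem_mib: int = 512,
) -> dict:
    """Return a minimal Firecracker config-file dict (sketch; verify field names for your version)."""
    return {
        "boot-source": {
            "kernel_image_path": kernel_path,
            # Serial console, reboot via keyboard controller, panic exits immediately, no PCI
            "boot_args": "console=ttyS0 reboot=k panic=1 pci=off",
        },
        "drives": [
            {
                "drive_id": "rootfs",
                "path_on_host": rootfs_path,
                "is_root_device": True,
                "is_read_only": False,
            }
        ],
        # Each agent gets its own small, fixed-size VM with a dedicated guest kernel
        "machine-config": {"vcpu_count": vcpus, "mem_size_mib": mem_mib},
        # No "network-interfaces" entry: the microVM boots with no network device at all
    }


if __name__ == "__main__":
    cfg = firecracker_config("/srv/vmlinux", "/srv/agent-rootfs.ext4")
    # Save as vm.json, then: firecracker --api-sock /tmp/fc.sock --config-file vm.json
    print(json.dumps(cfg, indent=2))
```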

Process-Level Isolation

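Seccomp filters restrict which system calls a process can make, while AppArmor and SELinux enforce mandatory access control over files, capabilities, and other resources. None of them requires full containerization.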

Strengths: Minimal overhead, works as an additional defense-in-depth layer on top of other methods

Weaknesses: Requires careful policy configuration, not a complete solution on its own

Best for: Adding restrictions to existing deployments as a supplementary security layer.

File System Boundaries for Agents

Agents need file access to be useful. They read documents, write outputs, and manage persistent state. The sandbox defines exactly which paths are accessible and what operations are allowed.

Read-Only Mounts

Mount input data and reference files as read-only. The agent can process documents but cannot modify originals or inject content back into source directories.

```yaml
volumes:
  - source: /data/documents
    target: /input
    read_only: true
```

Scoped Write Directories

Give each agent a dedicated output directory with strict quotas. Writes outside this path fail immediately.

```yaml
volumes:
  - source: /agent-workspaces/agent-123
    target: /output
    quota: 10GB
```
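The `quota` key in this configuration is illustrative; how quotas are actually enforced depends on your platform (filesystem project quotas, a size-limited volume, or a storage service). As an application-level sketch, the hypothetical helper below refuses a write when it would push a workspace past its byte budget.

```python
import os
import tempfile
from pathlib import Path


class QuotaExceeded(Exception):
    """Raised when a write would push a workspace past its quota."""


def directory_size(root: Path) -> int:
    """Total bytes in regular files under root (symlinks are not counted)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_file() and not path.is_symlink():
                total += path.stat().st_size
    return total


def write_with_quota(root: Path, relative: str, data: bytes, quota_bytes: int) -> Path:
    """Write data to root/relative unless the workspace would exceed quota_bytes.

    Path confinement is a separate check; this helper only enforces size.
    """
    target = root / relative
    # Overwriting a file frees its old size, so subtract it from the projection
    replaced = target.stat().st_size if target.is_file() and not target.is_symlink() else 0
    projected = directory_size(root) - replaced + len(data)
    if projected > quota_bytes:
        raise QuotaExceeded(f"write would use {projected} bytes; quota is {quota_bytes}")
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(data)
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ws = Path(tmp)
        write_with_quota(ws, "notes.txt", b"a" * 600, quota_bytes=1000)
        try:
            write_with_quota(ws, "big.bin", b"b" * 500, quota_bytes=1000)
        except QuotaExceeded as exc:
            print("rejected:", exc)
```

Because the size check and the write are separate steps, concurrent writers can race past this check. Quotas enforced by the kernel or the storage layer do not have that gap, so treat this as a supplement rather than the primary limit.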

Block System Paths

Never expose /etc, /var, /home, or other system directories. Agents should not read host configuration, SSH keys, environment variables, or user data. As Claude Code's sandboxing documentation notes, filesystem isolation means no home directory access, no SSH keys, and no global configs.
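Mount configuration is the primary control, but an agent-facing file tool can add a second check. The sketch below uses a hypothetical `resolve_inside` helper that resolves a requested path (including `..` segments and symlinks) and rejects anything that lands outside the allowed roots.

```python
import tempfile
from pathlib import Path


class PathNotAllowed(Exception):
    """Raised when a requested path escapes every allowed root."""


def resolve_inside(requested: str, allowed_roots: list[Path]) -> Path:
    """Resolve requested (following symlinks and '..') and require it to sit under an allowed root."""
    candidate = Path(requested)
    for root in allowed_roots:
        root = root.resolve()
        # Relative paths are joined to the root; an absolute path replaces it entirely
        full = (root / candidate).resolve()
        if full.is_relative_to(root):
            return full
    raise PathNotAllowed(f"{requested!r} is outside the sandbox")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        output = Path(tmp) / "output"
        output.mkdir()
        print(resolve_inside("report.md", [output]))  # allowed
        try:
            resolve_inside("../../etc/passwd", [output])  # traversal attempt
        except PathNotAllowed as exc:
            print("blocked:", exc)
```

This check runs at resolution time, so a symlink swapped in afterwards could still redirect the real open; the mount boundary remains the enforcement that actually matters.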

Ephemeral Workspaces

Use temporary storage for intermediate files. When the sandbox terminates, the workspace is wiped clean. This prevents state leakage between runs.
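In Python, the standard library's `tempfile.TemporaryDirectory` gives a simple version of this pattern: each run gets a fresh directory that only the current user can access, and it is deleted when the block exits, even on error. The `run_agent_step` function is a hypothetical stand-in for whatever your agent does.

```python
import tempfile
from pathlib import Path


def run_agent_step(workspace: Path) -> str:
    """Hypothetical agent step that writes intermediate files into its workspace."""
    scratch = workspace / "scratch.txt"
    scratch.write_text("intermediate state")
    return scratch.read_text()


def run_in_ephemeral_workspace() -> tuple[str, Path]:
    # Created via mkdtemp (owner-only permissions) and removed when the block exits
    with tempfile.TemporaryDirectory(prefix="agent-") as tmp:
        workspace = Path(tmp)
        result = run_agent_step(workspace)
    # Here the directory and everything in it has already been deleted
    return result, workspace


if __name__ == "__main__":
    result, path = run_in_ephemeral_workspace()
    print(result, "| workspace still exists:", path.exists())
```

Inside a container, a `tmpfs` mount achieves the same effect at the filesystem level.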

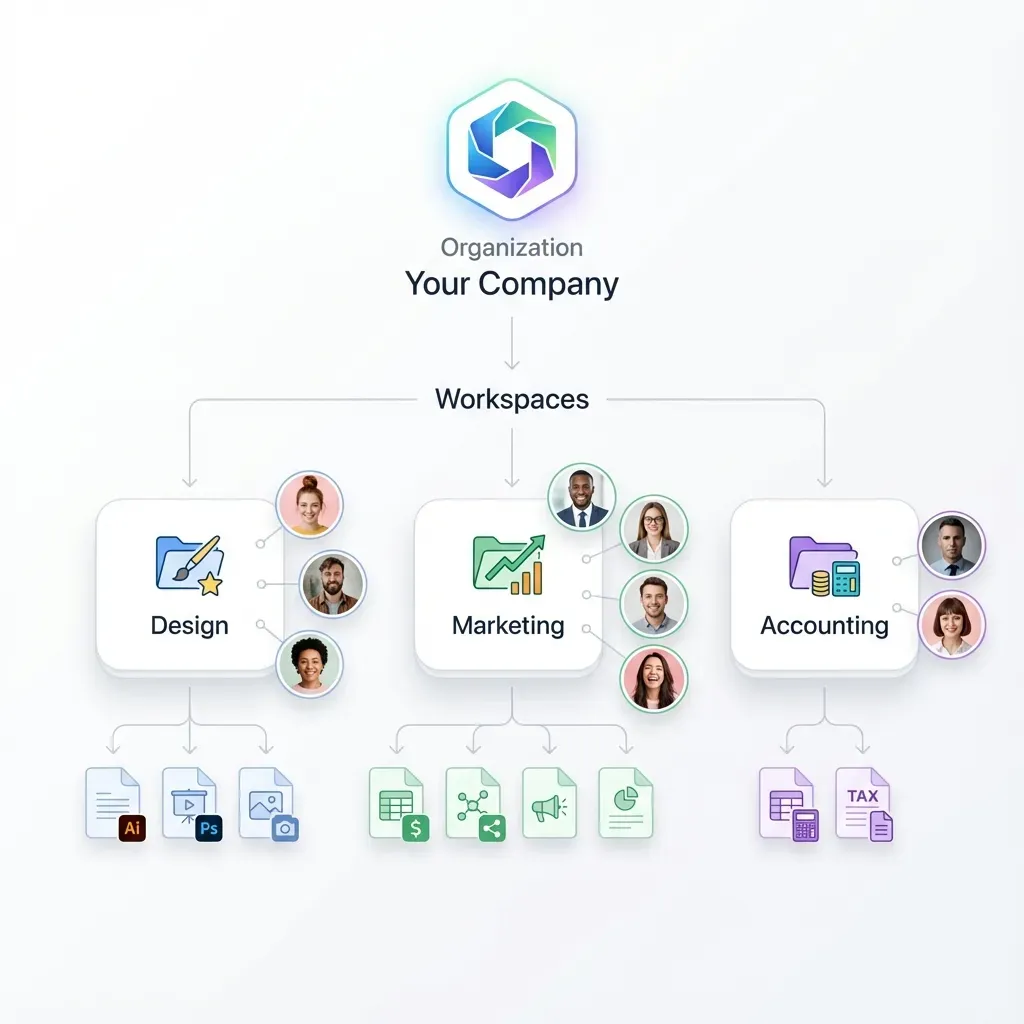

Persistent Agent Storage

For agents that need to retain files across sessions, Fast.io's agent workspaces provide isolated storage with built-in boundaries. Each agent gets its own workspace with configurable permissions and a credit-based quota system. Files belong to the organization, not the agent, so you maintain control even when agents upload and manage their own data. The free agent tier includes 50GB of storage with no credit card required.

Network Controls and Egress Filtering

Unrestricted network access is one of the highest-risk configurations for a sandboxed agent. A compromised or misbehaving agent could exfiltrate data, download malicious payloads, or interact with unintended services.

Default-Deny Egress

Start with all outbound connections blocked. Then explicitly allow only the specific destinations your agent needs.

```yaml
network:
  egress:
    allow:
      - api.fast.io:443
      - api.openai.com:443
    deny: "*"
```

This is the single most important network control. If you do nothing else, implement default-deny egress.
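Enforcement normally happens at the network layer (firewall rules, an egress proxy, or the sandbox platform's network policy), outside the agent's own process. The decision those layers make is simple enough to sketch: an explicit set of host and port pairs, with everything else denied. The `EGRESS_ALLOWLIST` below is a hypothetical mirror of the example configuration in this section.

```python
from urllib.parse import urlsplit

# Mirrors the example config: only these host:port pairs are reachable
EGRESS_ALLOWLIST = {("api.fast.io", 443), ("api.openai.com", 443)}

DEFAULT_PORTS = {"http": 80, "https": 443}


def is_egress_allowed(url: str) -> bool:
    """Default-deny: allow a request only if its exact host and port are allowlisted."""
    parts = urlsplit(url)
    host = (parts.hostname or "").rstrip(".")  # urlsplit already lowercases hostname
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    if not host or port is None:
        return False  # unparseable URL or unknown scheme: deny
    return (host, port) in EGRESS_ALLOWLIST
```

Exact matching is deliberate: `api.openai.com.evil.example` does not match, and any subdomain you need has to be listed explicitly.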

DNS Filtering

Restrict which domains the agent can resolve. This blocks DNS-based data exfiltration (encoding data in DNS queries) and limits the agent's ability to discover new endpoints.
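A filtering resolver applies the same default-deny idea to name lookups. The hypothetical `should_resolve` check below answers only for allowlisted names, so a query like `c2VjcmV0.attacker.example` (data encoded as a subdomain) is refused before it is forwarded anywhere. The allowlist contents are assumptions matching the egress example.

```python
ALLOWED_NAMES = {"api.fast.io", "api.openai.com"}


def should_resolve(qname: str) -> bool:
    """Answer only exact allowlisted names; everything else should get REFUSED or NXDOMAIN."""
    name = qname.rstrip(".").lower()
    # Reject malformed names outright (DNS limits: labels of 1-63 chars, names up to 253 chars)
    if not name or len(name) > 253:
        return False
    if any(not 0 < len(label) <= 63 for label in name.split(".")):
        return False
    return name in ALLOWED_NAMES
```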

Request Logging

Log every network request the agent makes. Capture timestamps, destination addresses, HTTP methods, and response codes. You will need this data for post-incident investigation.
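One way to keep these records searchable is to emit one JSON object per request. The field names in this sketch are our own choice, not a standard schema.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def network_log_record(method: str, url: str, status: Optional[int], allowed: bool) -> str:
    """Serialize one outbound request as a JSON line (hypothetical schema)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        "method": method.upper(),
        "destination": url,
        "status": status,    # None when the request was blocked before it was sent
        "allowed": allowed,  # result of the egress policy decision
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(network_log_record("get", "https://api.openai.com/v1/models", 200, True))
    print(network_log_record("post", "https://evil.example.com/upload", None, False))
```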

Air-Gapped Sandboxes

For the most sensitive workloads, run sandboxes with zero network access. Load input data before execution begins, and extract outputs through a controlled file-based channel after the agent finishes.

The agent-infra/sandbox project demonstrates a practical implementation combining browser, shell, and file isolation with network controls in a single container.

Building a Tiered Sandbox Strategy

No single sandbox configuration works for every use case. The right approach matches isolation strength to risk level across three tiers.

Development Sandbox (Tier 1)

- Isolation: Container with gVisor

- Data: Synthetic or anonymized only

- Refresh cycle: Daily

- Purpose: Rapid iteration during agent development

Developers get fast feedback loops. Security is lighter because no real data is at risk. Startup times under 1 second keep iteration fast.

Staging Sandbox (Tier 2)

- Isolation: MicroVM (Firecracker)

- Data: Sampled production data with PII redacted

- Refresh cycle: Weekly

- Purpose: Integration testing with realistic data

This tier catches issues that only appear with production-like data patterns. Test your agent's file handling, multi-agent coordination, and storage behavior here before promoting to production.

Production Sandbox (Tier 3)

- Isolation: MicroVM with hardware isolation (Intel TDX or AMD SEV)

- Data: Full production access, scoped to the agent's domain

- Refresh cycle: Persistent

- Purpose: Running live workloads

Maximum isolation for agents handling real user data. All actions are logged and auditable. File locks prevent conflicts when multiple agents access shared files at the same time.

For agents that need persistent storage across sandbox restarts, ephemeral local storage does not work. Cloud-based storage like Fast.io keeps agent data available regardless of which sandbox instance handles the next request.
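The three tiers can also be captured as data, so that tooling picks isolation settings from a workload's risk level instead of ad hoc choices. The `SandboxTier` structure and the `tier_for` rule below are a hypothetical illustration using the tier values from this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SandboxTier:
    name: str
    isolation: str
    data: str
    refresh: str


TIERS = {
    1: SandboxTier("development", "container + gVisor", "synthetic or anonymized", "daily"),
    2: SandboxTier("staging", "Firecracker microVM", "sampled production, PII redacted", "weekly"),
    3: SandboxTier("production", "microVM + Intel TDX / AMD SEV", "full production, domain-scoped", "persistent"),
}


def tier_for(uses_real_data: bool, serves_live_traffic: bool) -> SandboxTier:
    """Pick the lightest tier that still matches the workload's risk (illustrative rule)."""
    if serves_live_traffic:
        return TIERS[3]
    if uses_real_data:
        return TIERS[2]
    return TIERS[1]


if __name__ == "__main__":
    print(tier_for(uses_real_data=True, serves_live_traffic=False))
```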

Sandbox Monitoring and Observability

A sandbox without monitoring gives you no visibility into what went wrong. You need to see what agents do, when they do it, and how many resources they use.

Execution Logging

Capture each command the agent runs:

- Command text and arguments

- Working directory at time of execution

- Exit code (success or failure)

- Stdout/stderr output (truncated for large results)

- Wall-clock execution duration
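A thin wrapper around `subprocess.run` can capture each of these fields. This sketch assumes commands are passed as argument lists (no shell) and that stored output is truncated to a hypothetical `MAX_OUTPUT` characters.

```python
import subprocess
import sys
import time
from dataclasses import asdict, dataclass

MAX_OUTPUT = 4096  # hypothetical truncation limit for stored stdout/stderr


@dataclass
class ExecutionRecord:
    argv: list[str]
    cwd: str
    exit_code: int
    stdout: str
    stderr: str
    duration_s: float


def _truncate(text: str, limit: int = MAX_OUTPUT) -> str:
    if len(text) <= limit:
        return text
    return text[:limit] + f"... [{len(text) - limit} chars truncated]"


def run_logged(argv: list[str], cwd: str, timeout: float = 60.0) -> ExecutionRecord:
    """Run a command without a shell and record what happened."""
    start = time.monotonic()  # monotonic clock: elapsed wall time, immune to clock changes
    proc = subprocess.run(argv, cwd=cwd, capture_output=True, text=True, timeout=timeout)
    duration = time.monotonic() - start
    return ExecutionRecord(
        argv=list(argv),
        cwd=cwd,
        exit_code=proc.returncode,
        stdout=_truncate(proc.stdout),
        stderr=_truncate(proc.stderr),
        duration_s=round(duration, 3),
    )


if __name__ == "__main__":
    record = run_logged([sys.executable, "-c", "print('hello')"], cwd=".")
    print(asdict(record))
```

If the timeout fires, `subprocess.run` raises `TimeoutExpired`; a production wrapper would catch it and log a record for the killed command as well.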

Resource Tracking

Monitor CPU, memory, and I/O usage per sandbox instance. Set alerts for sustained high CPU (potential infinite loops), memory approaching limits (potential OOM kills), and unusual I/O patterns (potential data exfiltration via disk).

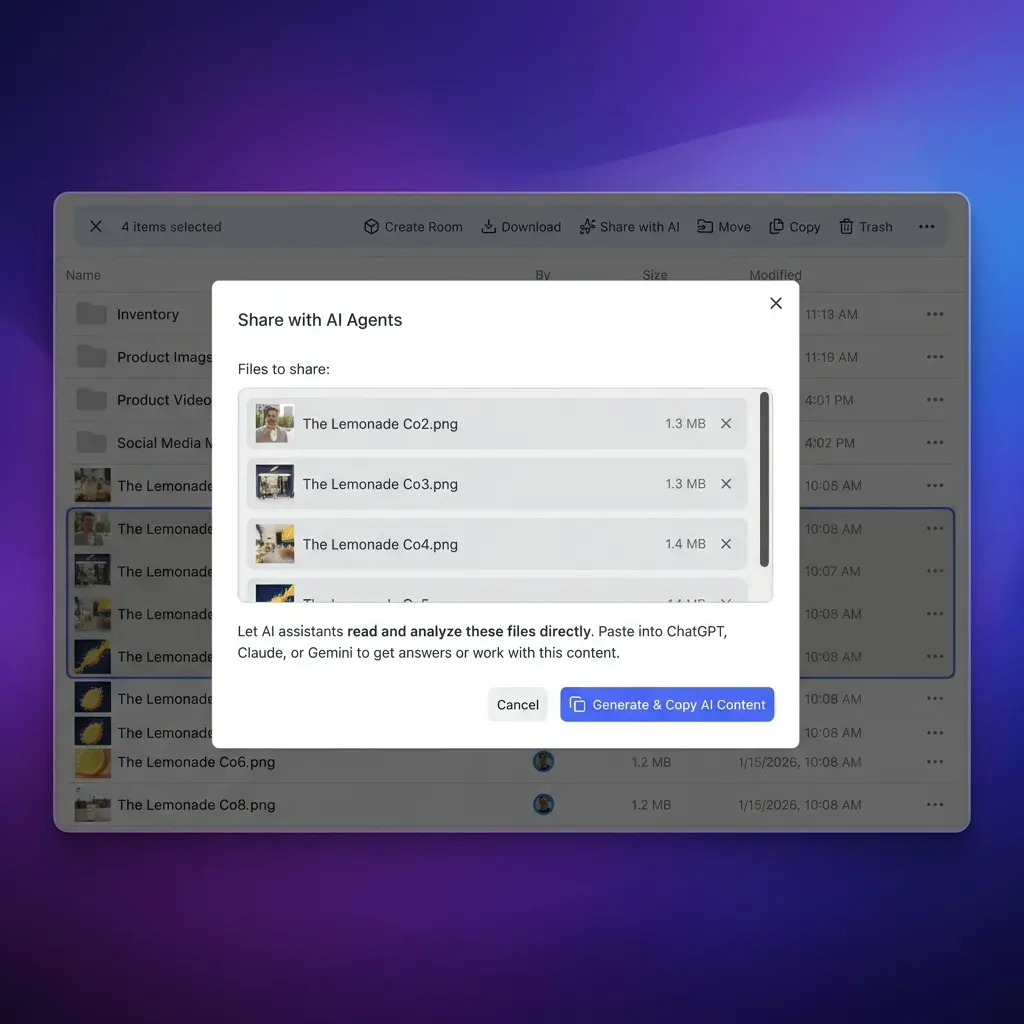

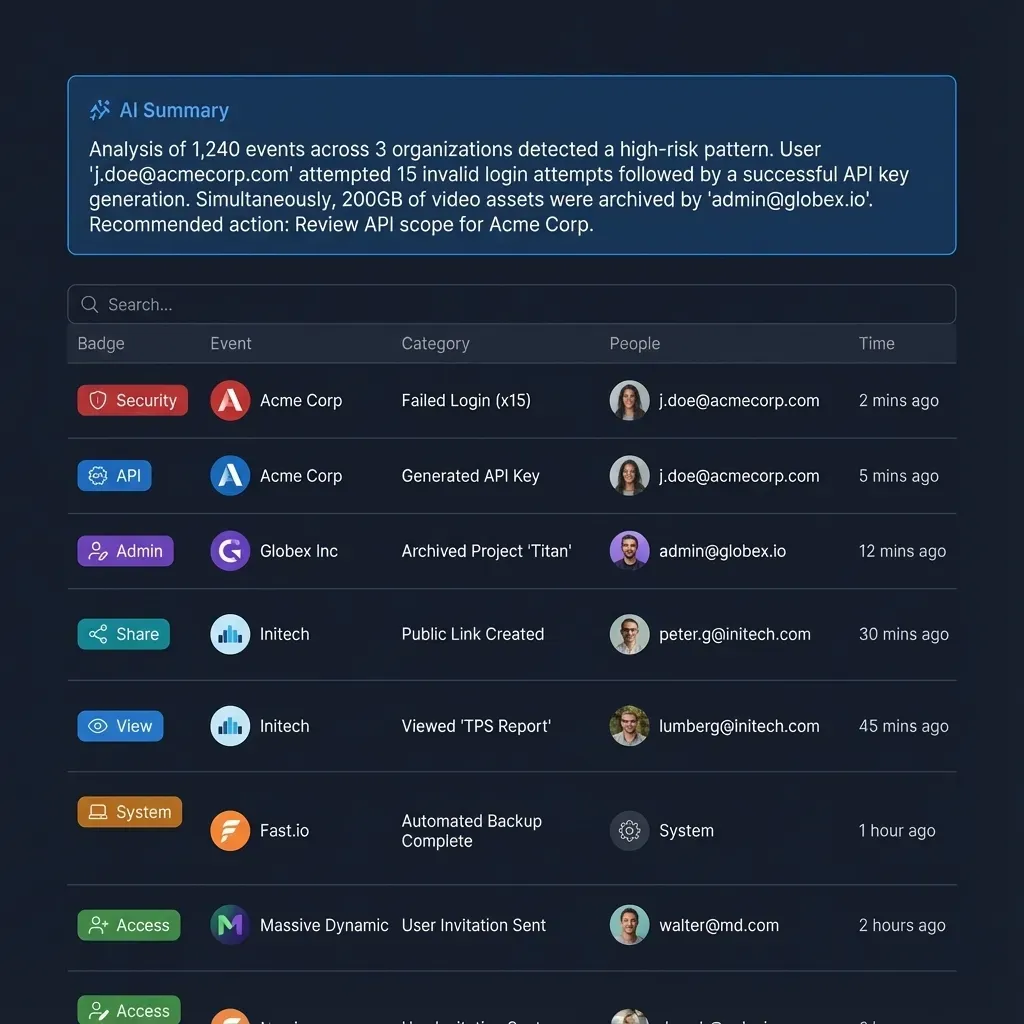

Access Auditing

Log all file reads, writes, and network requests. This gives you a full record for debugging agent behavior and meeting compliance requirements.

Fast.io's audit logs track agent activity across workspaces automatically. You can see which files an agent accessed, when, and what changes it made, without building custom logging infrastructure.

Alerting Thresholds

Configure alerts for these conditions:

- CPU usage above 80% for more than 60 seconds

- Memory usage above 90% of quota

- Network requests to non-allowlisted destinations (should never happen with default-deny)

- Storage usage above 85% of quota

- Agent execution time exceeding expected bounds
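These thresholds translate directly into a rule check over metric samples. In the sketch below, the `Sample` format and the `max_runtime_s` bound are hypothetical; the percentages match the list above. The CPU rule fires only when samples cover the last 60 seconds and every one of them is above 80%, so a brief spike does not trigger it.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float            # seconds since sandbox start
    cpu_pct: float
    mem_pct: float      # percent of memory quota in use
    storage_pct: float  # percent of storage quota in use


def check_alerts(samples: list[Sample], blocked_requests: int,
                 runtime_s: float, max_runtime_s: float) -> list[str]:
    alerts = []
    if samples:
        latest = samples[-1]
        window = [s for s in samples if s.t >= latest.t - 60]
        # Sustained CPU: the window must span 60 s and every sample in it must exceed 80%
        if latest.t - window[0].t >= 60 and all(s.cpu_pct > 80 for s in window):
            alerts.append("cpu_sustained_above_80pct")
        if latest.mem_pct > 90:
            alerts.append("memory_above_90pct_of_quota")
        if latest.storage_pct > 85:
            alerts.append("storage_above_85pct_of_quota")
    if blocked_requests > 0:
        alerts.append("request_to_non_allowlisted_destination")
    if runtime_s > max_runtime_s:
        alerts.append("runtime_exceeded_expected_bound")
    return alerts
```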

Common Sandbox Mistakes

Sandboxing fails when the implementation has gaps. These mistakes show up most in production incidents.

Running agents as root: Even inside a sandbox, root privileges increase the blast radius of any escape. Always create a dedicated non-root user with minimal permissions for agent execution.

Overly permissive volume mounts: Mounting the entire host file system defeats the purpose of sandboxing. Mount only the specific directories the agent needs, with the narrowest permissions possible (read-only where feasible).

Missing resource limits: Without CPU and memory limits (typically enforced through cgroups), a single runaway agent can starve other processes on the host. Set explicit limits for each sandbox instance.
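Container runtimes set these limits through cgroups (for example Docker's `--memory` and `--cpus` flags). For a plain subprocess, the standard library's `resource` module offers a lighter per-process approximation through rlimits. This sketch is Unix-only, the limit values are placeholders, and `preexec_fn` is not safe to use from multithreaded parents.

```python
import resource
import subprocess
import sys


def _limit_child(cpu_seconds: int, memory_bytes: int):
    """Build a preexec_fn that applies rlimits in the child process just before exec."""
    def apply() -> None:
        # CPU time: the kernel terminates the process once the limit is reached
        resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))
        # Virtual address space: larger allocations fail (MemoryError in Python)
        resource.setrlimit(resource.RLIMIT_AS, (memory_bytes, memory_bytes))
    return apply


def run_limited(argv: list[str], cpu_seconds: int = 5,
                memory_bytes: int = 512 * 1024**2, timeout: float = 30.0):
    """Run argv with per-process CPU and memory ceilings."""
    return subprocess.run(
        argv,
        preexec_fn=_limit_child(cpu_seconds, memory_bytes),
        capture_output=True,
        text=True,
        timeout=timeout,  # wall-clock backstop: CPU limits don't catch a process that sleeps
    )


if __name__ == "__main__":
    spin = run_limited([sys.executable, "-c", "while True: pass"], cpu_seconds=1)
    print("runaway loop exit status:", spin.returncode)  # negative means killed by a signal
```

Rlimits apply per process, so a child that forks can multiply its footprint; cgroup limits cover the whole sandbox and remain the stronger control.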

No network egress control: Allowing all outbound connections is a data exfiltration risk. Default to deny-all, then allow specific destinations based on what the agent actually needs.

Shared temporary directories: Multiple agents writing to the same /tmp leads to conflicts and information leakage between sandbox instances. Give each sandbox its own isolated temp directory.

Missing audit trails: If you can't prove what an agent did after the fact, you can't debug issues or satisfy compliance requirements. Log everything. Use immutable, append-only logs that agents can't modify or delete.
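Immutability ultimately comes from where the log lives (a separate service, write-once storage, or a host path the agent cannot mount). Hash chaining adds tamper evidence on top: each entry includes a hash of the previous one, so editing, reordering, or deleting an earlier entry breaks verification. A minimal sketch, assuming events are JSON-serializable dicts:

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev, "hash": _digest(prev, event)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute the chain; any edited, reordered, or removed earlier entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```

A chain alone cannot reveal entries cut from the end, so periodically record the latest hash somewhere outside the sandbox.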

Ignoring storage boundaries: Agents without storage quotas can fill disks and crash infrastructure. Always set per-agent limits, whether through cgroups, filesystem quotas, or a credit-based system like Fast.io's 5,000 monthly credits on the free tier.

Frequently Asked Questions

What is an AI agent sandbox?

An AI agent sandbox is an isolated environment where agents execute code, access files, and perform actions without affecting production systems. It has four layers of protection: compute isolation (containers or microVMs), file system boundaries, network controls, and storage limits.

How do you test AI agents safely?

Test AI agents safely using a tiered sandbox strategy. Use lightweight containers with synthetic data for development, MicroVMs with sampled production data for staging, and fully isolated MicroVMs with hardware-backed isolation for production. Log all agent actions and set resource quotas at every tier.

Why do AI agents need sandboxes?

AI agents need sandboxes because they generate and execute code at runtime, behave non-deterministically, and often process untrusted input from users or external sources. Sandboxes contain the blast radius of errors or security exploits, provide audit trails for compliance, and prevent privilege escalation beyond the agent's intended scope.

What is the best isolation method for AI agents?

MicroVMs like Firecracker and Kata Containers provide the strongest isolation of the options covered here by running a dedicated kernel per workload. Firecracker starts user-space code in as little as 125 ms and adds less than 5 MiB of memory overhead per instance. For development or lower-risk scenarios, gVisor offers a good balance of security and performance with 10-30% overhead.

How do you handle file storage in an AI agent sandbox?

Mount input data as read-only, provide a quota-limited output directory, block all system paths, and use ephemeral storage for temporary files. For persistent storage across sessions, use cloud-based solutions like Fast.io, which provides agent workspaces with built-in quotas, organization-owned files, and 50GB free storage.
