How to Integrate Prefect with AI Agents

Prefect brings AI agents into reliable data pipelines. Use it to run agent workflows with retries, caching, and scheduling, and pair it with Fast.io for persistent storage that survives flow failures. Together they address two common problems: ephemeral data and coordination failures. Agents produce files, summaries, or datasets; Prefect makes sure they run on schedule; and Fast.io MCP tools let agents upload outputs to shared workspaces where humans review them. This guide covers setup, code examples, persistence patterns, deployment, scaling, troubleshooting, and comparisons, with step-by-step instructions and full code.

What Is Prefect Orchestration for AI Agents?

Prefect is a workflow orchestration platform written in Python. It transforms ordinary Python functions into reliable, schedulable pipelines with built-in retry logic, caching, and real-time monitoring. The platform handles the complex task of coordinating multiple steps, managing dependencies, and ensuring tasks complete successfully even when individual components fail.

AI agents create unique challenges for workflow orchestration. Large language models can hallucinate, API endpoints can time out, and network issues can interrupt operations at any point. Traditional scripting approaches leave you manually restarting failed processes and hunting through console logs to understand what went wrong. Prefect addresses these problems by treating each agent interaction as a task with explicit retry behavior, state management, and comprehensive logging.

The Prefect community has grown to nearly 30,000 engineers, with companies like Cash App relying on it for production machine learning pipelines. This adoption reflects the platform's maturity and reliability in handling real-world AI workloads at scale.

Why choose Prefect over cron or Apache Airflow for AI agent workflows? It's the Python-native control flow. Prefect tasks can make decisions based on agent outputs, branching dynamically rather than following rigid DAG definitions. When an agent returns unexpected results, Prefect can conditionally execute different downstream tasks. This flexibility matches how AI agents actually work in production.
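A minimal sketch of the branching idea, written as plain Python functions so it runs without a Prefect installation. In a real flow each step would be decorated with `@task` and the router with `@flow`. The function names and the `confidence` field are hypothetical.

```python
def summarize(result: dict) -> str:
    """Happy path: the agent returned a usable answer."""
    return f"summary: {result['text']}"


def escalate(result: dict) -> str:
    """Fallback path: send low-confidence output to a human."""
    return f"needs review: {result['text']}"


def route_agent_output(result: dict, threshold: float = 0.7) -> str:
    """Pick the downstream step at run time from the agent's own output."""
    # Ordinary `if` statements are the branching mechanism; no graph edges
    # need to be declared ahead of time.
    if result.get("confidence", 0.0) >= threshold:
        return summarize(result)
    return escalate(result)


print(route_agent_output({"text": "Q1 revenue up", "confidence": 0.9}))
print(route_agent_output({"text": "unclear data", "confidence": 0.2}))
```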

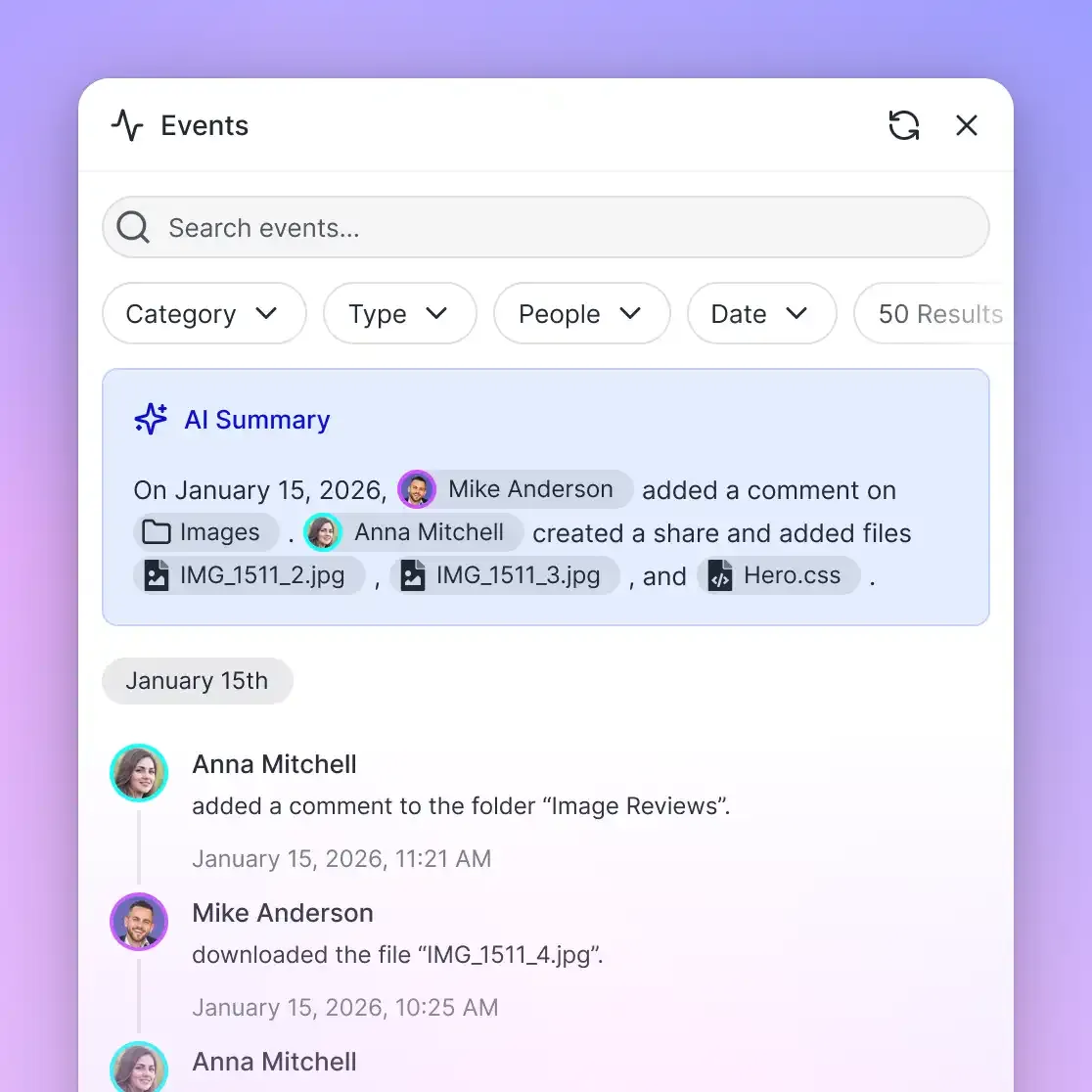

Event-driven triggers in Prefect also align well with agent needs. Rather than running on fixed schedules, flows can react to external events such as new data arriving, webhooks firing, or files being uploaded. Fast.io webhooks integrate naturally here, triggering Prefect flows when agents complete work and save outputs to workspaces.

The combination of Prefect for orchestration and Fast.io for persistent storage covers the full agent workflow. Prefect manages the execution logic while Fast.io provides durable workspaces where agent outputs survive flow failures, enabling human review and subsequent agent operations.

Helpful references: Fast.io Workspaces, Fast.io Collaboration, and Fast.io AI.

Core Benefits of Prefect for Agent Workflows

Prefect brings strong reliability to AI agent workflows. Agent runs fail frequently because of hallucinated or malformed LLM output, API errors, rate limits, and network issues. Without orchestration, you spend hours manually restarting failed jobs and piecing together what went wrong from scattered log files. Prefect handles this with configurable retry policies: retries are opt-in per task (a task does not retry unless you ask it to), delays can follow an exponential backoff, and Prefect can recover state so partial results are not lost.
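To make the backoff behavior concrete, here is a stdlib-only sketch of an exponential delay schedule with optional jitter. It illustrates the idea rather than reproducing Prefect's internals; `backoff_delays` and its parameters are names chosen for this example.

```python
import random
from typing import List, Optional


def backoff_delays(retries: int, base_seconds: float = 10.0,
                   jitter_factor: float = 0.0,
                   seed: Optional[int] = None) -> List[float]:
    """Return one delay per retry, doubling each time: base, 2*base, 4*base, ...

    With jitter_factor > 0, each delay is stretched by a random amount of up
    to that fraction, which spreads out retries coming from many workers.
    """
    rng = random.Random(seed)
    delays = []
    for attempt in range(retries):
        delay = base_seconds * (2 ** attempt)
        delay += delay * jitter_factor * rng.random()
        delays.append(delay)
    return delays


print(backoff_delays(3))  # [10.0, 20.0, 40.0]
```

In Prefect itself, a comparable schedule is passed through the task's `retry_delay_seconds` option, which accepts a single number, a list of delays, or a callable.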

Dynamic task mapping makes Prefect a good fit for agent workflows. When an agent produces a list of items, Prefect can parallelize processing across that list by calling a task's `.map()` method. Each item gets its own task run, complete with independent retry logic and logging. The same code handles a handful of items or thousands.
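The fan-out idea can be sketched with the standard library's thread pool, used here only as a stand-in so the snippet runs without Prefect. With Prefect, the equivalent is calling `.map(items)` on a task, which returns one future per item. `score_item` is a hypothetical per-item step.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def score_item(item: str) -> Dict[str, object]:
    """Hypothetical per-item agent step; each call is independent."""
    return {"item": item, "length": len(item)}


def fan_out(items: List[str], max_workers: int = 4) -> List[Dict[str, object]]:
    """Run score_item once per item in parallel, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(score_item, items))


print(fan_out(["alpha", "be", "gamma"]))
```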

Event-driven triggers align perfectly with agent use cases. Prefect can start flows when Fast.io detects new file uploads, when webhooks fire from other systems, or on custom events. This reactive model means your agents respond to new data within seconds rather than waiting for cron-based schedules.

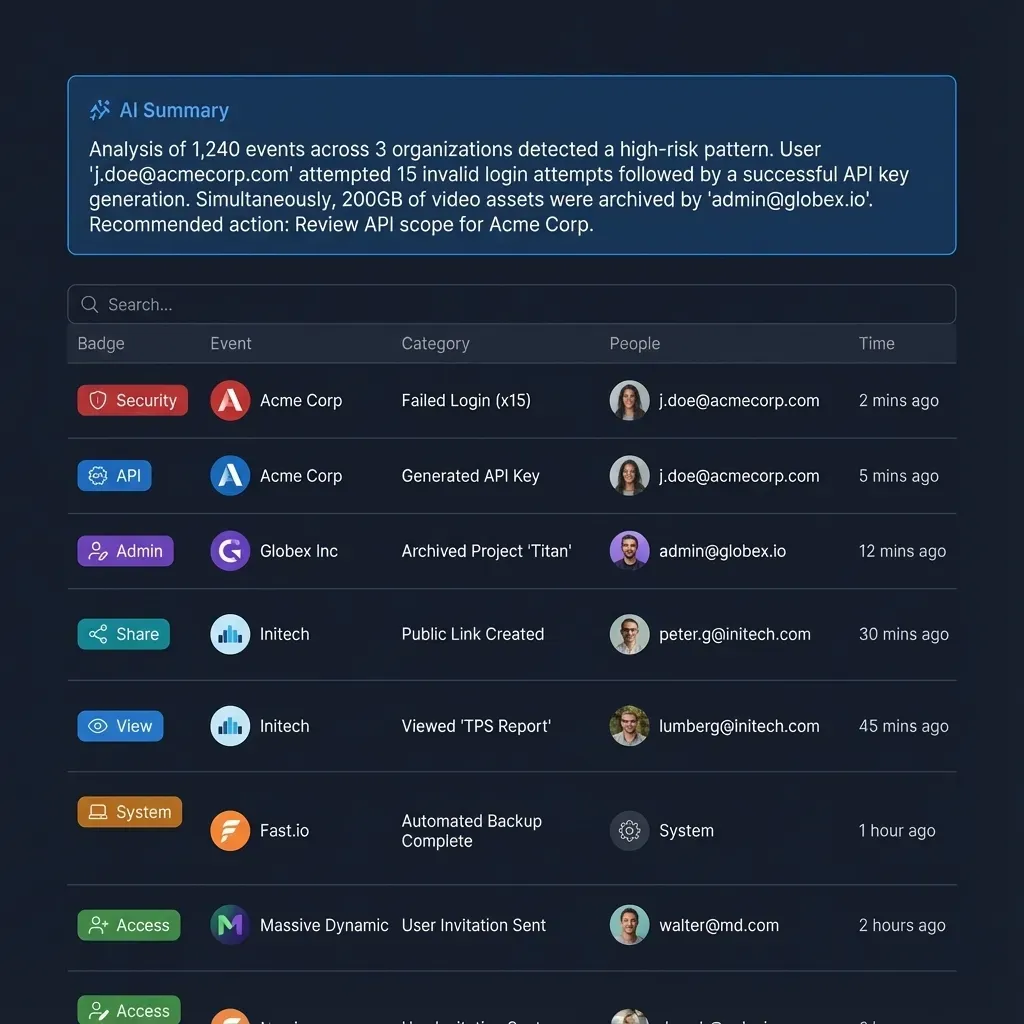

Visibility into agent workflows beats custom scripts. The Prefect UI displays directed acyclic graphs of your flows, real-time logs for each task, artifact previews, and cost tracking. When something fails, you can trace the error to the specific task that caused it, see the exact input parameters, and understand what the agent returned before the failure.

Fast.io MCP adds 251 tools for agent persistence. Agents can upload files, list directories, query RAG-powered search, and acquire file locks for safe concurrent access. The free agent tier includes 50GB of storage and a monthly credit allowance, with no credit card required.

Outputs persist in shared workspaces where humans can review, edit, and build upon agent results. The ownership transfer feature lets agents create workspaces for clients and transfer control while retaining admin access for ongoing maintenance.

| Benefit | Without Prefect | With Prefect + Fast.io |
|---|---|---|
| Reliability | Manual restarts required | Automatic retries, state recovery |
| Visibility | Scattered console logs | Rich UI, task tracing |
| Persistence | Ephemeral, lost on failure | Durable workspaces with RAG |
| Scale | Local process limits | Cloud workers, parallel mapping |
| Triggers | Cron-only scheduling | Event-driven, webhooks |

This architecture scales from development laptops to production clusters without fundamental changes to your flow code.

Step-by-Step Setup for AI Agent Prefect Integration

Follow these steps to integrate an AI agent with Prefect. They assume a Python 3 version supported by your Prefect release, an OpenAI API key, and a Fast.io account.

Step 1: Install dependencies

Create a virtual environment, then install Prefect, the OpenAI client, and OpenClaw.

    python -m venv prefect-agent-env
    source prefect-agent-env/bin/activate   # macOS/Linux
    # or on Windows: prefect-agent-env\Scripts\activate
    pip install prefect openai 'clawhub[fastio]'
    prefect profile create agent-workflow
    prefect profile use agent-workflow

Step 2: Set up Fast.io

Sign up for the Fast.io agent tier, which is free and includes 50GB of storage. Get an API key from settings.

Set the environment variables:

    export FASTIO_API_KEY=your_key
    export OPENAI_API_KEY=your_openai_key

Install the skill:

    clawhub install dbalve/fast-io

Step 3: Write your first agent flow

Create agent_flow.py. Wrap agent calls in @task with retries, and use the Fast.io MCP skill for persistence.

    from openai import OpenAI
    from prefect import flow, task
    from prefect.runtime import task_run

    from clawhub.skills import fast_io  # OpenClaw MCP tool


    @task(retries=3, retry_delay_seconds=10)
    def generate_content(prompt: str) -> str:
        """Agent task: generate a report and save it to Fast.io."""
        client = OpenAI()  # reads OPENAI_API_KEY from the environment
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": prompt}],
        )
        content = response.choices[0].message.content

        # Persist the output under a path that is unique per task run
        path = f"reports/{task_run.id}.md"
        fast_io.upload_file(path, content.encode("utf-8"))
        return fast_io.get_file_url(path)


    @task
    def notify_team(url: str) -> None:
        """Optional: share the link via email or Slack."""
        print(f"Report at {url} - review in Fast.io workspace")


    @flow(name="daily-agent-report")
    def agent_pipeline():
        url = generate_content("Analyze Q1 sales: trends, anomalies, recommendations")
        notify_team(url)


    if __name__ == "__main__":
        agent_pipeline()

Step 4: Test locally

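    python agent_flow.py

Check the run in the Prefect UI (with a local server started by `prefect server start`, the default address is http://127.0.0.1:4200), then look in the Fast.io workspace for the new file under reports/.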

Step 5: Deploy to Prefect Cloud

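Log in from the shell:

    prefect cloud login

Then create the deployment from Python. The work pool and image names are placeholders for your own:

    agent_pipeline.deploy(
        name="daily-report-prod",
        work_pool_name="my-docker-pool",        # placeholder: an existing work pool
        image="my-registry/agent-flow:latest",  # placeholder: image containing the flow code
        cron="0 9 * * 1-5",
    )

This runs on weekdays at 9 AM. Monitor runs at app.prefect.cloud.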
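The cron string `0 9 * * 1-5` reads as minute 0, hour 9, any day of the month, any month, Monday through Friday. The check below spells that out in plain Python. It is written for this one expression only and is not a general cron parser; the function name is made up for the example.

```python
from datetime import datetime


def matches_weekday_9am(moment: datetime) -> bool:
    """True when `moment` would fire the cron expression '0 9 * * 1-5'."""
    is_weekday = moment.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and moment.hour == 9 and moment.minute == 0


print(matches_weekday_9am(datetime(2026, 3, 2, 9, 0)))  # a Monday
print(matches_weekday_9am(datetime(2026, 3, 7, 9, 0)))  # a Saturday
```

Which clock "9" refers to depends on the timezone configured for the schedule, so set it explicitly if your team is not on UTC.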

Step 6: Trigger manually or through the API

Start runs on demand through the Prefect API, or use Fast.io webhooks to trigger them on file changes.

Persistent Storage Patterns with Fast.io MCP

Many orchestration platforms treat storage as an afterthought, leaving agent outputs in ephemeral containers that disappear when flows complete. Fast.io workspaces solve this by providing persistent storage that survives Prefect retries, worker crashes, and flow restarts. This persistence enables patterns that would otherwise require significant custom infrastructure.

Key Patterns for Agent Persistence:

Artifact Upload Pattern: Save every agent output to a Fast.io workspace immediately after generation. Use the Prefect run ID in the file path to create automatic versioning. When flows retry, new run IDs generate new files rather than overwriting previous attempts. This creates a complete audit trail of every agent execution.

RAG Query Pattern: Enable Intelligence Mode on workspaces containing agent outputs. Once enabled, Fast.io automatically indexes uploaded files and makes them searchable through natural language queries. When asking "Summarize the anomalies in the Q1 report," Fast.io returns answers with citations pointing to the specific source files. This turns raw agent outputs into queryable knowledge bases.

File Lock Pattern: Multi-agent systems often have multiple workers processing the same workspace. Without coordination, concurrent writes can corrupt data. The acquire_lock and release_lock MCP tools serialize access to critical sections. Agents acquire locks before modifying shared data structures, ensuring safe concurrent execution.

Webhook Trigger Pattern: Configure Fast.io webhooks to notify Prefect when new files arrive. This creates a reactive pipeline where agent outputs automatically trigger downstream analysis flows. Rather than polling for new data, your system responds within seconds of file creation.

Ownership Transfer Pattern: When agents build deliverables for clients, the ownership transfer feature provides a clean handoff. Agents create workspaces, populate them with outputs, and transfer ownership to human clients. The agent retains admin access for ongoing maintenance while the client gains full control. This pattern works well for agency workflows where agents produce client deliverables.
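For the File Lock Pattern, wrapping acquire and release in a context manager guarantees the lock is released even when the agent step raises. The sketch assumes a client exposing `acquire_lock(path)` and `release_lock(lock)` as named above; `InMemoryLockClient` and `locked` are hypothetical names, and the in-memory client exists only so the example runs without Fast.io.

```python
from contextlib import contextmanager


class InMemoryLockClient:
    """Hypothetical stand-in for a client with acquire_lock/release_lock."""

    def __init__(self):
        self.held = set()

    def acquire_lock(self, path: str) -> str:
        if path in self.held:
            raise RuntimeError(f"{path} is already locked")
        self.held.add(path)
        return path  # the lock handle is just the path in this stand-in

    def release_lock(self, lock: str) -> None:
        self.held.discard(lock)


@contextmanager
def locked(client, path: str):
    """Hold the lock on `path` for the duration of the with-block."""
    lock = client.acquire_lock(path)
    try:
        yield lock
    finally:
        client.release_lock(lock)  # runs on success and on exceptions


client = InMemoryLockClient()
with locked(client, "data/raw.csv"):
    print("locked:", sorted(client.held))
print("after:", sorted(client.held))
```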

Multi-Agent Pipeline Example

A complete agent pipeline might include three distinct agents: a data extraction agent that pulls information from external sources, an analysis agent that processes raw data into insights, and a review agent that summarizes findings for human consumption. Each agent writes outputs to the shared workspace, and downstream agents can query previous outputs through RAG.

    from prefect import flow, task

    from clawhub.skills import fast_io


    @task
    def extract_data(source_url: str) -> str:
        # Simulate an ETL agent
        data = "sales_data_2026_q1.csv content"
        path = "data/raw.csv"
        fast_io.upload_file(path, data.encode())
        return path


    @task
    def analyze(path: str) -> str:
        lock = fast_io.acquire_lock(path)
        try:
            # Agent analysis
            insights = "Top product: Widget A up 25%, Region X down 10%"
            summary_path = "analysis/summary.md"
            fast_io.upload_file(summary_path, insights.encode())
            return summary_path
        finally:
            fast_io.release_lock(lock)


    @task
    def rag_review(summary_path: str) -> str:
        # Use Fast.io RAG
        rag_query = fast_io.query_files("Key insights?", paths=[summary_path])
        fast_io.upload_file("review/rag.md", rag_query.encode())
        return "review complete"


    @flow
    def pipeline():
        raw = extract_data("sales_source")
        summary = analyze(raw)
        rag_review(summary)

Deploy this pipeline with pipeline.deploy(). All artifacts accumulate in a single workspace where humans can join, browse outputs, and chat with RAG to explore results. This gives orchestrated agents the persistent, coordinated storage that the orchestrator alone does not provide.

Deployment, Monitoring, and Scaling

Deploy flows to Prefect Cloud for production environments. The free tier accommodates small teams and development work, while enterprise plans scale to handle millions of monthly runs. The transition from local development to cloud deployment requires minimal code changes.

Deployment Steps:

Authenticate: Run `prefect cloud login` to connect your local environment to Prefect Cloud. This links your local flow definitions to the cloud execution environment.

Create Work Pool: Work pools determine where flows execute. The process worker type works well for lightweight agent tasks. For GPU-intensive inference, choose Kubernetes or Docker workers that can provision the necessary compute resources.

Deploy Flow: Call `flow.deploy(name="prod-run", work_pool_name=..., image=...)` to create a production deployment. Specify the work pool, schedule, and any environment variables needed at runtime.

Configure Scheduling: Prefect supports cron expressions, interval-based scheduling, and RRule for complex recurrence patterns. Schedule flows for business hours, weekdays only, or specific dates matching your operational needs.

Set Up Automations: Automations trigger flows based on events rather than schedules. Configure triggers for 'file-uploaded', 'task-failed', or custom events from Fast.io webhooks.

Monitoring in Production:

The Prefect UI provides full monitoring. The runs dashboard shows execution history, success rates, and duration metrics. Each flow run displays detailed logs from every task, making it easy to spot where failures occurred and what inputs caused the issue.

Artifact tracking lets you preview outputs directly in the UI. When agents generate reports, images, or datasets, Prefect stores references to these outputs and displays previews without requiring you to navigate to Fast.io.

Cost tracking estimates compute spend per flow run, helping you understand the economics of your agent operations. This becomes valuable as agent usage scales across the organization.

Set up alerts through Slack or email notifications for failure events. Critical flows can trigger immediate alerts while lower-priority flows might use daily summary emails.

Scaling Considerations:

Work pool concurrency limits control how many flow runs execute simultaneously. Set appropriate limits based on your API rate limits and downstream system capacity. For GPU-intensive agent workloads, auto-scaling configurations can provision additional workers during peak demand and scale down during quiet periods.

Fast.io Webhook Integration:

Configure Fast.io to POST to the Prefect API whenever files change in your workspaces. This creates event-driven agent pipelines that respond to new data within seconds rather than waiting for scheduled runs.

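Example webhook trigger flow (analyze_new_file is a placeholder for your own analysis task):

    from prefect import flow, task

    @task
    def analyze_new_file(path: str) -> None:
        print(f"Analyzing {path}")

    @flow
    def webhook_trigger(event: dict):
        if event.get("type") == "file_uploaded":
            analyze_new_file(event["path"])

Set the Prefect webhook URL in Fast.io workspace settings to enable this integration. In Prefect Cloud, a webhook converts the incoming request into an event, and an automation then starts the deployment and passes payload fields in as flow parameters. The webhook payload includes the file path, workspace ID, and event type, providing everything needed to route the event to the appropriate analysis flow.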
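Routing on event type can be kept separate from Prefect so it is easy to unit test. This sketch assumes the payload is a dict with `type`, `path`, and `workspace_id` keys. Those key names, the event names, and the handler functions are assumptions, so check the actual payload your webhook delivers.

```python
def handle_upload(event: dict) -> str:
    """Hypothetical handler for newly uploaded files."""
    return f"analyze {event['path']} in {event['workspace_id']}"


def handle_delete(event: dict) -> str:
    """Hypothetical handler for deleted files."""
    return f"purge index for {event['path']}"


# Map event types to handlers; unknown types are ignored rather than failing.
HANDLERS = {
    "file_uploaded": handle_upload,
    "file_deleted": handle_delete,
}


def route_event(event: dict):
    """Validate the payload and dispatch it; return None for unknown events."""
    missing = {"type", "path", "workspace_id"} - event.keys()
    if missing:
        raise ValueError(f"payload missing keys: {sorted(missing)}")
    handler = HANDLERS.get(event["type"])
    return handler(event) if handler else None


print(route_event({"type": "file_uploaded", "path": "reports/a.md", "workspace_id": "ws1"}))
```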

Cost Efficiency:

Prefect pricing follows a pay-per-task model. The free tier includes substantial monthly task allocations suitable for development and small production workloads. Fast.io's free agent tier includes 50GB of storage and a monthly credit allowance that covers storage and bandwidth usage. Both platforms scale cost-effectively as agent operations grow.

Troubleshooting and Best Practices

Agent pipelines encounter predictable issues. Understanding common failure modes and implementing defensive patterns prevents production incidents and reduces debugging time when problems occur.

Common Problems and Solutions:

| Issue | Symptoms | Fix |
|---|---|---|
| LLM Timeout | Task fails after 60 seconds with no response | Increase the request timeout, use retries=5 for reliability, switch to faster models like gpt-4o-mini for production workloads |
| Rate Limits | 429 error from OpenAI or other providers | Implement exponential backoff with @task(retries=3, retry_delay_seconds=exponential_backoff(backoff_factor=10), retry_jitter_factor=0.5), cache prompts to reduce API calls, consider upgrading to higher rate limit plans |
| File Conflicts | Multiple agents overwrite same data | Use fast_io.acquire_lock(path) before processing and release_lock after, implement idempotent file naming with run IDs |
| Quota Exceeded | Fast.io credits depleted mid-flow | Monitor credit usage in the Fast.io dashboard, upgrade to a paid tier or compress files before upload |
| Prefect Worker Crash | Flow stops mid-execution, partial results | Design idempotent tasks that can safely retry, checkpoint intermediate state to Fast.io workspaces |

Best Practices for Production Agent Flows:

Log Prompts and Outputs: Store every LLM prompt and response as Prefect artifacts. This creates a complete record for debugging when agents produce unexpected results. Use the artifact API to attach JSON or markdown content that displays nicely in the Prefect UI.

Version Files Systematically: Use consistent path patterns that include version numbers. Structure outputs as v{version}/output/{timestamp}/{run_id}.json to enable historical lookup and comparison across runs.

Implement Human-in-the-Loop: For high-stakes agent decisions, pause flows for human approval before proceeding. Prefect's pause/resume functionality lets flows wait for external input. Build a simple UI that shows pending approvals and allows humans to approve, reject, or modify parameters.

Test Before Deploying: Prefect's testing utilities, such as the `prefect_test_harness` context manager, run flows against a temporary local database instead of your real API. They do not mock external services, so stub the LLM and storage calls yourself. Write tests that verify flow logic and task dependencies before deploying to production.

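Use Prefect Blocks for Secrets: Store API keys and credentials in Prefect Secret blocks rather than hard-coding them or scattering them across environment variables. Secret blocks are stored encrypted and their values are obfuscated when displayed. Create one in the UI or from Python with `Secret(value="...").save("openai-api-key")`, then read it in flow code with `Secret.load("openai-api-key").get()`; the block name here is only an example.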

Implement Idempotency: Design tasks to produce the same result regardless of how many times they run. Check if work already exists before executing:

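    import hashlib

    @task
    def safe_agent(prompt: str) -> str:
        # Built-in hash() varies between Python processes, so use a stable digest
        digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        path = f"outputs/{digest}.json"
        if fast_io.file_exists(path):
            return fast_io.get_file_url(path)

        # Generate new content (assumes generate_content returns the text itself)
        result = generate_content(prompt)
        fast_io.upload_file(path, result.encode())
        return fast_io.get_file_url(path)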
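The path layout from "Version Files Systematically" can be produced by a small helper. The function and argument names are illustrative, and the timestamp format is one reasonable choice rather than anything Fast.io requires.

```python
from datetime import datetime, timezone


def versioned_path(version: int, run_id: str, when: datetime) -> str:
    """Build v{version}/output/{timestamp}/{run_id}.json with a sortable UTC timestamp."""
    stamp = when.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"v{version}/output/{stamp}/{run_id}.json"


print(versioned_path(2, "abc123", datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc)))
# v2/output/20260105T090000Z/abc123.json
```

A fixed-width UTC timestamp keeps directory listings in chronological order, which makes comparing runs simpler.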

Error Handling and Logging:

Wrap agent calls in comprehensive error handling that captures context for debugging:

    @task(retries=3)
    def safe_agent(prompt: str):
        try:
            return generate_content(prompt)
        except Exception as e:
            # Log the error to Fast.io for human review
            error_log = f"Failed prompt: {prompt}\nError: {e}"
            fast_io.upload_file("errors/log.md", error_log.encode())
            # Re-raise so Prefect can retry
            raise

This records each error where a human can review it, while re-raising keeps the failure visible to Prefect so its retry logic still runs.

Frequently Asked Questions

Can Prefect orchestrate AI agents?

Yes. Prefect orchestrates AI agents with retries, caching, and dynamic mapping. It handles LLM unreliability through automatic retry logic and state recovery. Pairing Prefect with Fast.io provides persistent storage where agent outputs survive flow failures and become available for human review.

How do I set up Prefect for an agent?

Install dependencies with `pip install prefect openai 'clawhub[fastio]'`, then install the Fast.io skill using `clawhub install dbalve/fast-io`. Write @flow decorated functions with @task decorated agent calls, configure retries, and deploy to Prefect Cloud with `flow.deploy()`.

What storage works for Prefect agent workflows?

Fast.io provides 50GB of free storage with 251 MCP tools built for agent workflows. Key features include RAG-powered search, file locks for concurrent access, and workspaces that survive Prefect retries. The free tier includes a monthly credit allowance with no credit card required.

Prefect vs Airflow for agents?

Prefect defines workflows as ordinary Python functions, so a flow can branch at run time on agent outputs. Airflow DAGs are also written in Python, but the graph is largely fixed when the DAG file is parsed, which leaves less room for runtime decisions. Prefect's Python-native approach better matches agent workflows that need to adapt based on LLM responses.

How do I handle rate limits in Prefect agents?

Set `retries` on the task and pass either a list of increasing delays or `exponential_backoff(...)` to `retry_delay_seconds`, then add jitter with `retry_jitter_factor`. Cache prompts and responses to avoid redundant API calls. Monitor usage in provider dashboards and consider upgrading to higher rate limit tiers for production workloads.

How do I scale Prefect agent flows?

Work pools in Prefect Cloud scale from single workers to hundreds of concurrent executors. Use Docker or Kubernetes work pools for GPU-intensive inference. Set concurrency limits per pool to match API rate limits. The free tier supports development while paid plans handle enterprise-scale agent operations.

How do I integrate Fast.io webhooks?

Set your Prefect API webhook URL in Fast.io workspace settings. Configure webhooks to trigger on file upload or modify events. Prefect receives the webhook payload and can route to specific flows based on event type, enabling reactive agent pipelines.

What does a Prefect agent integration cost?

Prefect uses a pay-per-task pricing model with a generous free tier. Fast.io's free agent tier includes 50GB of storage and a monthly credit allowance. Both platforms scale cost-effectively, and Fast.io credits last longer when you compress files before upload.

Related Resources

Run Agent Prefect Integration workflows on Fast.io

50GB free storage, 251 MCP tools, no credit card needed. Agent outputs persist across runs, which suits Prefect agent workflows.