How to Automate AI Agent Infrastructure

AI agent infrastructure automation helps you scale agent workflows from one bot to thousands. Most teams focus on compute but overlook persistent storage. This guide shows how to set up self-managing infrastructure for your agents using modern tools and Fast.io's intelligent workspaces.

What Is AI Agent Infrastructure Automation?

AI agent infrastructure automation is the practice of using code and autonomous systems to provision, manage, and scale the environments where AI agents run. Unlike traditional software that runs on static servers, AI agents are dynamic: they spin up to perform a task, may require access to specialized GPUs for inference, and often need to persist state across ephemeral sessions. Automating this lifecycle involves defining the entire stack (compute, networking, security, and storage) as code (IaC), allowing agents to deploy themselves without human intervention.

The Core Components of Automated Infra

To build an autonomous system, you must automate four distinct layers:

- Compute Provisioning: Automatically requesting CPU and GPU resources when an agent needs to run. This often involves orchestrators like Kubernetes or serverless platforms that can instantiate containers in seconds.

- Network Configuration: Dynamically assigning IP addresses, opening firewalls, and configuring load balancers so agents can communicate with each other and the outside world securely.

- Security Policies: Enforcing "least privilege" access controls programmatically. An agent analyzing financial data should automatically be deployed into a restricted network zone with limited API access.

- Storage Mounting: Attaching persistent volumes or connecting to object storage so the agent can save its work. This is often the most neglected layer, leading to data loss when containers cycle.

From Manual Ops to Autonomous Swarms

In a manual setup, a DevOps engineer provisions a virtual machine, installs dependencies, and deploys the agent code. This works for five agents but fails at fifty. In an automated setup, the "master" agent or orchestrator detects a new task queue, calls a cloud API to provision the necessary infrastructure, deploys the worker agent, and tears it all down when the job is done.
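The provision, deploy, and tear-down cycle described above can be sketched in a few lines. This is a minimal illustration rather than a real cloud integration: `Provisioner`, `FakeProvisioner`, `drain_queue`, and `run_task` are hypothetical names, and the in-memory provisioner stands in for whatever cloud API your orchestrator would call.

```python
from dataclasses import dataclass, field
from queue import Empty, Queue
from typing import Callable, Dict, Protocol


class Provisioner(Protocol):
    """Hypothetical interface over a cloud API (not a real SDK)."""

    def provision(self, task_id: str) -> str: ...

    def teardown(self, node_id: str) -> None: ...


@dataclass
class FakeProvisioner:
    """In-memory stand-in so the sketch runs without cloud credentials."""

    active: set = field(default_factory=set)
    log: list = field(default_factory=list)

    def provision(self, task_id: str) -> str:
        node_id = f"node-{task_id}"
        self.active.add(node_id)
        self.log.append(("provision", node_id))
        return node_id

    def teardown(self, node_id: str) -> None:
        self.active.discard(node_id)
        self.log.append(("teardown", node_id))


def drain_queue(tasks: Queue, cloud: Provisioner,
                run_task: Callable[[str, str], str]) -> Dict[str, str]:
    """Provision a node per task, run the worker, and always tear down."""
    results: Dict[str, str] = {}
    while True:
        try:
            task_id = tasks.get_nowait()
        except Empty:
            return results
        node_id = cloud.provision(task_id)
        try:
            results[task_id] = run_task(node_id, task_id)
        except Exception as exc:  # a failed worker must not leak its node
            results[task_id] = f"failed: {exc}"
        finally:
            cloud.teardown(node_id)
```

The `finally` clause is the important design choice: infrastructure is released whether the worker succeeds or fails, so a crashing agent cannot leave paid resources running.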

According to Hutte.io, organizations using AI in DevOps have reported a substantial reduction in deployment failures. This shows the reliability gains of removing manual configuration from the loop. When infrastructure is code, every agent starts in a pristine, identical environment, eliminating "it works on my machine" bugs.


Why You Must Automate Agent Infrastructure

As AI agent fleets grow, the complexity of managing them increases exponentially. Automation does more than save time. You need it to keep things reliable, secure, and affordable.

Scale and Elasticity

Manual infrastructure is static. You pay for servers whether they are used or not. Automated infrastructure is elastic. When your agent fleet needs to handle a surge of tasks, such as processing end-of-month financial reports, the system automatically provisions hundreds of instances. When the queue empties, it terminates them. This elasticity ensures you can handle peak loads without keeping massive capacity on standby.

Reliability and Self-Healing

AI agents are complex software that can hang, crash, or enter infinite loops. Automated infrastructure introduces "self-healing" capabilities. Health checks track CPU use, memory, and API responses. If an agent becomes unresponsive, the orchestrator kills the container and starts a fresh replacement. Data from Fareastfirst indicates that companies practicing DevOps report 96% faster recovery times compared to traditional operations. For mission-critical agents, this can mean minutes of downtime instead of hours.
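The health-check-and-restart behavior can be illustrated with a small supervisor routine. This is a sketch under assumptions: `AgentHandle` and `heal` are hypothetical names, a heartbeat timestamp stands in for the CPU, memory, and API checks mentioned above, and `restart` is whatever callback your orchestrator exposes for replacing a container.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class AgentHandle:
    """Hypothetical record the supervisor keeps for each running agent."""

    name: str
    last_heartbeat: float  # seconds, on the same clock the supervisor uses
    restarts: int = 0


def heal(agents: List[AgentHandle],
         restart: Callable[[AgentHandle], None],
         timeout_s: float = 30.0,
         now: Optional[float] = None) -> List[str]:
    """Restart every agent whose heartbeat is older than timeout_s.

    Returns the names of the agents that were restarted.
    """
    current = time.monotonic() if now is None else now
    restarted = []
    for agent in agents:
        if current - agent.last_heartbeat > timeout_s:
            restart(agent)                   # e.g. kill and recreate the container
            agent.last_heartbeat = current   # give the replacement a fresh window
            agent.restarts += 1
            restarted.append(agent.name)
    return restarted
```

In practice an orchestrator such as Kubernetes runs this kind of loop for you; the sketch only shows the decision it makes on each pass.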

Cost Optimization

The cloud is expensive, especially GPU compute. Running a GPU instance around the clock for an agent that only works a few hours a day wastes money. Automation enables "spot instance" usage, where agents run on cheaper, interruptible spare capacity. Because the infrastructure code handles interruptions by restarting the job on a new node, you can cut compute costs substantially.
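To make the always-on versus elastic comparison concrete, here is a small worked calculation. Every figure in it is a made-up placeholder chosen for easy arithmetic, not a real cloud price or a measured result; substitute your provider's rates and your own utilization.

```python
def monthly_cost(hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Cost of running one instance for hours_per_day over a month."""
    return hourly_rate * hours_per_day * days


def savings_fraction(baseline: float, optimized: float) -> float:
    """Fraction of the baseline cost that the optimized setup avoids."""
    if baseline <= 0:
        raise ValueError("baseline cost must be positive")
    return 1 - optimized / baseline


# Placeholder assumptions (not real prices).
ON_DEMAND_RATE = 2.00   # currency units per GPU-hour, on-demand
SPOT_RATE = 0.80        # currency units per GPU-hour, spot
BUSY_HOURS = 4          # hours per day the agent actually works

always_on = monthly_cost(ON_DEMAND_RATE, 24)
elastic_spot = monthly_cost(SPOT_RATE, BUSY_HOURS)
saved = savings_fraction(always_on, elastic_spot)
```

With these placeholder inputs, the always-on instance costs 1,440 per month and the elastic spot setup costs 96, which shows how the saving comes from two separate factors: fewer billed hours and a lower hourly rate.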

Security Compliance

In manual setups, security rules are often applied inconsistently. One server might have an open port that another doesn't. With automated infrastructure, security is defined in code and applied universally. Every agent is born with the exact permissions it needs and nothing more. This helps enterprises in regulated fields stay compliant.

The Missing Link: Persistent State and Storage

Compute automation handles where the agent runs, but it does not address what the agent remembers. AI agents are fundamentally stateful: they have memories, context, and generated outputs. Yet they often run in ephemeral containers that are wiped clean upon termination, so anything not written to durable storage is lost.

Why Local Storage Fails

Relying on the local disk of a container is a recipe for data loss. When a spot instance is reclaimed by the cloud provider or a Kubernetes pod is rescheduled, that local disk vanishes. Your agent's logs, intermediate reasoning steps, and final reports are lost forever.

S3 vs. Fast.io for Agents

Object storage like S3 keeps data safe but is awkward for agents to use. It requires complex API calls (PutObject, GetObject), has no concept of "files" (only objects), and offers no easy way for humans to verify what the agent wrote without downloading everything.

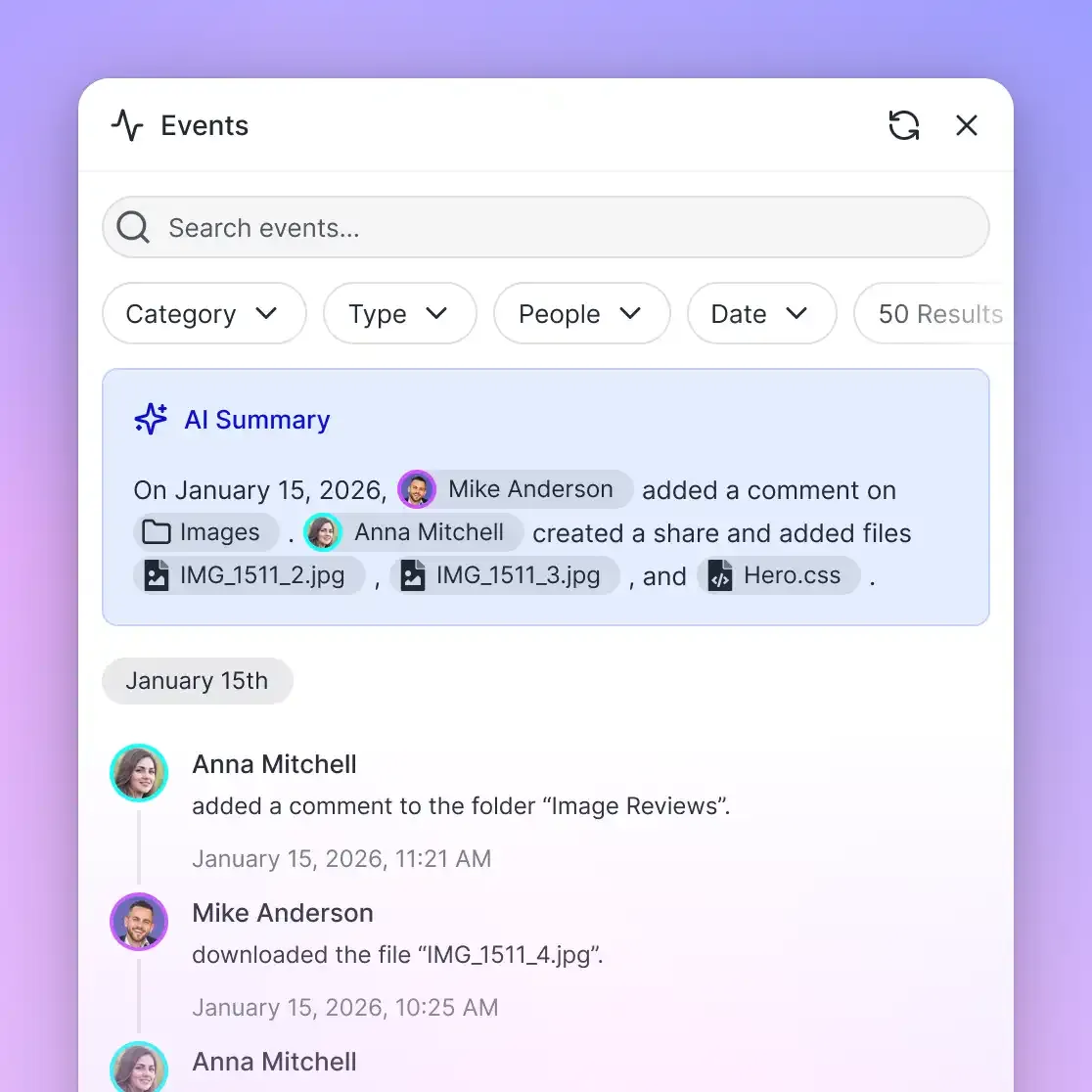

The Fast.io Advantage

Fast.io provides persistent file storage that agents can share.

- Decoupled State: Agents write to Fast.io workspaces. If the compute node dies, the data remains safe. A replacement agent can spin up, read the workspace, and resume exactly where the previous one left off.

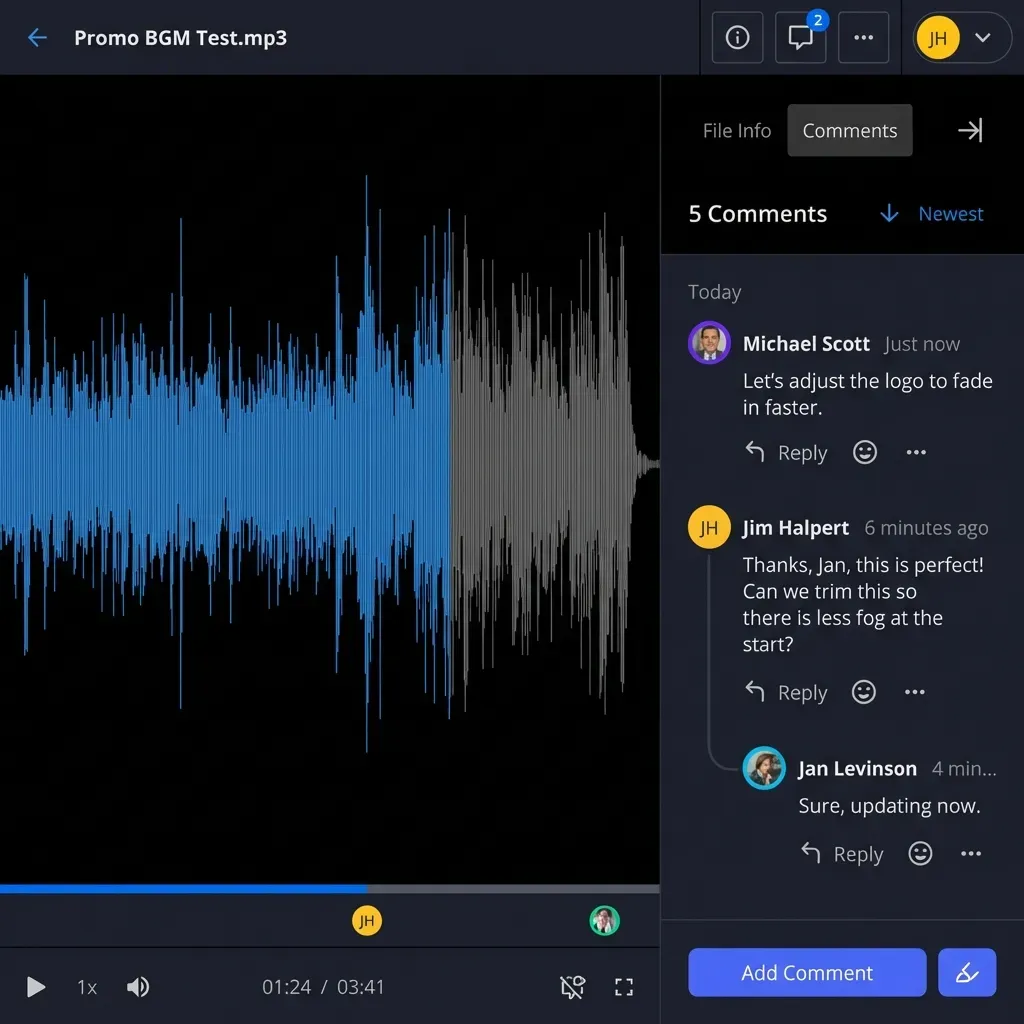

- Intelligent Indexing: Unlike a dumb hard drive, Fast.io automatically indexes every file an agent uploads. This allows other agents to search that content semantically ("Find the contract analysis from yesterday") rather than just by filename.

- Human Oversight: Because Fast.io is also a user-friendly workspace, human supervisors can log in and view the files an agent is creating in real time. This "human-in-the-loop" visibility is hard to get with raw database storage or S3 buckets.
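The "resume where the previous one left off" pattern from the Decoupled State point can be sketched as a checkpoint file in a shared workspace. This sketch does not use the Fast.io API: a plain directory stands in for the workspace, and `save_checkpoint`, `load_checkpoint`, and `process` are hypothetical names. The atomic rename shown assumes a local filesystem.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import List, Optional


def save_checkpoint(workspace: Path, job_id: str, state: dict) -> None:
    """Write state via a temp file so a crash never leaves a half-written file."""
    workspace.mkdir(parents=True, exist_ok=True)
    target = workspace / f"{job_id}.checkpoint.json"
    fd, tmp_name = tempfile.mkstemp(dir=str(workspace), suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as tmp:
        json.dump(state, tmp)
    os.replace(tmp_name, target)  # atomic on the same local filesystem


def load_checkpoint(workspace: Path, job_id: str) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists."""
    target = workspace / f"{job_id}.checkpoint.json"
    if not target.exists():
        return {"next_item": 0, "results": []}
    return json.loads(target.read_text(encoding="utf-8"))


def process(workspace: Path, job_id: str, items: List[str],
            stop_after: Optional[int] = None) -> dict:
    """Resume from the last checkpoint; stop_after simulates an interruption."""
    state = load_checkpoint(workspace, job_id)
    done_this_run = 0
    for index in range(state["next_item"], len(items)):
        if stop_after is not None and done_this_run >= stop_after:
            break
        state["results"].append(items[index].upper())  # placeholder "work"
        state["next_item"] = index + 1
        save_checkpoint(workspace, job_id, state)
        done_this_run += 1
    return state
```

Calling `process` a second time with the same `job_id` picks up at `next_item`, which is the behavior a replacement agent needs after its predecessor's node is reclaimed.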

Step-by-Step: Building an Automated Agent Pipeline

Building a reliable automated pipeline requires connecting your compute, orchestration, and storage layers into a cohesive system. Here is a blueprint for deploying your first automated agent fleet.

1. Containerize with Docker Best Practices

Your agent must be packaged as an immutable artifact. Create a Dockerfile that installs your agent's runtime (Python, Node.js) and dependencies.

- Tip: Use multi-stage builds to keep your final image small. A smaller image means faster boot times when scaling up.

- Tip: Inject configuration via environment variables, not hardcoded files. This allows the same image to run in "dev", "test", and "prod" environments.
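As an illustration of the environment-variable tip, the agent can build its configuration from the process environment at startup. The variable names (`AGENT_ENV`, `AGENT_API_BASE_URL`, `AGENT_MAX_CONCURRENCY`) and the `AgentConfig` class are assumptions made for this sketch, not names from any particular framework.

```python
import os
from dataclasses import dataclass
from typing import Mapping

ALLOWED_ENVIRONMENTS = {"dev", "test", "prod"}


@dataclass(frozen=True)
class AgentConfig:
    environment: str
    api_base_url: str
    max_concurrency: int


def load_config(env: Mapping[str, str] = os.environ) -> AgentConfig:
    """Build the agent's config from environment variables (names are hypothetical)."""
    environment = env.get("AGENT_ENV", "dev")
    if environment not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"AGENT_ENV must be one of {sorted(ALLOWED_ENVIRONMENTS)}")
    if "AGENT_API_BASE_URL" not in env:
        # Fail fast at startup instead of at the first API call.
        raise RuntimeError("AGENT_API_BASE_URL must be set")
    return AgentConfig(
        environment=environment,
        api_base_url=env["AGENT_API_BASE_URL"],
        max_concurrency=int(env.get("AGENT_MAX_CONCURRENCY", "4")),
    )
```

Accepting the environment as a parameter keeps the function easy to test: pass a plain dictionary instead of mutating `os.environ`.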

2. Define Infrastructure as Code (IaC)

Stop clicking buttons in the AWS or Google Cloud console. Use Terraform or Pulumi to define your environment. You need to script:

- The Network: A Virtual Private Cloud (VPC) to isolate your agents.

- The Compute: An Auto Scaling Group (ASG) or Kubernetes cluster.

- The Identity: IAM roles that grant your agents permission to access specific APIs.
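One way to keep these definitions in code is to generate Terraform's JSON configuration syntax (a `.tf.json` file) from a script. The sketch below covers only the network and identity pieces and omits compute; the resource names, CIDR block, and the `agent_stack` helper are illustrative assumptions, and the output should be checked with `terraform validate` before use.

```python
import json


def agent_stack(name: str, cidr_block: str = "10.0.0.0/16") -> dict:
    """Return a Terraform JSON document with a VPC and an IAM role for agents."""
    # Trust policy letting EC2 instances assume the role (assumes agents run on EC2).
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return {
        "resource": {
            "aws_vpc": {
                "agents": {
                    "cidr_block": cidr_block,
                    "tags": {"Name": f"{name}-vpc"},
                }
            },
            "aws_iam_role": {
                "agent": {
                    "name": f"{name}-agent-role",
                    # Terraform expects the policy as a JSON-encoded string.
                    "assume_role_policy": json.dumps(trust_policy),
                }
            },
        }
    }


def render(stack: dict) -> str:
    """Serialize the stack; write the result to a file such as main.tf.json."""
    return json.dumps(stack, indent=2, sort_keys=True)
```

Hand-written HCL or Pulumi works just as well; the point is that the environment lives in version control and is reviewed like any other code.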

3. Integrate Persistent Storage

This is where Fast.io comes in. Instead of mounting a complex EBS volume, configure your agent to use the Fast.io MCP server or API. Pass your Fast.io API key as a secure secret.

- Action: When the agent starts, it should check a specific Fast.io folder for "jobs" (input files).

- Action: When the agent finishes, it uploads the result to an "output" folder.
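The two actions above amount to a simple "jobs in, results out" loop. The sketch below shows that loop against a local directory standing in for the workspace; it does not call the Fast.io MCP server or API, and the folder names, the `.txt` filter, and `run_pending_jobs` are assumptions for illustration.

```python
from pathlib import Path
from typing import Callable, List


def run_pending_jobs(workspace: Path, handle: Callable[[str], str]) -> List[str]:
    """Process each file in jobs/ once and write its result to output/."""
    jobs_dir = workspace / "jobs"
    output_dir = workspace / "output"
    output_dir.mkdir(parents=True, exist_ok=True)
    completed: List[str] = []
    if not jobs_dir.is_dir():
        return completed
    for job_file in sorted(jobs_dir.glob("*.txt")):
        result_file = output_dir / f"{job_file.stem}.result.txt"
        if result_file.exists():
            continue  # already handled, so a restarted agent does not redo work
        result = handle(job_file.read_text(encoding="utf-8"))
        result_file.write_text(result, encoding="utf-8")
        completed.append(job_file.name)
    return completed
```

Because a job counts as done only when its result file exists, running the function again after a crash is safe: finished jobs are skipped and unfinished ones are retried.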

4. Implement Orchestration Logic

Deploy your agents to a platform like Kubernetes. Define a Deployment manifest that specifies how many replicas you want. Add livenessProbe and readinessProbe definitions.

- Liveness Probe: "Is the agent still running?" If no, restart it.

- Readiness Probe: "Is the agent ready to take work?" If yes, send traffic.
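For HTTP-based probes, the agent needs endpoints that answer those two questions. Here is a minimal sketch using only the Python standard library; the paths `/healthz` and `/readyz`, the port, and the `AgentState` class are conventions assumed for this example, and your probe definitions would need to point at the same paths.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class AgentState:
    """Shared flag the agent sets once it can accept work."""

    def __init__(self) -> None:
        self.ready = threading.Event()


def make_handler(state: AgentState):
    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path == "/healthz":
                # Liveness: the process is up and able to answer requests.
                self._reply(200, b"alive")
            elif self.path == "/readyz":
                # Readiness: only report OK once startup work has finished.
                if state.ready.is_set():
                    self._reply(200, b"ready")
                else:
                    self._reply(503, b"not ready")
            else:
                self._reply(404, b"not found")

        def _reply(self, status: int, body: bytes) -> None:
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep probe traffic out of the agent's logs

    return ProbeHandler


def start_probe_server(state: AgentState, host: str = "0.0.0.0",
                       port: int = 8080) -> HTTPServer:
    """Serve probe endpoints on a background thread and return the server."""
    server = HTTPServer((host, port), make_handler(state))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

The agent would call `start_probe_server(state)` at boot and `state.ready.set()` after loading models or credentials, so traffic only arrives once it can be handled.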

5. Set Up CI/CD Deployment

Connect your Git repository to a CI/CD tool like GitHub Actions.

- On Push: Run unit tests on the agent code.

- On Merge: Build the new Docker image, push it to a registry, and update the Terraform/Kubernetes definition to reference the new version.

Common Pitfalls in Agent Infrastructure

Even experienced teams make mistakes when automating agent infrastructure. Avoid these common traps to ensure your fleet runs smoothly.

Over-Privileging Agents

Do not give your agent "Admin" access to your cloud account. If the agent is compromised, the attacker controls your entire infrastructure. Use granular permissions: allow the agent to read from this specific bucket and write to that specific Fast.io workspace, and nothing else.
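As a sketch of what "granular" looks like on the AWS side, the helper below builds an IAM policy document that allows reading objects from a single bucket, plus a small check that flags wildcard grants before a policy is deployed. The function names and the bucket name in the usage note are hypothetical, and Fast.io workspace permissions are configured separately and are not modeled here.

```python
from typing import List


def read_only_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """IAM policy document allowing only s3:GetObject on one bucket (and prefix)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


def _as_list(value) -> list:
    return value if isinstance(value, list) else [value]


def find_wildcards(policy: dict) -> List[str]:
    """Return a description of every overly broad Allow grant in the policy."""
    problems = []
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        for action in _as_list(statement.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                problems.append(f"wildcard action: {action}")
        for resource in _as_list(statement.get("Resource", [])):
            if resource == "*":
                problems.append("wildcard resource: *")
    return problems
```

For example, `find_wildcards(read_only_bucket_policy("agent-inputs"))` returns an empty list, while an admin-style policy with `"Action": "*"` is reported. A check like this fits naturally into the CI step that applies your infrastructure code.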

Ignoring Rate Limits

When you automate scaling, you can accidentally create a DDoS attack against your own dependencies. If many agents spin up and all hit the OpenAI API simultaneously, you will hit rate limits quickly. Implement "backoff and retry" logic and use a shared token bucket to manage API consumption across the fleet.
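Both techniques are short enough to sketch. The token bucket below is in-process only, so it coordinates threads within one agent; sharing a budget across a whole fleet would require an external store, which is outside this sketch. `RateLimited` is a hypothetical exception standing in for whatever error your API client raises on an HTTP 429 response.

```python
import random
import threading
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_s
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()
        self.lock = threading.Lock()

    def try_acquire(self) -> bool:
        """Take one token if available; never blocks."""
        with self.lock:
            now = self.clock()
            elapsed = now - self.updated
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
            self.updated = now
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False


class RateLimited(Exception):
    """Hypothetical error raised by the API client on a rate-limit response."""


def call_with_backoff(call: Callable[[], object], retries: int = 5,
                      base_delay_s: float = 0.5, max_delay_s: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep):
    """Retry `call` on RateLimited using exponential backoff with full jitter."""
    for attempt in range(retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == retries:
                raise
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            # Random delay so agents that failed together do not retry together.
            sleep(random.uniform(0, ceiling))
```

The jitter matters as much as the exponential growth: without it, agents that were throttled at the same moment retry at the same moment and hit the limit again.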

Flying Blind (Lack of Observability)

An automated system that fails silently is a nightmare. You need centralized logging. Since agents are ephemeral, logs written only to local files disappear with the container. Configure agents to stream logs to a service like CloudWatch, Datadog, or even a text file in a Fast.io "logs" workspace for simple, persistent debugging.
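A common way to make logs easy to ship is to write them as structured lines to standard output and let the platform's log collector forward them. The sketch below uses only the standard `logging` module; the field names (`ts`, `level`, `agent`, `message`) and the `build_logger` helper are assumptions, so match them to whatever your log service expects.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "agent": getattr(record, "agent_id", "unknown"),
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(agent_id: str, stream=None) -> logging.LoggerAdapter:
    """Return a logger that tags every line with the agent's ID."""
    logger = logging.getLogger(f"agent.{agent_id}")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logging.LoggerAdapter(logger, {"agent_id": agent_id})
```

Tagging each line with the agent ID is what makes a fleet debuggable: once logs from many short-lived containers land in one place, the ID is the only way to follow a single agent's run.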

State Fragmentation

Don't let agents store state in random places (some in a DB, some in local files, some in memory). Enforce a "single source of truth" policy where all durable artifacts are saved to your persistent storage layer (Fast.io). This makes debugging and auditing easier.

Top Tools for Agent Infrastructure

The ecosystem for agent infrastructure is evolving rapidly. These tools are among the current industry leaders.

Compute & Orchestration

- Kubernetes: The go-to tool for container orchestration. Complex but powerful.

- Fly.io / Modal: Developer-friendly platforms ideal for running agents close to users or on specialized GPUs without managing servers.

- Terraform: The universal language for infrastructure as code.

Storage & Persistence

- Fast.io: The premier choice for agent workspaces. It combines the ease of a file system with the power of an MCP server. It offers a free tier for agents (50GB of storage) and built-in RAG, making it better suited to agents than plain object storage.

CI/CD & Operations

- GitHub Actions: The standard for automating build and deploy pipelines.

- Vault (HashiCorp): Essential for managing the secrets (API keys) your agents need to function.

Observability

- LangSmith: A specialized tool for tracing the execution paths of AI agents, crucial for understanding why an agent made a specific decision.

- Prometheus: For monitoring the health of the underlying infrastructure (CPU, Memory, Disk).

Frequently Asked Questions

How do I automate AI agent deployment?

Automate deployment by containerizing your agent with Docker and using a CI/CD pipeline (like GitHub Actions) to build and push images. Use Infrastructure as Code (Terraform) to define the environment, and an orchestrator (Kubernetes) to manage the running containers.

What is the best storage for AI agents?

Fast.io is the best storage for AI agents because it provides a persistent, intelligent workspace that survives container restarts. Unlike S3, it offers an MCP server for natural language integration and built-in RAG, allowing agents to search and cite their own data.

Can AI agents repair their own infrastructure?

Yes. By using orchestrators with self-healing capabilities (like Kubernetes), crashed agents are automatically restarted. Advanced setups allow agents to trigger infrastructure code to provision new resources when they detect performance bottlenecks.

What are the benefits of agent infrastructure automation?

Automation provides three main benefits: scalability (handling thousands of agents), reliability (96% faster recovery from failures), and cost efficiency (using ephemeral spot instances to reduce cloud bills).

How does Fast.io help with agent automation?

Fast.io acts as the persistent memory layer. It allows ephemeral agents to save their work to a secure, human-accessible workspace. This ensures data is never lost when an agent spins down and enables smooth handoffs between agent instances.


Give Your Agents a Permanent Home

Stop losing agent data to ephemeral containers. Get 50GB of persistent, intelligent storage for your AI agents—free forever. Built for agent infrastructure automation workflows.